
Knowledge Planet articles: a crawler that fetches all of them

李魔佛 posted an article • 0 comments • 3165 views • 2022-06-03 13:49

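Ironically, Knowledge Planet has no table of contents of its own, so the only way to browse articles is the timeline, and posts from a year ago are practically never seen. On top of that, its search function is full of bugs and usually finds nothing.

Everyone in the group has complained about that search function at some point.

So I had no choice but to write a program that scrapes my own articles and builds an article index from them: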

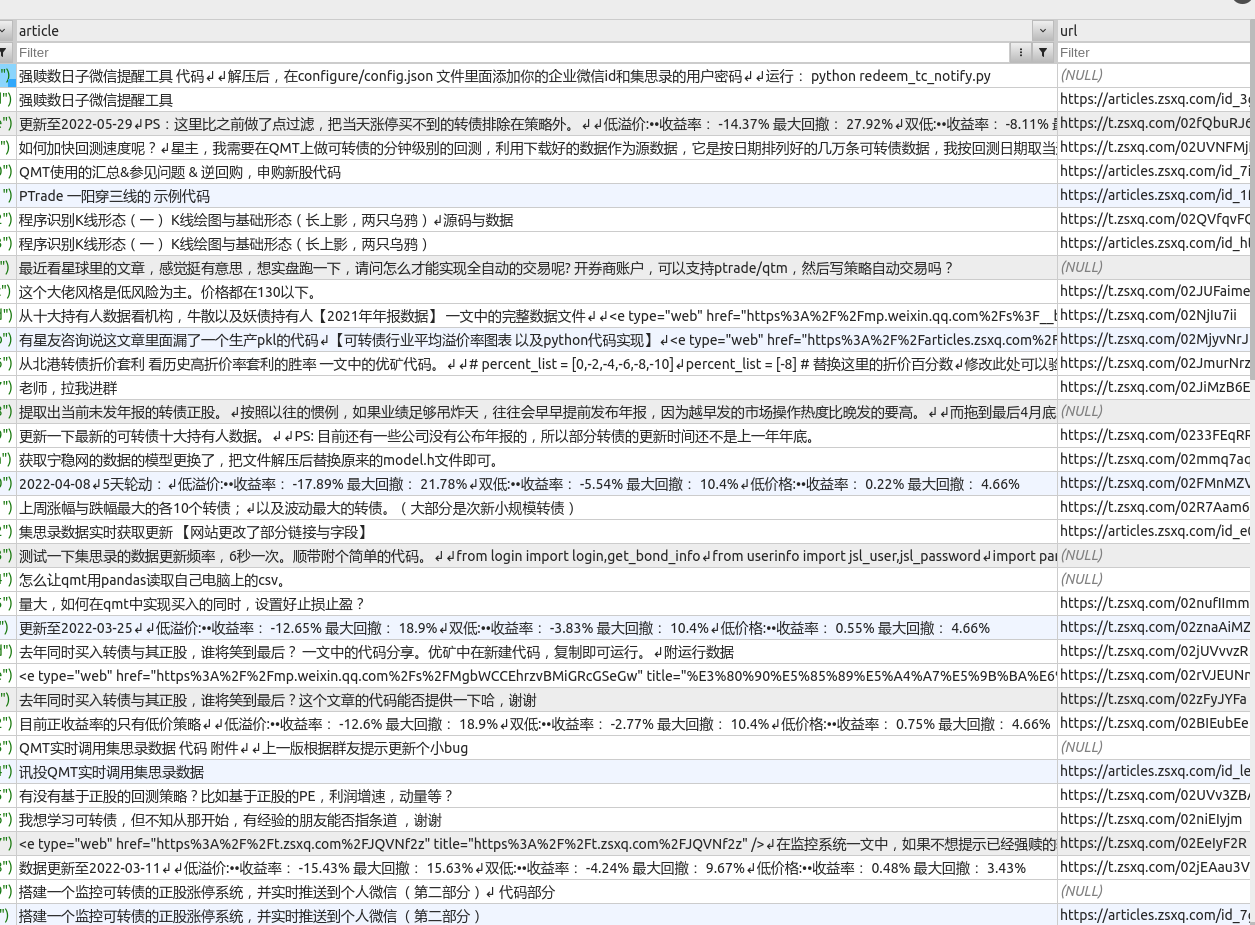

The generated markdown file

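Each time, just run `python main.py` to get links to the latest Planet articles.

If you want the source code, contact me through the WeChat official account.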
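The author's `main.py` is not published here. The sketch below only illustrates the index-building step. It assumes the scraper has already collected each post's title, URL and date. `Article` and `build_markdown_index` are hypothetical names, and fetching from the Planet is deliberately left out.

```python
from datetime import date
from typing import Iterable, NamedTuple


class Article(NamedTuple):
    """One scraped post; these fields are assumptions, not the Planet's data model."""
    title: str
    url: str
    published: date


def build_markdown_index(articles: Iterable[Article]) -> str:
    """Render articles as a markdown list grouped by year, newest first."""
    lines = []
    current_year = None
    for art in sorted(articles, key=lambda a: a.published, reverse=True):
        if art.published.year != current_year:
            current_year = art.published.year
            if lines:
                lines.append("")  # blank line between year sections
            lines.append(f"## {current_year}")
            lines.append("")
        lines.append(f"- {art.published.isoformat()} [{art.title}]({art.url})")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_markdown_index([
        Article("old post", "https://example.com/1", date(2021, 5, 1)),
        Article("new post", "https://example.com/2", date(2022, 6, 3)),
    ]))
```

Grouping by year is one choice among many. A flat, date-sorted list would serve just as well as a table of contents.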


Scrapy source analysis <1>: the entry point and how it runs

李魔佛 posted an article • 0 comments • 10513 views • 2019-08-31 10:47

When you run `scrapy crawl example`, the spider we wrote gets executed.

Let's trace Scrapy's execution flow through its source code:

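Running a `scrapy` subcommand invokes a command class (conventionally named `Command`, a subclass of `ScrapyCommand`), and its `run()` method does the work. The example below is a custom command (note the "My Defined" description) that runs every spider in the project. The built-in `crawl` command follows the same pattern: it calls `crawler_process.crawl()` and then `crawler_process.start()`.

```python
from scrapy.commands import ScrapyCommand


class Command(ScrapyCommand):
    requires_project = True

    def syntax(self):
        return '[options]'

    def short_desc(self):
        return 'Runs all of the spiders - My Defined'

    def run(self, args, opts):
        print('==================')
        print(type(self.crawler_process))
        # Names of all spiders in the project
        # (crawler_process.spiders is a deprecated alias in Scrapy 1.x)
        spider_list = self.crawler_process.spider_loader.list()
        for name in spider_list:
            print('=================')
            print(name)
            # Schedule each spider; the option values are passed on as spider kwargs
            self.crawler_process.crawl(name, **opts.__dict__)
        # Start the Twisted reactor; blocks until all crawls have finished
        self.crawler_process.start()
```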

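Next, look at `crawler_process`. It is an attribute of `ScrapyCommand`, and Scrapy's command-line entry point sets it to a `CrawlerProcess` instance before calling `run()`. `CrawlerProcess` is in turn a subclass of `CrawlerRunner`.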
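The toy model below makes that relationship concrete: the command *has* a crawler process, and the process *is a* runner. It uses stand-in classes that only borrow Scrapy's names. They are not Scrapy's code, and `make_command` is a hypothetical helper that roughly mirrors what the command-line entry point does.

```python
class CrawlerRunner:
    """Stand-in for scrapy.crawler.CrawlerRunner (illustration only)."""

    def __init__(self, settings=None):
        self.settings = settings or {}


class CrawlerProcess(CrawlerRunner):
    """Stand-in: inherits from CrawlerRunner, like the real class."""


class ScrapyCommand:
    """Stand-in: holds a crawler process as an attribute, does not inherit from one."""
    crawler_process = None


def make_command(cmd_cls, settings):
    # Create the command, then attach a CrawlerProcess to it before run() is called
    cmd = cmd_cls()
    cmd.crawler_process = CrawlerProcess(settings)
    return cmd


if __name__ == "__main__":
    cmd = make_command(ScrapyCommand, {"BOT_NAME": "demo"})
    print(type(cmd.crawler_process).__mro__)
```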

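The main job of the `CrawlerRunner` constructor is this:

```python
# Excerpt from scrapy/crawler.py; _get_spider_loader is defined in the same module
from scrapy.settings import Settings


class CrawlerRunner(object):
    def __init__(self, settings=None):
        # Accept a plain dict (or nothing) and wrap it in a Settings object
        if isinstance(settings, dict) or settings is None:
            settings = Settings(settings)
        self.settings = settings
        self.spider_loader = _get_spider_loader(settings)  # build the spider loader
        self._crawlers = set()
        self._active = set()
        self.bootstrap_failed = False
```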

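1. Look up the spider loader class in the settings

```python
# Excerpt from scrapy/crawler.py
from scrapy.utils.misc import load_object


def _get_spider_loader(settings):
    cls_path = settings.get('SPIDER_LOADER_CLASS')
    # The project's settings.py does not define SPIDER_LOADER_CLASS, so this value
    # comes from Scrapy's default settings (code block A below):
    # SPIDER_LOADER_CLASS = 'scrapy.spiderloader.SpiderLoader'
    loader_cls = load_object(cls_path)
    # load_object turns a dotted path string such as 'scrapy.spiderloader.SpiderLoader'
    # into the object it names (here, a class); its code is in code block B below
    return loader_cls.from_settings(settings.frozencopy())
```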

Scrapy's default settings file, `scrapy/settings/default_settings.py`:

```python
# Code block A (excerpt; many settings omitted)
SCHEDULER = 'scrapy.core.scheduler.Scheduler'
SCHEDULER_DISK_QUEUE = 'scrapy.squeues.PickleLifoDiskQueue'
SCHEDULER_MEMORY_QUEUE = 'scrapy.squeues.LifoMemoryQueue'
SCHEDULER_PRIORITY_QUEUE = 'scrapy.pqueues.ScrapyPriorityQueue'
SPIDER_LOADER_CLASS = 'scrapy.spiderloader.SpiderLoader'  # <- this is the value used
SPIDER_LOADER_WARN_ONLY = False
SPIDER_MIDDLEWARES = {}
```
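Why does the default value win here? Scrapy settings are layered by priority, so a value set in the project's settings.py overrides the built-in default, and anything the project leaves out falls through to it. The sketch below shows that lookup order under two assumptions. It uses plain dicts in place of Scrapy's `Settings` object, and it uses the standard library's `ChainMap`, which is not what Scrapy uses internally. The `effective_settings` name is hypothetical.

```python
from collections import ChainMap

# Plain-dict stand-ins for Scrapy's layered settings (illustration only)
DEFAULTS = {
    'SPIDER_LOADER_CLASS': 'scrapy.spiderloader.SpiderLoader',
    'SPIDER_LOADER_WARN_ONLY': False,
}


def effective_settings(project_settings):
    # Keys found in the project settings shadow the defaults;
    # anything missing falls through to DEFAULTS.
    return ChainMap(project_settings, DEFAULTS)


settings = effective_settings({'BOT_NAME': 'example', 'SPIDER_MODULES': ['example.spiders']})
print(settings['SPIDER_LOADER_CLASS'])  # not set in settings.py, so the default is used
```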

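Implementation of `load_object` (exception handling removed for brevity):

```python
# Code block B
from importlib import import_module  # standard-library module


def load_object(path):
    # Split 'scrapy.spiderloader.SpiderLoader' into the module path
    # 'scrapy.spiderloader' and the attribute name 'SpiderLoader'
    dot = path.rindex('.')
    module, name = path[:dot], path[dot + 1:]
    mod = import_module(module)
    # Fetch the named attribute (here, the class) from the imported module
    obj = getattr(mod, name)
    return obj
```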

Trying it out in IPython:

```
In [33]: mod = import_module(module)

In [34]: mod
Out[34]: <module 'scrapy.spiderloader' from '/home/xda/anaconda3/lib/python3.7/site-packages/scrapy/spiderloader.py'>

In [35]: getattr(mod,name)
Out[35]: scrapy.spiderloader.SpiderLoader

In [36]: obj = getattr(mod,name)

In [37]: obj
Out[37]: scrapy.spiderloader.SpiderLoader

In [38]: type(obj)
Out[38]: type
```
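For comparison, here is a sketch of `load_object` with error handling put back. It is loosely modelled on Scrapy's version, but the exception types and messages are this sketch's own choice. It runs against standard-library paths, so no Scrapy install is needed.

```python
from importlib import import_module


def load_object(path):
    """Import 'package.module.Name' and return the object it names."""
    try:
        dot = path.rindex('.')
    except ValueError:
        raise ValueError(f"Error loading object {path!r}: not a full path") from None
    module, name = path[:dot], path[dot + 1:]
    mod = import_module(module)  # raises ModuleNotFoundError if the module is missing
    try:
        return getattr(mod, name)
    except AttributeError:
        raise NameError(f"Module {module!r} doesn't define any object named {name!r}") from None


if __name__ == "__main__":
    print(load_object('collections.OrderedDict'))  # the class object itself
```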

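So in `_get_spider_loader`, `loader_cls` is `SpiderLoader`, and the function ultimately returns `SpiderLoader.from_settings(settings.frozencopy())`, passing in a read-only copy of the settings.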

Next, look at `SpiderLoader.from_settings`:

```python
@classmethod
def from_settings(cls, settings):
    return cls(settings)
```

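It simply constructs an instance with `cls(settings)`, so all the real work happens in `__init__`:

```python
# Excerpt from scrapy/spiderloader.py
from collections import defaultdict


class SpiderLoader(object):
    """
    SpiderLoader is a class which locates and loads spiders
    in a Scrapy project.
    """

    def __init__(self, settings):
        # Module names from the project's settings.py, a list that
        # `scrapy startproject` generates, e.g. SPIDER_MODULES = ['myproject.spiders']
        self.spider_modules = settings.getlist('SPIDER_MODULES')
        self.warn_only = settings.getbool('SPIDER_LOADER_WARN_ONLY')
        self._spiders = {}
        self._found = defaultdict(list)
        self._load_all_spiders()  # load all spiders
```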
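Why go through a `from_settings` classmethod instead of calling the constructor directly? `_get_spider_loader` only knows the class from a string, so an alternate constructor gives every loader class one agreed-upon entry point. Because `cls` is whatever class the method is called on, a subclass named in `SPIDER_LOADER_CLASS` gets an instance of itself for free. The toy version below illustrates this; `BaseLoader` and `VerboseLoader` are hypothetical names.

```python
class BaseLoader:
    """Toy spider loader (hypothetical, not Scrapy's)."""

    def __init__(self, settings):
        self.spider_modules = list(settings.get('SPIDER_MODULES', []))

    @classmethod
    def from_settings(cls, settings):
        # `cls` is whichever class this was called on, so subclasses
        # get instances of themselves without overriding this method
        return cls(settings)


class VerboseLoader(BaseLoader):
    """A subclass someone might name in SPIDER_LOADER_CLASS."""


loader = VerboseLoader.from_settings({'SPIDER_MODULES': ['myproject.spiders']})
print(type(loader).__name__, loader.spider_modules)
```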

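The heart of it is `_load_all_spiders`. Here it is:

```python
# SpiderLoader._load_all_spiders (excerpt from scrapy/spiderloader.py, simplified)
from scrapy.utils.misc import walk_modules


def _load_all_spiders(self):
    for name in self.spider_modules:
        # walk_modules imports the package and, recursively, every module in it
        for module in walk_modules(name):
            # Collect the spider classes defined in that module into self._spiders
            self._load_spiders(module)
    # Warn (UserWarning) if several spider classes share the same `name`
    self._check_name_duplicates()
```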
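`walk_modules` lives in `scrapy.utils.misc`. The sketch below implements the same idea with only the standard library: import a package, then recurse into its submodules with `pkgutil.iter_modules`. It is an approximation written for illustration, not Scrapy's code. A standard-library package stands in for your project's spiders package.

```python
import pkgutil
from importlib import import_module
from types import ModuleType
from typing import List


def walk_modules(path: str) -> List[ModuleType]:
    """Import the module at `path` and, if it is a package, every module below it."""
    mods = []
    mod = import_module(path)
    mods.append(mod)
    if hasattr(mod, '__path__'):  # only packages have __path__
        for _, subname, ispkg in pkgutil.iter_modules(mod.__path__):
            fullpath = path + '.' + subname
            if ispkg:
                mods += walk_modules(fullpath)  # recurse into sub-packages
            else:
                mods.append(import_module(fullpath))
    return mods


if __name__ == '__main__':
    # Inside a Scrapy project this would be walk_modules('myproject.spiders')
    print([m.__name__ for m in walk_modules('json')])
```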

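Next, `_load_spiders`. Its core is `iter_spider_classes` from `scrapy/utils/spider.py`:

```python
import inspect

import six


def iter_spider_classes(module):
    from scrapy.spiders import Spider

    # Iterate over every top-level name defined in the module
    for obj in six.itervalues(vars(module)):
        if inspect.isclass(obj) and \
                issubclass(obj, Spider) and \
                obj.__module__ == module.__name__ and \
                getattr(obj, 'name', None):
            # A class that subclasses Spider, is defined in this module
            # (not merely imported into it) and has a non-empty `name`
            yield obj
```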
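The self-contained sketch below shows the four filters in action without a Scrapy project. `Spider` is a stand-in base class, the module is built by hand, and `load_spiders` is a hypothetical condensed version of `_load_spiders` combined with the duplicate-name check. `vars(module).values()` is the plain Python 3 spelling of `six.itervalues(vars(module))`.

```python
import inspect
import types
import warnings
from collections import defaultdict


class Spider:
    """Stand-in base class (not scrapy.spiders.Spider)."""
    name = None


def iter_spider_classes(module):
    # The same four checks as the excerpt above
    for obj in vars(module).values():
        if (inspect.isclass(obj)
                and issubclass(obj, Spider)
                and obj.__module__ == module.__name__
                and getattr(obj, 'name', None)):
            yield obj


def load_spiders(modules):
    """Map spider name -> class and warn about duplicate names (a sketch)."""
    spiders, found = {}, defaultdict(list)
    for module in modules:
        for cls in iter_spider_classes(module):
            found[cls.name].append((module.__name__, cls.__name__))
            spiders[cls.name] = cls  # a later duplicate overwrites an earlier one
    for name, locations in found.items():
        if len(locations) > 1:
            warnings.warn(f"several spiders named {name!r}: {locations}", UserWarning)
    return spiders


# A throwaway module standing in for myproject/spiders/example.py
demo = types.ModuleType('demo_spiders')


class Base(Spider):      # no name: skipped
    pass


class Quotes(Spider):    # has a name: kept
    name = 'quotes'


class Imported(Spider):  # has a name but is defined elsewhere: skipped
    name = 'imported'


for cls in (Base, Quotes):
    cls.__module__ = demo.__name__  # pretend these were defined in demo_spiders
for cls in (Spider, Base, Quotes, Imported):
    setattr(demo, cls.__name__, cls)

print([c.__name__ for c in iter_spider_classes(demo)])  # ['Quotes']
```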

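Each `obj` yielded here is one of the spider classes we write every day.

So it took all this analysis just to reach the spider classes we normally write.

To be continued...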

Original article

Please credit the source when reposting

http://30daydo.com/article/530


A homemade Youdao dictionary on Linux: cracking Youdao's JS request signing with Python

李魔佛 posted an article • 0 comments • 9055 views • 2019-02-23 20:17

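For crawler beginners, JS decryption is a hurdle that is hard to get past, and it takes constant practice.

I usually develop on Linux, where there is no good translation software, and keeping the NetEase Youdao page open also eats system resources. So I wrote a command-line translation tool.

With a Python crawler, inspect the page's JS signing logic and analyze it step by step, and you can reproduce the final algorithm. Strictly speaking, this is not encryption: the `sign` parameter is an MD5 hash of a fixed client string, the word, a salt and a secret string, as `get_sign` below shows.

Here is the code: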

```python
# -*- coding: utf-8 -*-
# website: http://30daydo.com
# @Time : 2019/2/23 19:34
# @File : youdao.py
# Reproduces the request signing done by Youdao Dictionary's JS
import hashlib
import random
import time

import requests


def md5_(word):
    """Return the hex MD5 digest of a UTF-8 string."""
    s = bytes(word, encoding='utf8')
    m = hashlib.md5()
    m.update(s)
    return m.hexdigest()


def get_sign(word, salt):
    # sign = md5(client + word + salt + secret); the secret string was
    # copied from Youdao's JS at the time of writing (Feb 2019)
    return md5_('fanyideskweb' + word + salt + 'p09@Bn{h02_BIEe]$P^nG')


def youdao(word):
    url = 'http://fanyi.youdao.com/translate_o?smartresult=dict&smartresult=rule'
    headers = {
        'Host': 'fanyi.youdao.com',
        'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64; rv:47.0) Gecko/20100101 Firefox/47.0',
        'Accept': 'application/json, text/javascript, */*; q=0.01',
        'Accept-Language': 'zh-CN,zh;q=0.8,en-US;q=0.5,en;q=0.3',
        'Accept-Encoding': 'gzip, deflate',
        'Content-Type': 'application/x-www-form-urlencoded; charset=UTF-8',
        'X-Requested-With': 'XMLHttpRequest',
        'Referer': 'http://fanyi.youdao.com/',
        # No hard-coded Content-Length: requests computes it from the actual body
        'Cookie': 'YOUDAO_MOBILE_ACCESS_TYPE=1; OUTFOX_SEARCH_USER_ID=1672542763@10.169.0.83; JSESSIONID=aaaWzxpjeDu1gbhopLzKw; ___rl__test__cookies=1550913722828; OUTFOX_SEARCH_USER_ID_NCOO=372126049.6326876',
        'Connection': 'keep-alive',
        'Pragma': 'no-cache',
        'Cache-Control': 'no-cache',
    }
    ts = str(int(time.time() * 1000))       # current time in milliseconds
    salt = ts + str(random.randint(0, 10))  # timestamp plus a random integer 0..10
    bv = md5_("5.0 (Windows)")              # MD5 of the browser version string
    sign = get_sign(word, salt)
    post_data = {
        'i': word,
        'from': 'AUTO', 'to': 'AUTO', 'smartresult': 'dict', 'client': 'fanyideskweb', 'salt': salt,
        'sign': sign, 'ts': ts, 'bv': bv, 'doctype': 'json', 'version': '2.1',
        'keyfrom': 'fanyi.web', 'action': 'FY_BY_REALTIME', 'typoResult': 'false',
    }
    r = requests.post(url=url, headers=headers, data=post_data)
    # Dictionary entries may be missing (e.g. for phrases), so default to an empty list
    for item in r.json().get('smartResult', {}).get('entries', []):
        print(item)


if __name__ == '__main__':
    youdao('student')
```
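One design tweak is worth considering. Pull the signing step out of `youdao()` into a pure function that takes the clock and the random number as arguments, and it can be checked offline without touching the network. The sketch below follows that idea. `build_sign_params` and `md5_hex` are hypothetical names, and the formula is the one in `get_sign` above.

```python
import hashlib
import random
import time

SECRET = 'p09@Bn{h02_BIEe]$P^nG'  # copied from the script above (Feb 2019 value)


def md5_hex(text):
    return hashlib.md5(text.encode('utf-8')).hexdigest()


def build_sign_params(word, now_ms=None, rand=None):
    """Return the ts/salt/sign/bv form fields for `word`.

    now_ms and rand default to the current time and a random integer,
    but can be fixed to make the result reproducible.
    """
    if now_ms is None:
        now_ms = int(time.time() * 1000)
    if rand is None:
        rand = random.randint(0, 10)
    ts = str(now_ms)
    salt = ts + str(rand)
    return {
        'ts': ts,
        'salt': salt,
        'sign': md5_hex('fanyideskweb' + word + salt + SECRET),
        'bv': md5_hex('5.0 (Windows)'),
    }


params = build_sign_params('student', now_ms=1550913722828, rand=7)
print(params['salt'])  # 15509137228287
```

`youdao()` would then merge these fields into `post_data` and keep only the HTTP call.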

Running the script prints each dictionary entry returned for the word.

GitHub:

https://github.com/Rockyzsu/CrawlMan/tree/master/youdao_dictionary

Original article; please credit the source when reposting.

http://30daydo.com/article/416


Fetching announcements from China Securities Network (cnstock.com) with Python

李魔佛 posted an article • 11 comments • 25999 views • 2016-06-30 15:45

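China Securities Network: http://ggjd.cnstock.com/

This site tends to publish announcements a little earlier than Tonghuashun and East Money. It has even happened that cnstock published an announcement in the morning while East Money ran it as a midday announcement, so you could get the news early and position yourself ahead of time.

The program now automatically stores the scraped announcements on this site: http://30daydo.com/news.php

It is updated once a day at 8:30 a.m.

The announcements are saved in the stock/ folder, in log files named after the date and time. The script below runs in a loop and writes a new file every cycle, so new announcements keep being picked up.

By default it saves the first 3 pages of announcements, because fetching too many pages at once gets you blocked by the site for a few minutes. The code already rotates the User-Agent header to avoid being blocked.

Change the number in `getInfo(3)` to fetch a different number of leading pages. The script is written for Python 2 (`urllib2`, `print` statements).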

```python
# -*- coding: utf-8 -*-
# Python 2 script
__author__ = 'rocchen'
# working v1.0
from bs4 import BeautifulSoup
import urllib2, datetime, time, codecs, random
import os, sys


def getInfo(max_index_user=5):
    stock_news_site = "http://ggjd.cnstock.com/gglist/search/ggkx/"
    # Pool of User-Agent strings; one is picked at random for each page request
    my_userAgent = [
        'Mozilla/5.0 (Macintosh; U; Intel Mac OS X 10_6_8; en-us) AppleWebKit/534.50 (KHTML, like Gecko) Version/5.1 Safari/534.50',
        'Mozilla/5.0 (Windows; U; Windows NT 6.1; en-us) AppleWebKit/534.50 (KHTML, like Gecko) Version/5.1 Safari/534.50',
        'Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0)',
        'Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 6.0; Trident/4.0)',
        'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.0)',
        'Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1)',
        'Mozilla/5.0 (Macintosh; Intel Mac OS X 10.6; rv:2.0.1) Gecko/20100101 Firefox/4.0.1',
        'Mozilla/5.0 (Windows NT 6.1; rv:2.0.1) Gecko/20100101 Firefox/4.0.1',
        'Opera/9.80 (Macintosh; Intel Mac OS X 10.6.8; U; en) Presto/2.8.131 Version/11.11',
        'Opera/9.80 (Windows NT 6.1; U; en) Presto/2.8.131 Version/11.11',
        'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Maxthon 2.0)',
        'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_7_0) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.56 Safari/535.11',
        'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; 360SE)',
        'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Trident/4.0; SE 2.X MetaSr 1.0; SE 2.X MetaSr 1.0; .NET CLR 2.0.50727; SE 2.X MetaSr 1.0)']
    index = 0
    max_index = max_index_user
    num = 1
    temp_time = time.strftime("[%Y-%m-%d]-[%H-%M]", time.localtime())
    store_filename = "StockNews-%s.log" % temp_time
    fOpen = codecs.open(store_filename, 'w', 'utf-8')
    while index < max_index:
        user_agent = random.choice(my_userAgent)
        company_news_site = stock_news_site + str(index)  # page number appended to the URL
        headers = {'User-Agent': user_agent, 'Host': "ggjd.cnstock.com", 'DNT': '1',
                   'Accept': 'text/html, application/xhtml+xml, */*', }
        req = urllib2.Request(url=company_news_site, headers=headers)
        resp = None
        raw_content = ""
        try:
            resp = urllib2.urlopen(req, timeout=30)
        except urllib2.HTTPError as e:  # must come before URLError, its base class
            print "HTTP error code %d" % e.code
        except urllib2.URLError as e:
            print "error reason %s " % e.reason
        finally:
            if resp:
                raw_content = resp.read()
                time.sleep(2)  # pause between pages to avoid being blocked
                resp.close()
        soup = BeautifulSoup(raw_content, "html.parser")
        # Each announcement: <span class="time"> followed by a link with title and href
        all_content = soup.find_all("span", "time")
        for i in all_content:
            news_time = i.string
            node = i.next_sibling
            str_temp = "No.%s \n%s\t%s\n---> %s \n\n" % (str(num), news_time, node['title'], node['href'])
            fOpen.write(str_temp)
            num = num + 1
        index = index + 1
    fOpen.close()


def execute_task(n=60):
    period = int(n)
    while True:
        print datetime.datetime.now()
        getInfo(3)  # change 3 to fetch more or fewer pages (getInfo's own default is 5)
        time.sleep(60 * period)  # wait n minutes between cycles


if __name__ == "__main__":
    sub_folder = os.path.join(os.getcwd(), "stock")
    if not os.path.exists(sub_folder):
        os.mkdir(sub_folder)
    os.chdir(sub_folder)
    start_time = time.time()
    # Period in minutes: from the command line, or asked for interactively
    if len(sys.argv) < 2:
        n = raw_input("Input Period : ? mins to download every cycle")
    else:
        n = int(sys.argv[1])
    execute_task(n)
    end_time = time.time()  # never reached: execute_task loops forever
    print "Total time: %s s." % str(round((end_time - start_time), 4))
```
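On Python 3 without BeautifulSoup, the request-building and parsing steps could be sketched with the standard library alone. The parser assumes the same markup the script relies on: a `<span class="time">` followed by a link carrying `title` and `href`. It pairs each time span with the first `<a>` that follows it. The function and class names are hypothetical, and the sample HTML below is made up.

```python
import random
import urllib.request
from html.parser import HTMLParser
from typing import List, Tuple

BASE_URL = "http://ggjd.cnstock.com/gglist/search/ggkx/"


def build_request(page: int, agents: List[str], rng=random) -> urllib.request.Request:
    # Rotate the User-Agent per request, as the Python 2 script does
    return urllib.request.Request(BASE_URL + str(page),
                                  headers={'User-Agent': rng.choice(agents)})


class AnnouncementParser(HTMLParser):
    """Collect (time, title, href) from <span class="time">...</span><a ...>."""

    def __init__(self):
        super().__init__()
        self.items: List[Tuple[str, str, str]] = []
        self._in_time = False      # currently inside <span class="time">
        self._pending_time = None  # time text waiting for its link

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == 'span' and 'time' in (attrs.get('class') or '').split():
            self._in_time = True
            self._pending_time = ''
        elif tag == 'a' and self._pending_time is not None:
            self.items.append((self._pending_time.strip(),
                               attrs.get('title', ''), attrs.get('href', '')))
            self._pending_time = None

    def handle_endtag(self, tag):
        if tag == 'span':
            self._in_time = False

    def handle_data(self, data):
        if self._in_time:
            self._pending_time += data


def parse_announcements(html: str) -> List[Tuple[str, str, str]]:
    parser = AnnouncementParser()
    parser.feed(html)
    parser.close()
    return parser.items


def format_item(num: int, item: Tuple[str, str, str]) -> str:
    # Same layout the script writes to its log file
    news_time, title, href = item
    return "No.%s \n%s\t%s\n---> %s \n\n" % (num, news_time, title, href)


sample = ('<li><span class="time">06-30 09:15</span>'
          '<a title="Sample notice" href="http://example.com/1">Sample notice</a></li>')
print(format_item(1, parse_announcements(sample)[0]))
```

The actual download would then be `urllib.request.urlopen(build_request(page, agents), timeout=30).read()`, decoded before parsing.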

GitHub: https://github.com/Rockyzsu/cnstock


Batch-downloading award-winning photos from Xitek (色影无忌) with Python

李魔佛 posted an article • 6 comments • 17736 views • 2016-06-29 16:41

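Many of the photos on Xitek can be used directly as wallpapers. They are not widely circulated either, so they rarely duplicate the wallpapers or stock images found elsewhere. Best of all, they carry no watermarks. Where else would you find such a generous photo site?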

Without further ado, here is the code (Python 2):

```python
# -*- coding: utf-8 -*-
__author__ = 'rocky chen'
from bs4 import BeautifulSoup
import urllib2, sys, StringIO, gzip, time, re, urllib, os

# Python 2 hack so that implicit str/unicode conversions use UTF-8
reload(sys)
sys.setdefaultencoding('utf-8')


class Xitek():
    def __init__(self):
        self.url = "http://photo.xitek.com/"
        user_agent = "Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0)"
        self.headers = {"User-Agent": user_agent}
        self.last_page = self.__get_last_page()

    def __get_last_page(self):
        # The "last page" link (class "blast") ends with the last page number
        html = self.__getContentAuto(self.url)
        bs = BeautifulSoup(html, "html.parser")
        page = bs.find_all('a', class_="blast")
        last_page = page[0]['href'].split('/')[-1]
        return int(last_page)

    def __getContentAuto(self, url):
        # Fetch a page and un-gzip it if the server sent it compressed
        req = urllib2.Request(url, headers=self.headers)
        resp = urllib2.urlopen(req)
        content = resp.read()
        info = resp.info().get("Content-Encoding")
        if info is None:
            return content
        else:
            t = StringIO.StringIO(content)
            gziper = gzip.GzipFile(fileobj=t)
            html = gziper.read()
            return html

    def __download(self, url):
        # Links to individual photo pages look like href="/photoid/123456"
        p = re.compile(r'href="(/photoid/\d+)"')
        html = self.__getContentAuto(url)
        content = p.findall(html)
        for i in content:
            print i
            # i starts with '/', so drop the trailing slash of the base URL
            photoid = self.__getContentAuto(self.url.rstrip('/') + i)
            bs = BeautifulSoup(photoid, "html.parser")
            # The full-size image is the <img class="mimg"> on the photo page
            final_link = bs.find('img', class_="mimg")['src']
            print final_link
            # Name the file after the page title, replacing characters
            # that are not allowed in file names (backslash included)
            title = bs.title.string.strip()
            filename = re.sub(r'[\\/:*?"<>|]', '-', title)
            filename = filename + '.jpg'
            urllib.urlretrieve(final_link, filename)

    def getPhoto(self):
        start = 0
        # use style/0
        photo_url = "http://photo.xitek.com/style/0/p/"
        for i in range(start, self.last_page + 1):
            url = photo_url + str(i)
            print url
            # time.sleep(1)  # uncomment to go easier on the server
            self.__download(url)


def main():
    # Save the pictures into ./content
    sub_folder = os.path.join(os.getcwd(), "content")
    if not os.path.exists(sub_folder):
        os.mkdir(sub_folder)
    os.chdir(sub_folder)
    obj = Xitek()
    obj.getPhoto()


if __name__ == "__main__":
    main()
```

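After the run, all the images are in the content folder. (Xitek's server does no crawler blocking at all, so don't let the script run too fast: add some sleep calls so you don't put too much load on the server.)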

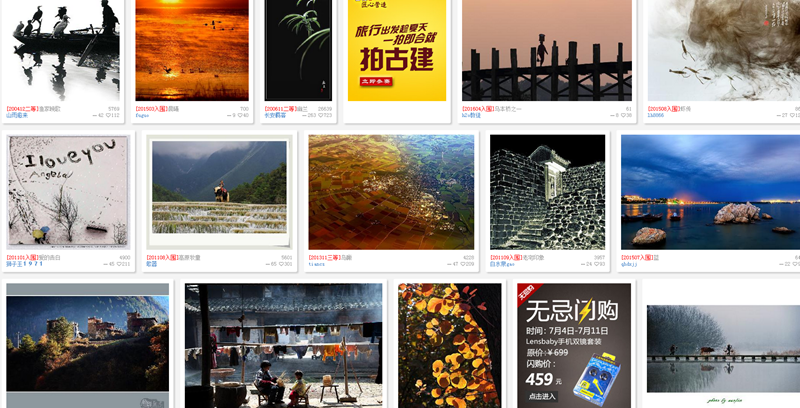

Downloaded images:

github: https://github.com/Rockyzsu/fetchXitek (stars welcome)
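The advice above about not hitting Xitek's server too fast can be made concrete with a small rate limiter. This is a minimal Python 3 sketch, not part of the original script: `Throttle` and its parameters are hypothetical names, and the sleep and clock functions are injectable only so the timing logic can be checked without real waiting.

```python
import random
import time


class Throttle:
    """Keep a minimum, slightly randomized gap between consecutive requests."""

    def __init__(self, min_interval=1.0, jitter=1.0, sleep=time.sleep, clock=time.monotonic):
        self.min_interval = min_interval  # seconds required between two requests
        self.jitter = jitter              # extra random delay, uniform in [0, jitter]
        self._sleep = sleep               # injectable for testing
        self._clock = clock               # injectable for testing
        self._last = None                 # when the previous request was released

    def wait(self):
        """Block until the next request may go out; return the seconds slept."""
        now = self._clock()
        delay = 0.0
        if self._last is not None:
            target = self.min_interval + random.uniform(0, self.jitter)
            delay = max(0.0, target - (now - self._last))
            if delay > 0:
                self._sleep(delay)
        self._last = now + delay
        return delay
```

The idea would be to create one `Throttle` per crawler and call `wait()` before every page fetch and every image download, so all requests share the same limit. This is Python 3 code (the default `clock=time.monotonic` does not exist on Python 2); porting it into the Python 2 script would mean defaulting to `time.time` instead.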


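Scraping the 大误 series from Zhihu Daily (知乎日报), generating e-books and pushing them to Kindle

李魔佛 published an article • 0 comments • 10734 views • 2016-06-12 08:52

I happened to read one article from Zhihu Daily's 大误 series and couldn't stop. 大误 is a series of fictional stories, and the storytelling of the experts on Zhihu beats that of many online writers! It's addictive, but as always the phone screen is too small, reading for hours is tiring, and every read needs a network connection.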

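So I wrote the Python script below to solve this once and for all. It scrapes the 大误 articles and pushes them to your own Kindle account (as written, main() walks every day from January 1 of the current year up to yesterday).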

```python
# -*- coding=utf-8 -*-
__author__ = 'rocky @ www.30daydo.com'

import codecs
import datetime
import os
import re
import sys
import urllib2

from bs4 import BeautifulSoup

# example https://zhhrb.sinaapp.com/index.php?date=20160610
from mail_template import MailAtt

# Python 2 hack so that implicit str/unicode conversions use UTF-8
reload(sys)
sys.setdefaultencoding('utf-8')


def save2file(filename, content):
    # append content to <filename>.txt
    with codecs.open(filename + ".txt", 'a', encoding='utf-8') as f:
        f.write(content)


def getPost(date_time, filter_p):
    url = 'https://zhhrb.sinaapp.com/index.php?date=' + date_time
    user_agent = "Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0)"
    header = {"User-Agent": user_agent}
    req = urllib2.Request(url, headers=header)
    content = urllib2.urlopen(req).read()

    # all story titles of that day
    p = re.compile('<h2 class="question-title">(.*)</h2></br></a>')
    result = re.findall(p, content)

    # position of the first title matching filter_p, or -1 if none matches
    row = -1
    for index, title in enumerate(result):
        if re.findall(filter_p, title):
            row = index
            break
    if row == -1:
        return 0

    # the story links appear in the same order as the titles
    link_p = re.compile('<a href="(.*)" target="_blank" rel="nofollow">')
    link_result = re.findall(link_p, content)[row]
    print link_result

    result_req = urllib2.Request(link_result, headers=header)
    result_resp = urllib2.urlopen(result_req)
    bs = BeautifulSoup(result_resp, "html.parser")
    title = bs.title.string.strip()
    # strip characters that are not allowed in file names
    filename = re.sub(r'[\\/:*?"<>|]', '-', title)
    print filename
    print date_time

    save2file(filename, title)
    save2file(filename, "\n\n\n\n--------------------%s Detail----------------------\n\n" % date_time)
    for i in bs.find_all('div', class_='content'):
        save2file(filename, "\n\n-------------------------answer -------------------------\n\n")
        for j in i.strings:
            save2file(filename, j)

    # mail the txt file to the Kindle address; sender and password come from the command line
    smtp_server = 'smtp.126.com'
    from_mail = sys.argv[1]
    password = sys.argv[2]
    to_mail = 'xxxxx@kindle.cn'
    send_kindle = MailAtt(smtp_server, from_mail, password, to_mail)
    send_kindle.send_txt(filename)


def main():
    sub_folder = os.path.join(os.getcwd(), "content")
    if not os.path.exists(sub_folder):
        os.mkdir(sub_folder)
    os.chdir(sub_folder)

    filter_p = re.compile('大误.*')
    # every day from January 1 of the current year up to, but not including, today
    today = datetime.date.today()
    ori_day = datetime.date(today.year, 1, 1)
    delta = (today - ori_day).days
    print delta
    for i in range(delta):
        day = ori_day + datetime.timedelta(i)
        getPost(day.strftime("%Y%m%d"), filter_p)
    # to fetch a single day instead: getPost('20160611', filter_p)


if __name__ == "__main__":
    main()
```

github: https://github.com/Rockyzsu/zhihu_daily__kindle

With small changes, the code above can also scrape the 瞎扯 or 深夜食堂 series.
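As a rough illustration of that change, the two things the script depends on (walking a range of dates, and picking the story whose title contains a series name) can be pulled out into small functions. This is a Python 3 sketch with hypothetical names (`dates_between`, `first_matching`); like the original, it assumes the title and link lists taken from one day's page are in the same order, so the i-th title belongs to the i-th link.

```python
import datetime


def dates_between(start, end):
    """Yield YYYYMMDD strings for each day from start up to, but not including, end."""
    day = start
    while day < end:
        yield day.strftime("%Y%m%d")
        day += datetime.timedelta(days=1)


def first_matching(titles, links, series):
    """Return the link paired with the first title containing `series`, or None.

    titles[i] and links[i] are assumed to describe the same story.
    """
    for title, link in zip(titles, links):
        if series in title:
            return link
    return None
```

Passing `"瞎扯"` or `"深夜食堂"` as `series` would then select those columns instead of 大误, assuming their titles carry the series name the same way.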

Bonus:

http://pan.baidu.com/s/1kVewz59

All of Zhihu Daily's 大误 articles (as of June 12, 2016).


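Python Xueqiu (雪球) crawler: scraping all posts of a Xueqiu big V and pushing them to Kindle

李魔佛 published an article • 3 comments • 23349 views • 2016-05-29 00:06

To be finished within 30 days. Start date: May 28, 2016.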

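Xueqiu has a lot of trolls, and quite a few big Vs (大V, popular verified accounts) could not stand it: they were flamed until they deleted their posts and left, for example 易碎品 and 小小辛巴.

So Python is an effective and convenient way to scrape the posts of the big Vs you care about and save them locally. It also makes searching and fact-checking easier (some fake big Vs delete posts frequently: today they predict a big rally for tomorrow, and after tomorrow's crash they delete yesterday's prediction, leaving latecomers with the false impression that this big V always calls the market correctly).

The following example scrapes 狂龙's posts (狂龙 has lately kept exposing the market makers' secrets, ha):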

https://xueqiu.com/4742988362

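Update, February 20, 2017:

Scrape the articles in my Xueqiu favorites and generate an e-book from them.

(PS: some articles in my favorites have already been deleted by their authors - -|, that was fast. Probably things they wrote earlier and now fear will be used to prove them wrong.)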
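The favorites scraper that follows does not use an API: it finds the favorites data embedded in each page as a JavaScript object (`var favs = {...};`), cuts it out with a regular expression and parses it with `json`. That step can be isolated as in this Python 3 sketch; `extract_favs` is a hypothetical name, and the page layout it expects is the 2017 one the script was written against, which may well have changed since.

```python
import json
import re

# `var favs = {...};` as embedded in a favorites page. Non-greedy and DOTALL so the
# object may span several lines; it stops at the first "};", so it assumes that
# sequence does not occur inside the object itself.
FAVS_RE = re.compile(r"var favs = (\{.*?\});", re.S)


def extract_favs(html):
    """Return the parsed favs object, or None if the page does not contain it."""
    match = FAVS_RE.search(html)
    if match is None:
        return None
    return json.loads(match.group(1))
```

Each entry of the parsed `list` carries a `target` path, which the script appends to `https://xueqiu.com` to get the article URL.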

```python
# -*-coding=utf-8-*-
# scrape the articles in my Xueqiu favorites
__author__ = 'Rocky'

import cookielib
import json
import re
import time

import requests
from lxml import etree

from toolkit import Toolkit

url = 'https://xueqiu.com/snowman/login'
session = requests.Session()
# keep the login cookies in a file called "cookies" so they survive between runs
session.cookies = cookielib.LWPCookieJar(filename="cookies")
try:
    session.cookies.load(ignore_discard=True)
except IOError:
    print "Cookie can't load"

agent = 'Mozilla/5.0 (Windows NT 5.1; rv:33.0) Gecko/20100101 Firefox/33.0'
headers = {'Host': 'xueqiu.com',
           'Referer': 'https://xueqiu.com/',
           'Origin': 'https://xueqiu.com',
           'User-Agent': agent}

# read the user name and password from data.cfg (the password is not printed)
account = Toolkit.getUserData('data.cfg')
print account['snowball_user']
data = {'username': account['snowball_user'], 'password': account['snowball_password']}
s = session.post(url, data=data, headers=headers)
print s.status_code
session.cookies.save()

# the first favorites page tells us how many pages there are
fav_temp = 'https://xueqiu.com/favs?page=1'
fav_content = session.get(fav_temp, headers=headers).text
p = re.compile(r'"maxPage":(\d+)')
maxPage = int(p.findall(fav_content)[0])
print maxPage

host = 'https://xueqiu.com'
for page in range(1, maxPage + 1):
    fav = 'https://xueqiu.com/favs?page=%d' % page
    fav_content = session.get(fav, headers=headers).text
    # the favorites list is embedded in the page as a JavaScript object: var favs = {...};
    p = re.compile('var favs = {(.*?)};', re.S | re.M)
    result = p.findall(fav_content)[0].strip()
    data = json.loads('{' + result + '}')
    for item in data['list']:
        url = host + item['target']
        print url
        txt_content = session.get(url, headers=headers).text
        tree = etree.HTML(txt_content)
        title = tree.xpath('//title/text()')[0]
        # strip characters that are not allowed in file names
        filename = re.sub(r'[\\/:*?"<>|]', '-', title)
        print filename
        # save the text of the article body
        for node in tree.xpath('//div[@class="detail"]'):
            Toolkit.save2filecn(filename, node.xpath('string(.)'))
        time.sleep(10)  # be gentle with the server
```

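Usage:

1. snowball.py -- scrapes the articles in my Xueqiu favorites

To use it, create a file named data.cfg in the following format:

snowball_user=xxxxx@xx.com

snowball_password=your_password

Then run python snowball.py; it logs in to Xueqiu automatically and generates txt files in the current directory.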
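The script reads `data.cfg` through `Toolkit.getUserData`, a helper from the author's `toolkit` module whose code is not shown here. If you do not have that module, a stand-in for the `key=value` format above might look like this Python 3 sketch; `parse_cfg` is a hypothetical name, and its handling of blank lines and `#` comments is an assumption rather than the original's behavior.

```python
def parse_cfg(text):
    """Parse `key=value` lines (as in data.cfg) into a dict.

    Blank lines and lines starting with '#' are skipped. Only the first '='
    splits a line, so values may themselves contain '='. Surrounding
    whitespace is stripped from keys and values.
    """
    config = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError("missing '=' in line: %r" % raw)
        config[key.strip()] = value.strip()
    return config
```

You would read the file with `open('data.cfg', encoding='utf-8')` and pass its text to `parse_cfg`, then look up `snowball_user` and `snowball_password` in the result.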

github code: https://github.com/Rockyzsu/xueqiu


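Python crawler: simulating a Zhihu login, pushing Zhihu articles to Kindle as e-books, and fetching the questions I follow

低调的哥哥 published an article • 0 comments • 40368 views • 2016-05-12 17:53

I browse Zhihu a lot, and at work I often come across good answers that are too long to finish. So I wrote a Python script that scrapes the answers I want and pushes them to my Kindle, where I can read them at leisure after work. Content I like in general can also be scraped and compiled into e-books to read on the weekend.

#2016-08-19 update:

Added a simulated Zhihu login module: it automatically gets the IDs of the questions I follow, then scrapes all the answers to those questions and pushes them to Kindle.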

```python
# -*-coding=utf-8-*-
__author__ = 'Rocky'

import os
import re
import smtplib
import sys
import time
import urllib2
from email import utils
from email.header import Header
from email.mime.multipart import MIMEMultipart
from email.mime.text import MIMEText

from bs4 import BeautifulSoup

# Python 2 hack so that implicit str/unicode conversions use UTF-8
reload(sys)
sys.setdefaultencoding('utf-8')


class GetContent():
    def __init__(self, question_id):
        # question_id is the id of the question to download,
        # e.g. for https://www.zhihu.com/question/29372574
        # run: python zhihu.py 29372574
        id_link = "/question/" + question_id
        self.getAnswer(id_link)

    def save2file(self, filename, content):
        # append to the e-book (txt) file
        with open(filename + ".txt", 'a') as f:
            f.write(content)

    def getAnswer(self, answerID):
        host = "http://www.zhihu.com"
        url = host + answerID
        print url
        user_agent = "Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0)"
        # send a browser-like header
        header = {"User-Agent": user_agent}
        req = urllib2.Request(url, headers=header)
        try:
            resp = urllib2.urlopen(req)
        except Exception:
            print "Time out. Retry"
            time.sleep(30)
            # try to switch with proxy ip
            resp = urllib2.urlopen(req)

        # we now have the page source; BeautifulSoup makes extracting the content easy
        try:
            bs = BeautifulSoup(resp, "html.parser")
        except Exception:
            print "Beautifulsoup error"
            return None

        title = bs.title
        filename_old = title.string.strip()
        print filename_old
        # file names may not contain certain special characters, so replace them
        filename = re.sub(r'[\\/:*?"<>|]', '-', filename_old)
        self.save2file(filename, title.string)

        # the question's supplementary description, if any
        detail = bs.find("div", class_="zm-editable-content")
        self.save2file(filename, "\n\n\n\n--------------------Detail----------------------\n\n")
        if detail is not None:
            for i in detail.strings:
                self.save2file(filename, unicode(i))

        # save every answer, one after another
        answer = bs.find_all("div", class_="zm-editable-content clearfix")
        for k, each_answer in enumerate(answer):
            self.save2file(filename, "\n\n-------------------------answer %s via -------------------------\n\n" % k)
            for a in each_answer.strings:
                self.save2file(filename, unicode(a))

        smtp_server = 'smtp.126.com'
        from_mail = 'your@126.com'
        password = 'yourpassword'
        to_mail = 'yourname@kindle.cn'
        # uncomment to mail the e-book to your Kindle address so it arrives on the device
        # send_kindle = MailAtt(smtp_server, from_mail, password, to_mail)
        # send_kindle.send_txt(filename)
        print filename


class MailAtt():
    def __init__(self, smtp_server, from_mail, password, to_mail):
        # mailbox settings
        self.server = smtp_server
        self.username = from_mail.split("@")[0]
        self.from_mail = from_mail
        self.password = password
        self.to_mail = to_mail

    def send_txt(self, filename):
        # watch the character encoding of the attachment: debugging this took a while
        # because the received file kept showing up garbled
        self.smtp = smtplib.SMTP()
        self.smtp.connect(self.server)
        self.smtp.login(self.username, self.password)
        self.msg = MIMEMultipart()
        self.msg['to'] = self.to_mail
        self.msg['from'] = self.from_mail
        self.msg['Subject'] = "Convert"
        self.msg['Date'] = utils.formatdate(localtime=1)
        self.filename = filename + ".txt"
        with open(self.filename.decode('utf-8'), 'rb') as f:
            content = f.read()
        self.att = MIMEText(content, 'base64', 'utf-8')
        # replace_header() swaps the value; assigning att['Content-Type'] would add a second header
        self.att.replace_header('Content-Type', 'application/octet-stream')
        self.att["Content-Disposition"] = "attachment;filename=\"%s\"" % Header(self.filename, 'gb2312')
        self.msg.attach(self.att)
        self.smtp.sendmail(self.msg['from'], self.msg['to'], self.msg.as_string())
        self.smtp.quit()


if __name__ == "__main__":
    # directory dedicated to the downloaded e-books
    sub_folder = os.path.join(os.getcwd(), "content")
    if not os.path.exists(sub_folder):
        os.mkdir(sub_folder)
    os.chdir(sub_folder)
    # the first argument is the id of the question to download,
    # e.g. for https://www.zhihu.com/question/29372574
    # run: python zhihu.py 29372574
    question_id = sys.argv[1]
    obj = GetContent(question_id)
    print "Done"
```
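The comment in `send_txt` about garbled attachments comes from building the MIME parts by hand. On Python 3, the standard library's `email.message.EmailMessage` can do that encoding itself, including RFC 2231 encoding of a non-ASCII attachment name. The following is a minimal sketch under that assumption, not the author's code: `build_kindle_mail` is a hypothetical name, it only builds the message, and the `"Convert"` subject simply mirrors the original script.

```python
from email.message import EmailMessage
from pathlib import Path


def build_kindle_mail(txt_path, from_addr, to_addr, subject="Convert"):
    """Build (but do not send) a mail carrying the given .txt file as an attachment."""
    path = Path(txt_path)
    msg = EmailMessage()
    msg["From"] = from_addr
    msg["To"] = to_addr
    msg["Subject"] = subject
    msg.set_content("e-book attached")
    # raw bytes plus an explicit MIME type; the (possibly non-ASCII) filename is
    # encoded by the email package, so no manual gb2312/Header handling is needed
    msg.add_attachment(path.read_bytes(), maintype="application",
                       subtype="octet-stream", filename=path.name)
    return msg
```

Sending could then go through `smtplib`, e.g. `with smtplib.SMTP_SSL('smtp.126.com') as smtp:` followed by `smtp.login(user, password)` and `smtp.send_message(msg)`; whether your provider accepts SSL on the default port 465 with your normal mailbox password is an assumption to check.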

#######################################

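Update 2016.8.19

Added a new feature: simulate a Zhihu login, automatically fetch the answers to the questions I follow, turn them into e-books and send them to Kindle.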
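Two parts of the login script are easy to isolate and check offline: pulling the `_xsrf` token out of a page, and building the paged POST bodies for the followed-questions list (offset 20, step 20, below 500, each carrying `_xsrf`, which the author found the server requires). This Python 3 sketch mirrors those steps; the function names are hypothetical, and the HTML shape is the 2016 one the script targets, so treat it as an illustration rather than something that still works against zhihu.com.

```python
import json
import re

# Hidden form field as it appeared on zhihu.com pages in 2016 (assumed layout):
# <input type="hidden" name="_xsrf" value="...">
XSRF_RE = re.compile(r'<input type="hidden" name="_xsrf" value="(\w+)"')


def extract_xsrf(html):
    """Return the _xsrf token found in the page, or None if there is none."""
    match = XSRF_RE.search(html)
    return match.group(1) if match else None


def paging_payloads(xsrf, first_offset=20, step=20, end=500):
    """Yield one form body per 'next page' POST, for offsets first_offset, +step, ... below end."""
    for offset in range(first_offset, end, step):
        yield {
            "method": "next",
            "params": json.dumps({"offset": offset}),
            "_xsrf": xsrf,  # without it the server answered 403, per the author's note
        }
```

Each dict would be sent as `data=` in `session.post(...)`, together with the `X-Requested-With: XMLHttpRequest` headers the script uses.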

# -*-coding=utf-8-*-

__author__ = 'Rocky'

import requests

import cookielib

import re

import json

import time

import os

from getContent import GetContent

agent='Mozilla/5.0 (Windows NT 5.1; rv:33.0) Gecko/20100101 Firefox/33.0'

headers={'Host':'www.zhihu.com',

'Referer':'https://www.zhihu.com',

'User-Agent':agent}

#全局变量

session=requests.session()

session.cookies=cookielib.LWPCookieJar(filename="cookies")

try:

session.cookies.load(ignore_discard=True)

except:

print "Cookie can't load"

def isLogin():

url='https://www.zhihu.com/settings/profile'

login_code=session.get(url,headers=headers,allow_redirects=False).status_code

print login_code

if login_code == 200:

return True

else:

return False

def get_xsrf():

url='http://www.zhihu.com'

r=session.get(url,headers=headers,allow_redirects=False)

txt=r.text

result=re.findall(r'<input type=\"hidden\" name=\"_xsrf\" value=\"(\w+)\"/>',txt)[0]

return result

def getCaptcha():

#r=1471341285051

r=(time.time()*1000)

url='http://www.zhihu.com/captcha.gif?r='+str(r)+'&type=login'

image=session.get(url,headers=headers)

f=open("photo.jpg",'wb')

f.write(image.content)

f.close()

    def Login():
        xsrf = get_xsrf()
        print xsrf
        print len(xsrf)
        login_url = 'http://www.zhihu.com/login/email'
        data = {
            '_xsrf': xsrf,
            'password': '*',  # your password
            'remember_me': 'true',
            'email': '*'  # your login email
        }
        try:
            content = session.post(login_url, data=data, headers=headers)
            login_code = content.text
            print content.status_code
            # Important: use status_code. A requests Response has no .status
            # attribute, so the line below would raise and jump to the except block.
            # print content.status
            if content.status_code != requests.codes.ok:
                print "Verification code needed!"
                getCaptcha()
                # print "Please input the code of the captcha"
                code = raw_input("Please input the code of the captcha: ")
                data['captcha'] = code
                content = session.post(login_url, data=data, headers=headers)
                print content.status_code
                if content.status_code == requests.codes.ok:
                    print "Login successful"
                    session.cookies.save()
                    # print login_code
            else:
                session.cookies.save()
        except:
            print "Error in login"
            return False
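`Login` posts the credentials once and, only if that request does not come back with 200, downloads a captcha, asks the user to type it, and posts again with an extra `captcha` field. That control flow can be isolated from the network by passing the HTTP call and the prompt in as functions. A sketch under that assumption; `post`, `ask_captcha` and `fake_post` are hypothetical stand-ins, not part of requests or of Zhihu's API:

```python
def login(post, ask_captcha, data):
    """Try to log in; on a non-200 reply, retry once with a captcha.

    `post(data)` returns an HTTP status code and `ask_captcha()` returns the
    text the user read off the captcha image. Both are injected stand-ins.
    """
    if post(data) == 200:
        return True
    # first attempt rejected: copy the form data, add the captcha, retry once
    retry = dict(data, captcha=ask_captcha())
    return post(retry) == 200


def fake_post(data):
    # pretend the server only accepts requests that carry a captcha
    return 200 if "captcha" in data else 403


print(login(fake_post, lambda: "abcd", {"email": "*", "password": "*"}))  # True
```

Copying the form data with `dict(data, ...)` leaves the caller's dictionary untouched, whereas the original adds `captcha` to `data` in place.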

    def focus_question():
        focus_id = []
        url = 'https://www.zhihu.com/question/following'
        content = session.get(url, headers=headers)
        print content
        p = re.compile(r'<a class="question_link" href="/question/(\d+)" target="_blank" data-id')
        id_list = p.findall(content.text)
        pattern = re.compile(r'<input type=\"hidden\" name=\"_xsrf\" value=\"(\w+)\"/>')
        result = re.findall(pattern, content.text)[0]
        print result
        for i in id_list:
            print i
            focus_id.append(i)

        url_next = 'https://www.zhihu.com/node/ProfileFollowedQuestionsV2'
        page = 20
        offset = 20
        end_page = 500
        xsrf = re.findall(r'<input type=\"hidden\" name=\"_xsrf\" value=\"(\w+)\"', content.text)[0]
        while offset < end_page:
            # para='{"offset":20}'
            # print para
            print "page: %d" % offset
            params = {"offset": offset}
            params_json = json.dumps(params)
            data = {
                'method': 'next',
                'params': params_json,
                '_xsrf': xsrf
            }
            # Note: the POST data above needs an _xsrf field, otherwise the server
            # returns a 403 error. _xsrf never showed up in the captured traffic,
            # so I worked this out by trial and error.
            offset = offset + page
            headers_l = {
                'Host': 'www.zhihu.com',
                'Referer': 'https://www.zhihu.com/question/following',
                'User-Agent': agent,
                'Origin': 'https://www.zhihu.com',
                'X-Requested-With': 'XMLHttpRequest'
            }
            try:
                s = session.post(url_next, data=data, headers=headers_l)
                # print s.status_code
                # print s.text
                msgs = json.loads(s.text)
                msg = msgs['msg']
                for i in msg:
                    id_sub = re.findall(p, i)
                    for j in id_sub:
                        print j
                        id_list.append(j)
            except:
                print "Getting Error "
        return id_list
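After reading the question links on the HTML page, `focus_question` pages through the rest by POSTing to `ProfileFollowedQuestionsV2` with `method=next`, a JSON-encoded `{"offset": n}`, and the `_xsrf` token (without it the server answers 403). A sketch of just the payload generation, using the same start offset `20`, step `20` and cap `500` as the original; the token value in the usage line is made up:

```python
import json


def followed_question_payloads(xsrf, start=20, step=20, end=500):
    """Yield the form data for each 'next page' POST, offset by offset."""
    offset = start
    while offset < end:
        yield {
            "method": "next",
            "params": json.dumps({"offset": offset}),
            "_xsrf": xsrf,  # required, otherwise the endpoint returns 403
        }
        offset += step


payloads = list(followed_question_payloads("3f9a2b7c"))
print(len(payloads), payloads[0]["params"], payloads[-1]["params"])
# 24 {"offset": 20} {"offset": 480}
```

Keeping payload construction in a generator separates it from the HTTP loop, so the paging arithmetic can be checked without a network connection.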

    def main():
        if isLogin():
            print "Already logged in"
        else:
            print "Need to login"
            Login()
        list_id = focus_question()
        for i in list_id:
            print i
            obj = GetContent(i)
        # getCaptcha()

    if __name__ == '__main__':
        # directory dedicated to storing the downloaded e-books
        sub_folder = os.path.join(os.getcwd(), "content")
        if not os.path.exists(sub_folder):
            os.mkdir(sub_folder)
        os.chdir(sub_folder)
        main()
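Each paged response is JSON whose `msg` field is a list of HTML snippets, and `focus_question` runs the same `question_link` regex over every snippet before `main` downloads each id. A sketch of that parsing step, using the same regex. It also drops ids that were already collected while keeping their order; that deduplication is an addition of this sketch, not something the original does:

```python
import json
import re

QUESTION_LINK = re.compile(
    r'<a class="question_link" href="/question/(\d+)" target="_blank" data-id'
)


def ids_from_page(body, seen=None):
    """Parse one JSON page ({"msg": [html, ...]}) and return ids not seen before."""
    seen = set() if seen is None else seen
    found = []
    for snippet in json.loads(body)["msg"]:
        for qid in QUESTION_LINK.findall(snippet):
            if qid not in seen:  # skip ids already collected
                seen.add(qid)
                found.append(qid)
    return found


snippet = '<a class="question_link" href="/question/29372574" target="_blank" data-id="1">'
print(ids_from_page(json.dumps({"msg": [snippet, snippet]})))  # ['29372574']
```

Passing the same `seen` set to every call carries the deduplication across pages.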

Full code here:

github: https://github.com/Rockyzsu/zhihuToKindle


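Update, 2016-08-19:

Added a module that simulates logging in to Zhihu. It automatically collects the ids of the questions I follow, scrapes all of their answers, and pushes them to Kindle.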

    # -*-coding=utf-8-*-
    __author__ = 'Rocky'

    from email.mime.text import MIMEText
    from email.mime.multipart import MIMEMultipart
    import smtplib
    from email import Encoders, Utils
    import urllib2
    import time
    import re
    import sys
    import os
    from bs4 import BeautifulSoup
    from email.Header import Header

    reload(sys)
    sys.setdefaultencoding('utf-8')

    class GetContent():
        def __init__(self, id):
            # The argument is the id of the question you want to download.
            # For example, if the question URL is https://www.zhihu.com/question/29372574,
            # run: python zhihu.py 29372574
            id_link = "/question/" + id
            self.getAnswer(id_link)

        def save2file(self, filename, content):
            # save to the e-book (.txt) file, appending
            filename = filename + ".txt"
            f = open(filename, 'a')
            f.write(content)
            f.close()

        def getAnswer(self, answerID):
            host = "http://www.zhihu.com"
            url = host + answerID
            print url
            user_agent = "Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0)"
            # build a header so the request looks like it comes from a browser
            header = {"User-Agent": user_agent}
            req = urllib2.Request(url, headers=header)
            try:
                resp = urllib2.urlopen(req)
            except:
                print "Time out. Retry"
                time.sleep(30)
                # could switch to a proxy IP here
                resp = urllib2.urlopen(req)
            # At this point we have the page source; next, extract the content we want.
            # BeautifulSoup makes this convenient.
            try:
                bs = BeautifulSoup(resp)
            except:
                print "Beautifulsoup error"
                return None
            title = bs.title  # the page title
            filename_old = title.string.strip()
            print filename_old
            # Filenames used to save the content cannot contain characters such as
            # \ / : * ? " < > |, so replace them with '-' using a regex
            filename = re.sub(r'[\\/:*?"<>|]', '-', filename_old)
            self.save2file(filename, title.string)
            detail = bs.find("div", class_="zm-editable-content")
            self.save2file(filename, "\n\n\n\n--------------------Detail----------------------\n\n")
            # get the question's supplementary details
            if detail is not None:
                for i in detail.strings:
                    self.save2file(filename, unicode(i))