
7.watch: a junk training institution

Chitchat • 绫波丽 posted an article • 0 comments • 2559 views • 2020-08-15 11:13

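A few small pitfalls when configuring Kibana in Docker to connect to ElasticSearch

Database • 李魔佛 posted an article • 0 comments • 12246 views • 2020-08-09 01:49

```yaml
elasticsearch.username: 'elastic'
elasticsearch.password: 'xxxxxx'  # the password you set earlier when configuring ES
```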

But with the configuration above, Kibana would not start properly under Docker. The logs, viewed with `docker logs <container ID>`, showed:

```
log [17:39:09.057] [warning][data][elasticsearch] No living connections
log [17:39:09.058] [warning][licensing][plugins] License information could not be obtained from Elasticsearch due to Error: No Living connections error
log [17:39:09.635] [warning][admin][elasticsearch] Unable to revive connection: http://127.0.0.1:9200/
log [17:39:09.636] [warning][admin][elasticsearch] No living connections
log [17:39:12.137] [warning][admin][elasticsearch] Unable to revive connection: http://127.0.0.1:9200/
log [17:39:12.138] [warning][admin][elasticsearch] No living connections
log [17:39:14.640] [warning][admin][elasticsearch] Unable to revive connection: http://127.0.0.1:9200/
log [17:39:14.640] [warning][admin][elasticsearch] No living connections
log [17:39:17.143] [warning][admin][elasticsearch] Unable to revive connection: http://127.0.0.1:9200/
log [17:39:17.143] [warning][admin][elasticsearch] No living connections
log [17:39:19.645] [warning][admin][elasticsearch] Unable to revive connection: http://127.0.0.1:9200/
```

Accessing 127.0.0.1:9200 with curl worked fine. Then I remembered that Docker apparently had no bridge network configured, so the two containers probably could not reach each other. Inside the Kibana container, 127.0.0.1 refers to that container's own loopback interface, not to the host or to the Elasticsearch container. So I changed the Elasticsearch host in kibana.yml to the host machine's real IP (its internal 172.x address), and the problem was solved.
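To see which address is actually reachable, a plain TCP probe helps. The sketch below is a minimal, hypothetical helper (`port_open` is not from the post), and it assumes Python is available wherever you run it, for example on the host or in a throwaway container on the same network; the official Kibana image may not ship with Python.

```python
import socket


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection resolves the host and tries each address in turn
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts, DNS errors and timeouts
        return False
```

Comparing `port_open("127.0.0.1", 9200)` with `port_open("<host's internal IP>", 9200)` from the same place shows quickly whether the loopback address is the problem.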


A Tencent Cloud server cannot send mail through Alibaba Cloud's SMTP service

Chitchat • 李魔佛 posted an article • 0 comments • 4192 views • 2020-08-02 23:39

Using Python's smtplib library:

```python
import smtplib

obj = smtplib.SMTP()
obj.connect('smtp.qiye.aliyun.com', 25)
```

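When run, it hangs at the last step with no further response.

I asked both Alibaba Cloud and Tencent Cloud support. The problem is on the Tencent Cloud side: port 25 is blocked for outbound mail by default, and you have to apply manually to have it unblocked.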
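The apparent freeze most likely comes from connecting without a timeout, so the socket waits until the operating system gives up. A minimal sketch, assuming you only want to test reachability (`smtp_reachable` is a hypothetical name), passes `timeout` so that a blocked port fails fast instead:

```python
import smtplib


def smtp_reachable(host: str, port: int = 25, timeout: float = 10.0) -> bool:
    """Try to open an SMTP session; return False instead of hanging indefinitely."""
    try:
        # Passing host/port to the constructor connects immediately;
        # `timeout` bounds every blocking socket operation.
        with smtplib.SMTP(host, port, timeout=timeout) as server:
            server.noop()  # simple round trip to confirm the server answers
        return True
    except (OSError, smtplib.SMTPException):
        # OSError covers refused connections and socket timeouts
        return False
```

If the mail provider also offers SMTP over SSL on port 465 (check its documentation; the post does not confirm this), `smtplib.SMTP_SSL(host, 465, timeout=...)` is the matching class to test with.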


One command to make Python rule them all

Chitchat • 李魔佛 posted an article • 0 comments • 2637 views • 2020-07-12 13:52

`$ alias python4=gcc python5=rustc python6=javac python7=node`

There you go, haha.

Have a rest, then enjoy the next

Chitchat • 李魔佛 posted an article • 0 comments • 2273 views • 2020-06-29 23:56

2020-06-29

Simulated login to NetEase 163 fails

Python crawler • xiaoai replied to a question • 2 followers • 2 replies • 6242 views • 2020-06-28 14:25

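Recognizing and cracking the Shenzhen Housing Provident Fund CAPTCHA

Python crawler • 李魔佛 posted an article • 0 comments • 3322 views • 2020-06-26 14:34

http://gjj.sz.gov.cn/fzgn/zfcq/index.html

It is a fairly ordinary CAPTCHA. After segmenting the characters with OpenCV, a Keras model built from fully connected (dense) layers needed only 20 samples per character to reach 99% accuracy.

If you need the model or the code, send me a private message.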


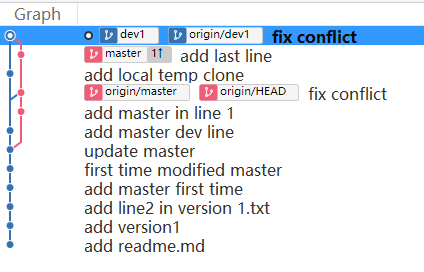

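Viewing a graphical branch history and abbreviated commit IDs from the git command line

Linux • 李魔佛 posted an article • 0 comments • 6807 views • 2020-06-21 16:56

You can use `git log` with a few options:

```
git log --graph --pretty=oneline --abbrev-commit
```

The result looks like this:

The same history in SourceTree actually looks like this: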

(1412, 'Table definition has changed, please retry transaction')

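Database • 李魔佛 posted an article • 0 comments • 6000 views • 2020-06-13 23:20

(1412, 'Table definition has changed, please retry transaction')

One cause is that several threads operate on the database through the same cursor, and the database that the current cursor points to gets switched to another one.

For example, a cursor on database A is repeatedly running a query while another thread switches that cursor over to database B.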
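A common way to stop one thread from switching another thread's cursor is to give every thread its own connection. The sketch below illustrates that idea under this assumption; it is not the author's fix. `PerThreadConnection` is a hypothetical name, and the factory you pass in (for example one that calls `pymysql.connect(...)`) is up to you. PyMySQL itself declares `threadsafety = 1`, meaning threads may share the module but not connections.

```python
import threading


class PerThreadConnection:
    """Hand each thread its own DB connection instead of sharing one cursor.

    `factory` is any zero-argument callable that opens a new connection.
    """

    def __init__(self, factory):
        self._factory = factory
        self._local = threading.local()  # attributes stored here are private to each thread

    def get(self):
        conn = getattr(self._local, "conn", None)
        if conn is None:
            # First call in this thread: open a dedicated connection
            conn = self._factory()
            self._local.conn = conn
        return conn
```

For example, `db_a = PerThreadConnection(lambda: pymysql.connect(host="localhost", user="root", password="xxxxxx", database="A"))`; a thread that needs database B would use a separate instance for B rather than switching a shared connection.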


PyQt5 custom widget

python • 李魔佛 posted an article • 0 comments • 3684 views • 2020-06-13 23:14

Burning widget

You may have seen a widget like this in Nero, K3B, or other CD/DVD burning software.

```python
# -*- coding: utf-8 -*-

"""
PyQt5 tutorial

In this example, we create a custom widget.
"""

import sys

from PyQt5.QtWidgets import (QWidget, QSlider, QApplication,
                             QHBoxLayout, QVBoxLayout)
from PyQt5.QtCore import QObject, Qt, pyqtSignal
from PyQt5.QtGui import QPainter, QFont, QColor, QPen


class Communicate(QObject):
    # Custom signal that carries the slider value to the widget
    updateBW = pyqtSignal(int)


class BurningWidget(QWidget):

    def __init__(self):
        super().__init__()
        self.initUI()

    def initUI(self):
        self.setMinimumSize(1, 30)
        self.value = 75
        # Labels for the nine tick lines
        self.num = [75, 150, 225, 300, 375, 450, 525, 600, 675]

    def setValue(self, value):
        self.value = value

    def paintEvent(self, e):
        qp = QPainter()
        qp.begin(self)
        self.drawWidget(qp)
        qp.end()

    def drawWidget(self, qp):
        font = QFont('Serif', 7, QFont.Light)
        qp.setFont(font)

        size = self.size()
        w = size.width()
        h = size.height()

        step = int(round(w / 10.0))
        till = int(((w / 750.0) * self.value))
        full = int(((w / 750.0) * 700))

        if self.value >= 700:
            # Yellow up to the 700 mark, red for the overburn part
            qp.setPen(QColor(255, 255, 255))
            qp.setBrush(QColor(255, 255, 184))
            qp.drawRect(0, 0, full, h)
            qp.setPen(QColor(255, 175, 175))
            qp.setBrush(QColor(255, 175, 175))
            qp.drawRect(full, 0, till - full, h)
        else:
            qp.setPen(QColor(255, 255, 255))
            qp.setBrush(QColor(255, 255, 184))
            qp.drawRect(0, 0, till, h)

        # Dark border around the whole widget
        pen = QPen(QColor(20, 20, 20), 1, Qt.SolidLine)
        qp.setPen(pen)
        qp.setBrush(Qt.NoBrush)
        qp.drawRect(0, 0, w - 1, h - 1)

        if step == 0:
            return  # too narrow for tick marks; range() rejects a zero step

        j = 0
        for i in range(step, 10 * step, step):
            qp.drawLine(i, 0, i, 5)
            metrics = qp.fontMetrics()
            fw = metrics.width(str(self.num[j]))
            # Integer division keeps the coordinates ints, as the
            # drawText(int, int, str) overload needs; recent PyQt5/Python
            # combinations reject floats here
            qp.drawText(i - fw // 2, h // 2, str(self.num[j]))
            j = j + 1


class Example(QWidget):

    def __init__(self):
        super().__init__()
        self.initUI()

    def initUI(self):
        sld = QSlider(Qt.Horizontal, self)
        sld.setFocusPolicy(Qt.NoFocus)
        sld.setRange(1, 750)
        sld.setValue(75)
        sld.setGeometry(30, 40, 150, 30)

        self.c = Communicate()
        self.wid = BurningWidget()
        self.c.updateBW[int].connect(self.wid.setValue)

        sld.valueChanged[int].connect(self.changeValue)

        hbox = QHBoxLayout()
        hbox.addWidget(self.wid)
        vbox = QVBoxLayout()
        vbox.addStretch(1)
        vbox.addLayout(hbox)
        self.setLayout(vbox)

        self.setGeometry(300, 300, 390, 210)
        self.setWindowTitle('Burning widget')
        self.show()

    def changeValue(self, value):
        self.c.updateBW.emit(value)
        self.wid.repaint()


if __name__ == '__main__':
    app = QApplication(sys.argv)
    ex = Example()
    sys.exit(app.exec_())
```

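In this example we use a slider and a custom widget, and the slider controls the custom widget. The widget shows the total capacity of a medium and the space remaining. Its minimum value is 1 and its maximum is 750. When the value reaches 700, the colour turns red, which usually indicates overburning (writing more data to a disc than its officially rated capacity).

The BurningWidget is placed at the bottom of the window using a QHBoxLayout and a QVBoxLayout.

```python
class BurningWidget(QWidget):

    def __init__(self):
        super().__init__()
```

The burning widget is based on QWidget.

```python
self.setMinimumSize(1, 30)
```

We change the minimum size (height) of the widget, because the default is a bit too small.

```python
font = QFont('Serif', 7, QFont.Light)
qp.setFont(font)
```

We use a font smaller than the default.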

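```python
size = self.size()
w = size.width()
h = size.height()

step = int(round(w / 10.0))
till = int(((w / 750.0) * self.value))
full = int(((w / 750.0) * 700))
```

The widget is drawn dynamically: the bigger the window, the bigger the widget, and vice versa. That is why we read the widget's current size with self.size() instead of drawing at fixed coordinates. The step parameter is the spacing between tick lines, one tenth of the width. The till parameter is the total width to fill; it is computed from the slider value as a proportion of the whole area. The full parameter is the x-coordinate where the red area starts. Note the floating-point arithmetic, which is used to get better precision while drawing.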
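The size arithmetic can be checked without opening a window. The sketch below is a pure-Python mirror of the three formulas above plus the tick positions from the drawing loop; `burning_geometry` and its parameter names are hypothetical and not part of PyQt5 or the tutorial.

```python
def burning_geometry(w, value, max_value=750, overburn_at=700, ticks=10):
    """Mirror the size arithmetic of BurningWidget.drawWidget (no Qt needed)."""
    scale = w / float(max_value)          # pixels per capacity unit
    step = int(round(w / float(ticks)))   # spacing between tick lines
    till = int(scale * value)             # width of the filled area
    full = int(scale * overburn_at)       # x where the red (overburn) part starts
    tick_xs = list(range(step, ticks * step, step)) if step > 0 else []
    return step, till, full, tick_xs, value >= overburn_at


step, till, full, tick_xs, red = burning_geometry(750, 75)
print(step, till, full, red)  # 75 75 700 False
```

At a width of 750 pixels the tick positions are exactly 75 through 675, which is why `self.num` holds those labels; at other widths the positions scale while each tick still corresponds to 75 units of capacity.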

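The actual drawing takes three steps: first the yellow (or yellow and red) rectangle, then the tick lines, and finally the tick labels.

```python
metrics = qp.fontMetrics()
fw = metrics.width(str(self.num[j]))
qp.drawText(i - fw // 2, h // 2, str(self.num[j]))
```

We use font metrics to draw the text. We need to know the width of the text so that we can center it on the vertical tick line.

```python
def changeValue(self, value):
    self.c.updateBW.emit(value)
    self.wid.repaint()
```

When the slider moves, the changeValue() method is called. Inside it we emit the custom updateBW signal with the current slider value as its argument. That value is used to compute the capacity shown by the Burning widget, and the widget is then repainted.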


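Installing pyminizip on Windows

python • 李魔佛 posted an article • 0 comments • 4204 views • 2020-05-31 19:06

pip install pyminizip

The machine needs the Visual C++ build tools installed; alternatively, copy the .pyd file built on another machine into the program's current directory.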


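Win10: VBS fails to run a batch file hidden

Chitchat • 李魔佛 posted an article • 0 comments • 3247 views • 2020-05-10 22:45

```vbscript
Set ws = CreateObject("Wscript.Shell")
ws.run "cmd /c D:\新建\diff.bat",vbhide
WScript.sleep 1000
```

It never ran, and I couldn't figure out why.

After trying a different path, I found that the path must not contain Chinese characters....

Who knew Windows had this Chinese-path problem too.

On Win10, paths in a VBS script must not contain Chinese characters.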
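For comparison, the same hidden launch can be done with Python's subprocess module, which is a different technique from the VBS in the post. This is a sketch, not a tested replacement: the helper names are hypothetical, `subprocess.CREATE_NO_WINDOW` exists only on Windows with Python 3.7 or later, and although Python 3 hands the command line to Windows as Unicode (so a path like `D:\新建\diff.bat` should be accepted), that has not been verified against the setup in the post.

```python
import subprocess


def build_command(bat_path):
    """Command line for running a batch file through cmd.exe, as the VBS version does."""
    return ["cmd", "/c", bat_path]


def hidden_flags():
    # CREATE_NO_WINDOW only exists on Windows; fall back to 0 (no flags) elsewhere
    return getattr(subprocess, "CREATE_NO_WINDOW", 0)


def run_batch_hidden(bat_path):
    """Run a .bat file without showing a console window (Windows only)."""
    return subprocess.run(build_command(bat_path), creationflags=hidden_flags())
```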


Redis Desktop Manager Windows client

Database • 李魔佛 posted an article • 0 comments • 3036 views • 2020-05-07 20:37

No wonder it's so bad.....

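Can any expert scrape registration information or visitor information?

Uncategorized • 李魔佛 replied to a question • 2 followers • 1 reply • 3316 views • 2020-04-29 12:42

Why is the response.body I get through the Splash middleware different from the HTML I see when visiting the page in Splash?

python • 李魔佛 replied to a question • 1 follower • 1 reply • 4043 views • 2020-04-29 00:19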

PyQt5: which module is QRect in?

python • 李魔佛 posted an article • 0 comments • 2915 views • 2020-04-24 10:45

```python
from PyQt5.QtCore import Qt, QRect
```

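Sharing a movie site: insiji.com

Chitchat • 李魔佛 posted an article • 0 comments • 2801 views • 2020-04-16 22:48

The films seem fairly recent and the picture is quite sharp. There aren't many decent sites like this left.

Online playback is smooth, but you can't download without logging in. So I had to capture the traffic, download the .ts files, and merge them.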

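Question: I can't get any data when crawling with Scrapy and Redis (bounty available). Could an expert please take a look? Many thanks.

Python crawler • 李魔佛 replied to a question • 2 followers • 1 reply • 9400 views • 2020-04-16 22:16

I want to automate trading with a program. Which library do you use to submit orders (for example Tonghuashun, Ping An Securities, etc.)? Thanks.

Stocks • 李魔佛 replied to a question • 1 follower • 2 replies • 5573 views • 2021-07-16 21:44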

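2020-03-27 Sticking with new things

30-day new attempts • 李魔佛 posted an article • 0 comments • 3025 views • 2020-03-27 00:10

Habits to build over the next 30 days:

1. Go to bed early and get up early.
2. Keep up a steady ratio of output (whatever goes in must also come out).
3. Exercise.
4. Focus on one thing first; once it has become a habit, move on to the next.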

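Installing and configuring Logstash 6.5 on Windows: the tutorials online are for Linux, and there is a pitfall

Database • 李魔佛 posted an article • 2 comments • 4341 views • 2020-02-28 10:05

The Logstash installation tutorials you find on Baidu all look the same:

Start the service to test whether the installation succeeded:

```
cd bin
./logstash -e 'input { stdin { } } output { stdout {} }'
```

That is how it runs on Linux.

On Windows, replace logstash with logstash.bat and run it. It fails with:

```
ERROR: Unknown command '{'
See: 'bin/logstash --help'
[ERROR] 2020-02-28 10:04:29.307 [main] Logstash - java.lang.IllegalStateException: Logstash stopped processing because of an error: (SystemExit) exit
```

Later I searched some foreign sites and found that the problem is the single quotes: change them to double quotes and it works. The Windows command prompt (cmd.exe) does not treat single quotes as quoting characters, so the pipeline definition is split at every space and Logstash takes the stray `{` for a command:

`logstash.bat -e "input { stdin { } } output { stdout {} }"`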
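Python's subprocess module follows the same double-quote rule when it builds a Windows command line. The sketch below uses `subprocess.list2cmdline`, a helper that exists in the module but is not part of its documented API, to show the quoting Windows expects; the `bin\logstash.bat` path is assumed from the tutorial's `cd bin` step.

```python
import subprocess

# The Logstash pipeline from the tutorial, as one single argument
PIPELINE = "input { stdin { } } output { stdout {} }"

# list2cmdline applies the MS C runtime quoting rules: arguments containing
# spaces are wrapped in double quotes; single quotes get no special treatment.
cmdline = subprocess.list2cmdline(["bin\\logstash.bat", "-e", PIPELINE])
print(cmdline)  # bin\logstash.bat -e "input { stdin { } } output { stdout {} }"
```

On Windows, passing the list straight to `subprocess.run([...])` applies this quoting automatically, so the pipeline reaches Logstash as one argument.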


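Freeloading on "pandemic charity": black-market operators maliciously scraped more than ten thousand e-books from major publishers

Python crawler • Magiccc posted an article • 0 comments • 3516 views • 2020-02-26 13:17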

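My personal Knowledge Planet (知识星球)

Quant trading-Ptrade-QMT • 李魔佛 posted an article • 0 comments • 4272 views • 2020-02-23 11:10

Scan with WeChat to join my Knowledge Planet.

The first article on the Planet:

Using Python to fetch discount/premium data for every LOF fund on the market, for arbitrage

There are 301 LOF funds on the market in total (the circled figure in the lower-right corner of the image above is the total number of funds), while Jisilu (集思录) lists only about 120. So some LOF funds trading at a large premium (above 10%) never appear on the Jisilu site, and investors focused on arbitrage miss many potential opportunities.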
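The premium (or discount) itself is simple arithmetic: (market price - NAV) / NAV. Below is a minimal sketch with hypothetical field names (`code`, `price`, `nav`); the post's own scraping code is not reproduced here, so where the prices and NAVs come from is left open.

```python
def premium_rate(price, nav):
    """Premium (+) or discount (-) of a fund's market price over its NAV, in percent."""
    if nav <= 0:
        raise ValueError("NAV must be positive")
    return (price - nav) / nav * 100.0


def high_premium(funds, threshold=10.0):
    """Keep funds whose premium exceeds `threshold` percent.

    `funds` is a list of dicts with hypothetical keys 'code', 'price' and 'nav'.
    """
    return [f for f in funds if premium_rate(f["price"], f["nav"]) > threshold]
```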


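After I replied to that thread, lots of people messaged me privately, asking whether I could provide this data or teach them how to get it. There were too many of them, and I didn't have the energy to answer everyone; people's skill levels and backgrounds differ, so answering one by one is exhausting. After answering a few friends, I politely declined the rest.

Then, in several investment groups, people were even talking about this data and asking how to get the complete dataset with real-time updates. Since my WeChat group nickname is the same as my Jisilu name, quite a few people @-mentioned me, and I replied briefly: the data is scraped with Python and stored in MySQL and MongoDB. The code is short, under 100 lines.

The full code for the implementation will be on the Planet.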


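Hoping to find someone to compete with

Chitchat • 李魔佛 posted an article • 5 comments • 2938 views • 2020-02-16 23:37

There doesn't seem to be anyone like that around me right now; I hope to meet such a friend on this site.

If you're interested, send me a private message. Serious inquiries only.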

JSON returned by a requests call comes back as garbled bytes

Python crawler • 李魔佛 replied to a question • 2 followers • 1 reply • 6062 views • 2020-02-16 23:35