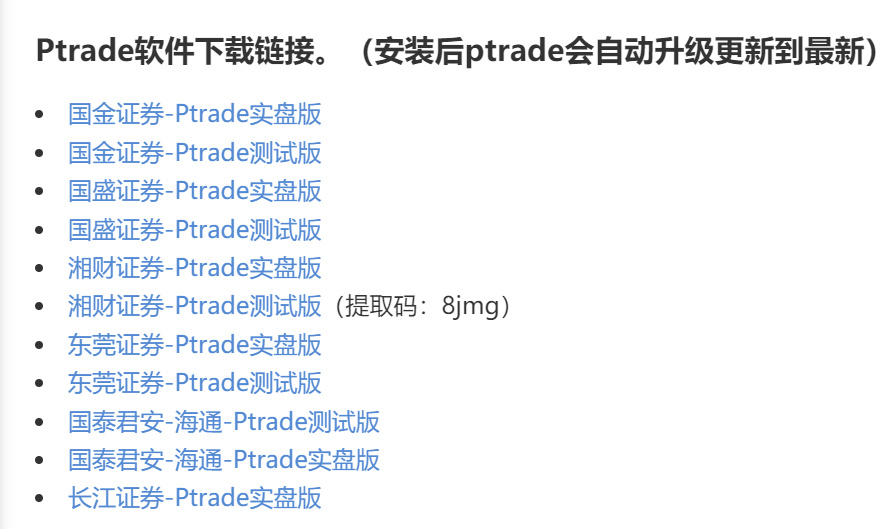

山西证券开通ptrade门槛 万0.854免5 | 山西证券ptrade下载地址

Ptrade • 李魔佛 发表了文章 • 0 个评论 • 183 次浏览 • 2025-12-27 19:00

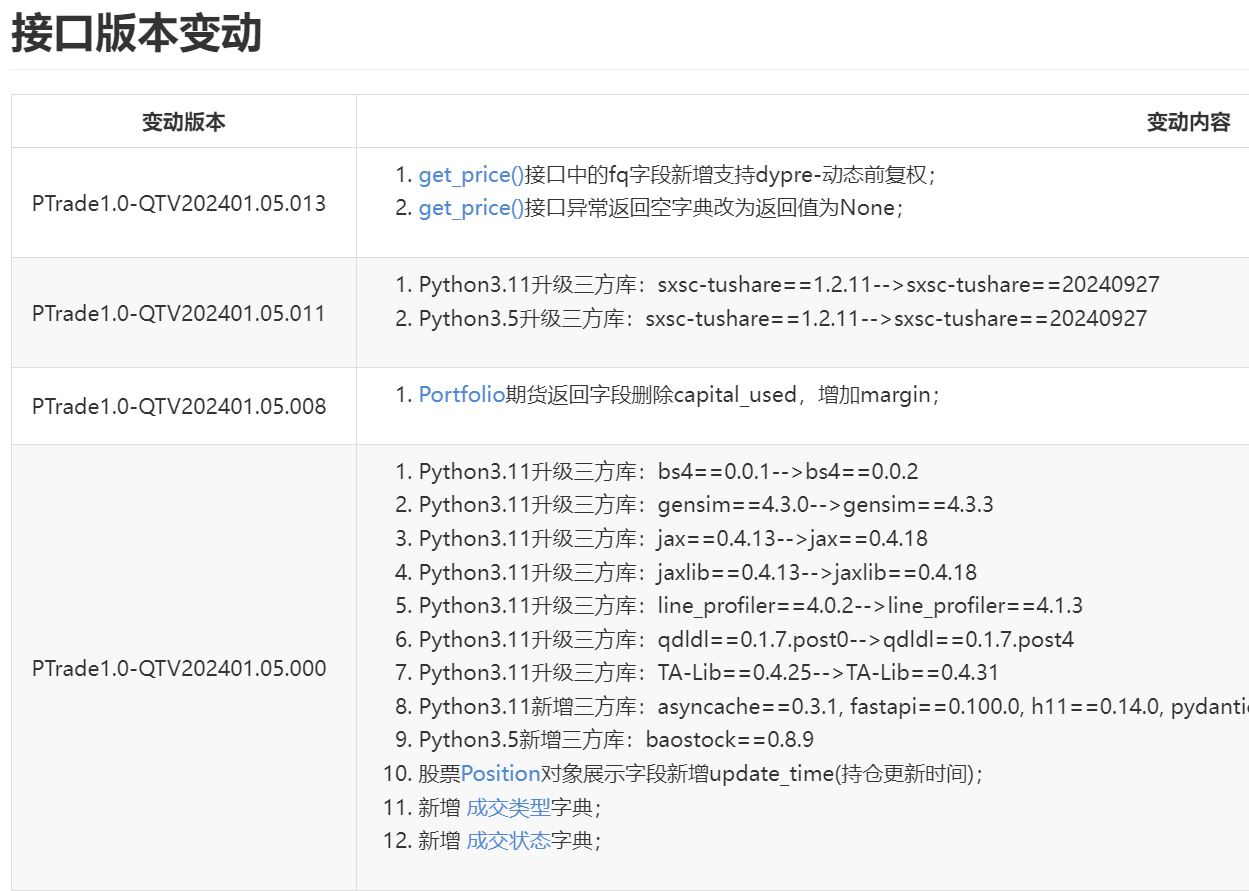

主要python3.11移除了pandas里的panel格式。导致之前ptrade获取到的3维数据不再兼容当前的最新版本。

山西证券ptrade下载地址:

山西证券ptrade实盘下载链接

山西证券ptrade仿真模拟下载链接

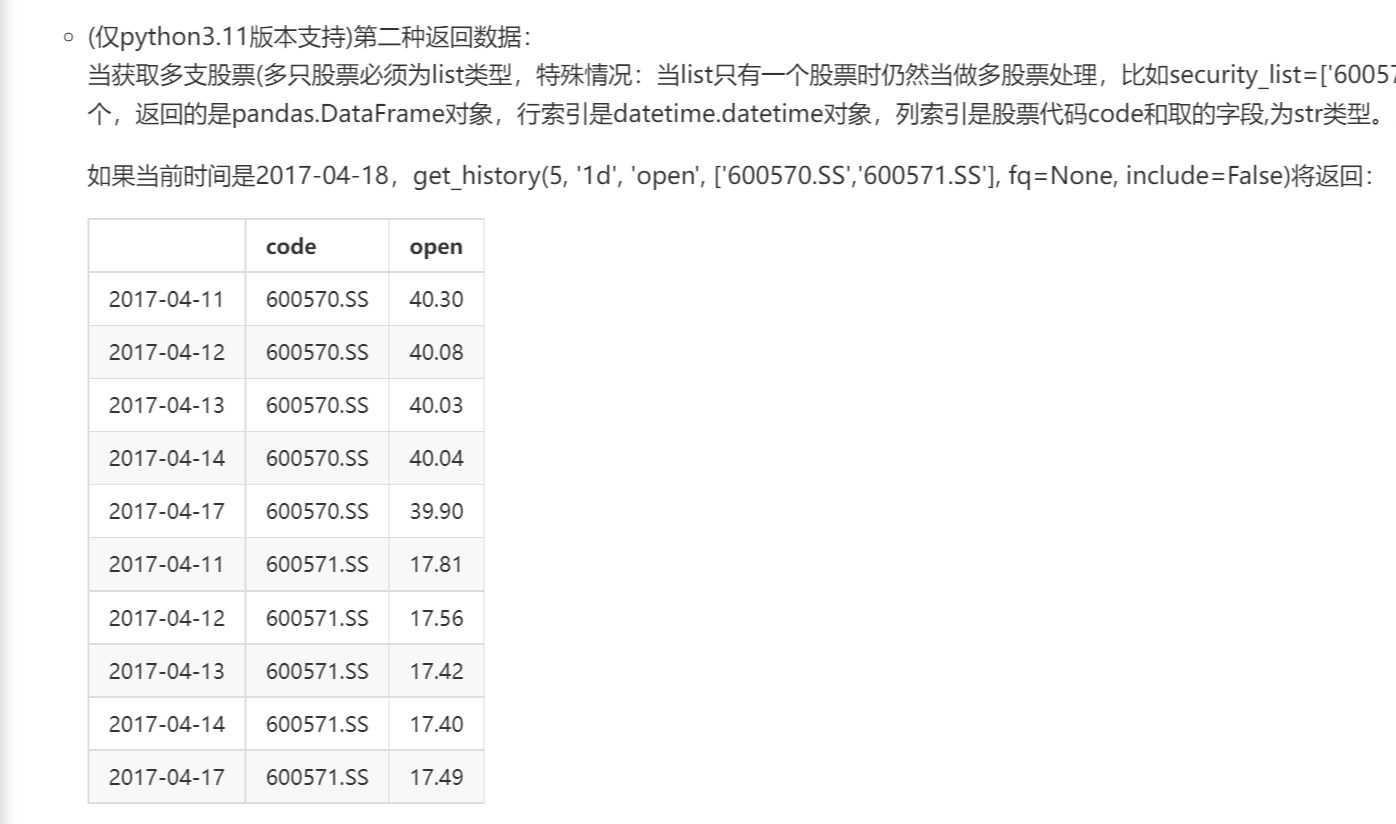

其实,个人感觉,现在的ptrade更加好用,不用再考虑不同的数据输入,来判读不同的返回结果。

get_history 函数,无论什么情况,都是返回dataframe格式。字段也是完全统一了

使用 sub_df = df[df['code']=code]

就能获取不同的输入格式。

换以前,如果传入的是一个字段 get_history(fields='close') 和get_history(fields=['low','close'])

返回的格式还得区分处理。

现在统一了,更加好用了。
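A minimal pandas sketch of working with this long format. The rows below are copied from a real get_history sample shown later on this page (the Level-2 post); the variable names are illustrative, and the frame is built by hand so the snippet runs outside Ptrade.

```python
import pandas as pd

# Hand-built frame shaped like the unified get_history result:
# a date index, a 'code' column, then one column per requested field.
df = pd.DataFrame(
    {
        "code": ["000695.SZ", "000695.SZ", "001367.SZ", "001367.SZ"],
        "open": [11.37, 12.51, 20.05, 20.98],
        "close": [11.37, 12.51, 20.70, 22.77],
    },
    index=pd.to_datetime(["2025-05-20", "2025-05-21", "2025-05-21", "2025-05-22"]),
)

# One security: boolean mask on the 'code' column (note '==', not '=')
sub_df = df[df["code"] == "001367.SZ"]

# Every security at once: groupby yields (code, sub-DataFrame) pairs, so the
# same loop body works whether one code or many were requested.
last_close = {code: g["close"].iloc[-1] for code, g in df.groupby("code")}

print(sub_df)
print(last_close)
```

Because the shape no longer depends on how many fields or codes you request, the groupby loop replaces the old per-format branching.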

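Shanxi Securities currently has a low bar for Ptrade: a deposit of 50,000 yuan is enough to get it. The commission is 0.854 bp with the 5-yuan minimum waived (minimum 0.1 yuan per trade), which is among the lowest of the many brokers out there.

If you need it, contact me through the WeChat official account to open an account: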


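Dongguan Securities has removed the programming module from Ptrade

Ptrade • 李魔佛 posted an article • 0 comments • 357 views • 2025-11-23 13:17

Its entry requirements were fairly low, so it made a decent backup broker.

No idea what management was thinking, but the programming module has been taken out.

Only Ptrade's built-in basic features remain,

so you can no longer implement your own custom strategies in Python.

A bit of a pity.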

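The built-in ibus Chinese smart input method on Ubuntu 24.04 is terrible

Linux • 李魔佛 posted an article • 0 comments • 283 views • 2025-11-22 23:34

Sogou Pinyin does not support the Wayland desktop on Ubuntu 24.04, and Ubuntu 24.04's default session is Wayland rather than Xorg.

To use Sogou Pinyin you have to switch to an Xorg session.

But after switching to Xorg, Chrome will not even launch, and you would have to downgrade Chrome...

Speechless.

So I switched back and used the built-in smart Chinese input method.

It is far too dumb... poor with long sentences, and no good for anyone who often mistypes pinyin...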

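Ptrade does not support REITs: it cannot even read REIT positions you already hold

Ptrade • 李魔佛 posted an article • 0 comments • 300 views • 2025-11-18 12:45

When I then called get_positions to fetch my holdings, the result was empty.

At first I suspected the code suffix in my program was wrong. After checking again and again without finding anything, I remembered that Ptrade currently does not support REITs.

But I did not expect it to be unable to read even the codes of positions already held...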


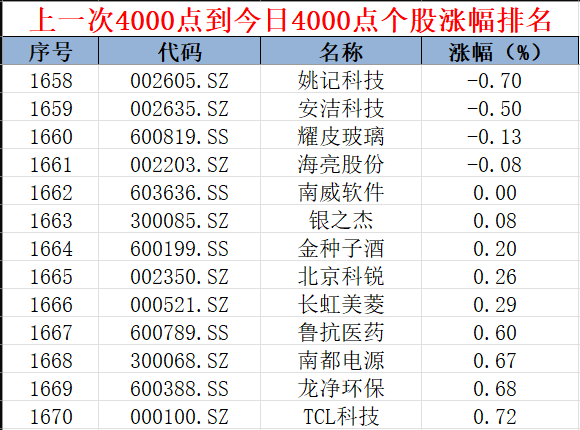

指数回来了,收益没回来!十年前 4000 点买入,多少股票至今没回本?

股票 • 李魔佛 发表了文章 • 0 个评论 • 511 次浏览 • 2025-10-29 08:58

可惜只持续了40分钟,收盘时跌回到3988点。

不过本轮并不是全面的牛市,没在暴涨板块的股民自嘲:

8000点的农行,6000点的科技,4000点的红利,剩下的全是2000点的难兄难弟。

十年光阴荏苒,上证指数上一次收盘价超过 4000 点是在 2015 年 7 月 24 日,当日收于 4070.91 点。

指数重回4000点,而又有多少股票的股价能够重回十年前4000点的时候股价呢?或者说还有多少股票依然还在以前4000点的时候的股价还低呢?

问了一下豆包,deepseek这个问题,结果说找不到现成的数据,无法分析。

迅速在ptrade上写个代码查询一下。

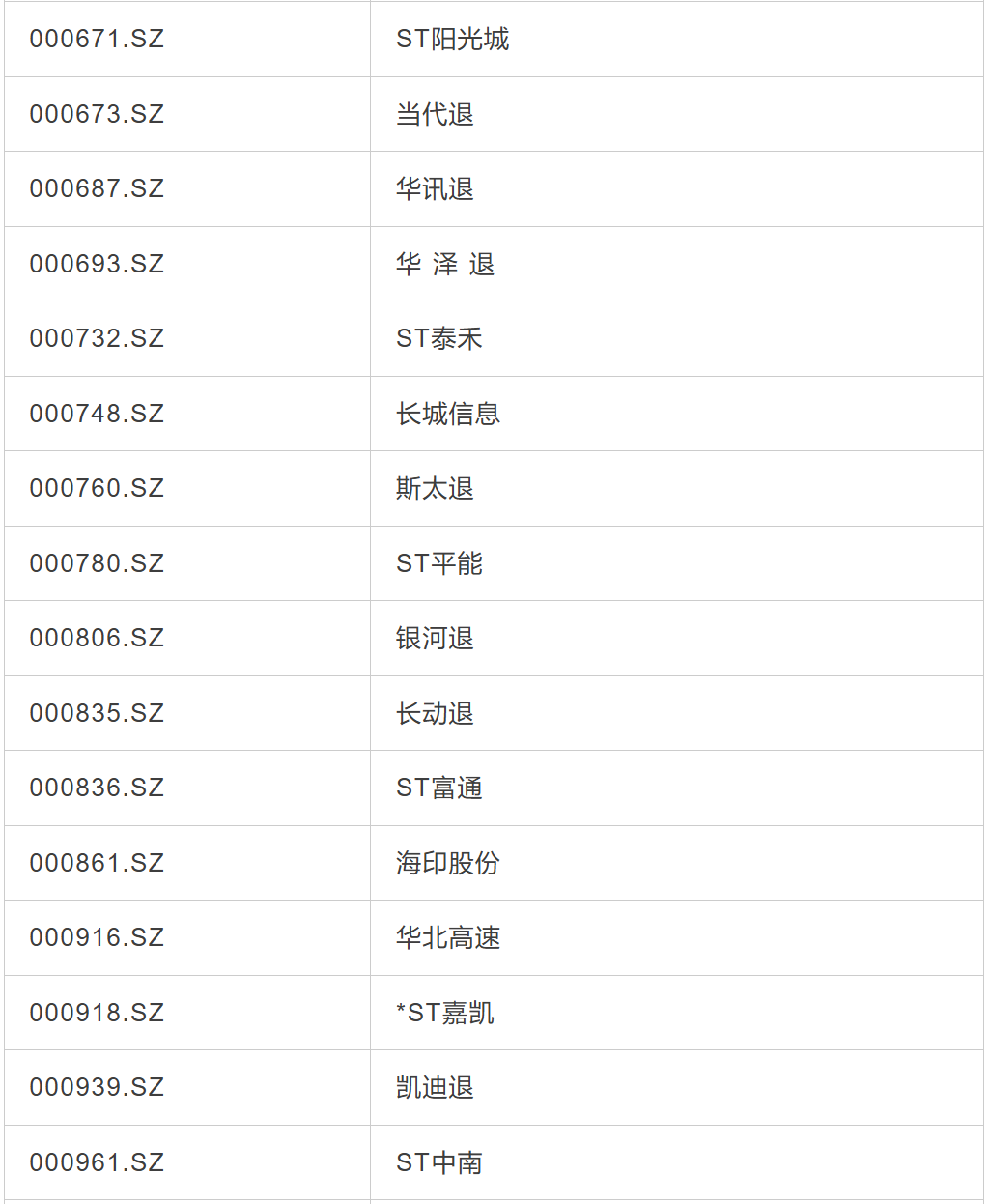

2015 年 7 月 24 日 市场有有沪深股票2783只,而当时的2783只股票今天依然在市场交易的有2567只。大约有216只股票退市(少数是合并、收购,大部分原因是退市)。

也就是十年前扔飞镖随便买一只股票,会有7.7%的概率买到退市股,并且是那种目前彻底退出的沪深板块,流入到退市板块。

下面列出期间的退市股(含收购合并),数据较多,对细节不感兴趣的可直接滚动到最后。

依然在交易的排除掉7只停牌的股票,有2559只存续交易的股票。

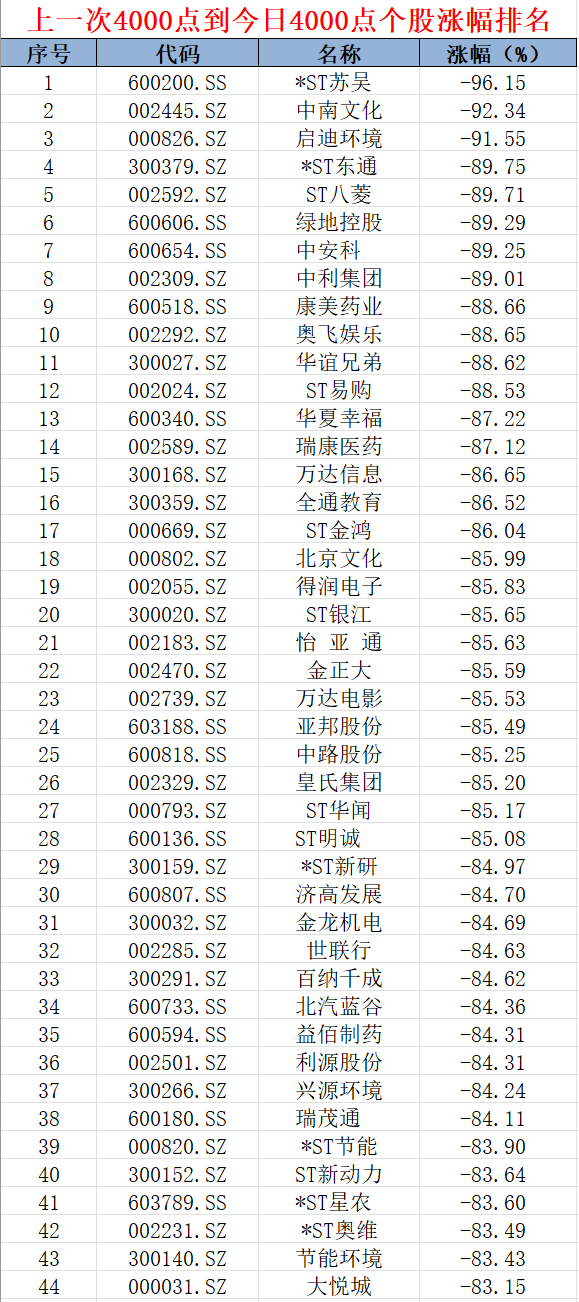

跌幅榜的排名。

不少跌幅达到80%-90%,不少是当时的牛股,全通交易,怡亚通,ST易购(苏宁易购),康美药业,万达电影等等。

跌幅为0的排到1662名了。数据太长,贴不下了。

也就是上证指数回到上一次的4000点,有1661只个股依然没有回到当时的股价,占比达到了64.9%。(注意,上面的股价是前复权的价格,是已经包含了分红的全收益价格。)

看完这个数据整个人都不太好受了,股民的十年青春在股海沉浮,最终超过一半的个股还没有回到牛市的起点。 查看全部
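The author's Ptrade query is not shown. Below is a minimal sketch of the counting logic only, assuming you already hold forward-adjusted closes for both dates in plain dicts; the function name, the toy codes and the toy prices are hypothetical. A stock missing from today's prices is treated as having left the market, and a stock counts as under water only if its change is strictly negative, matching "the first stock with a decline of 0 is at rank 1,662".

```python
def underwater_stats(start_prices, current_prices, suspended=()):
    """Count departed and still-underwater stocks between two dates.

    start_prices:   code -> forward-adjusted close on the start date
    current_prices: code -> forward-adjusted close today; a code missing
                    here is treated as having left the market
    suspended:      codes excluded from the price comparison
    """
    listed_then = set(start_prices)
    survivors = listed_then & set(current_prices)
    delisted = listed_then - survivors
    compared = survivors - set(suspended)
    # Change since the start date for every surviving, tradable stock
    change = {c: current_prices[c] / start_prices[c] - 1 for c in compared}
    underwater = sorted(c for c, r in change.items() if r < 0)
    return {
        "listed_then": len(listed_then),
        "delisted": len(delisted),
        "delisted_ratio": len(delisted) / len(listed_then),
        "compared": len(compared),
        "underwater": len(underwater),
        "underwater_ratio": len(underwater) / len(compared) if compared else 0.0,
    }


# Toy inputs (hypothetical codes and prices), just to exercise the logic
start = {"A": 10.0, "B": 20.0, "C": 5.0, "D": 8.0, "E": 4.0}
now = {"A": 12.0, "B": 10.0, "C": 5.5, "E": 3.0}
stats = underwater_stats(start, now, suspended=["E"])
print(stats)

# The post's own counts plugged into the same ratios
print(round(216 / 2783 * 100, 1), round(1661 / 2559 * 100, 1))  # 7.8 64.9
```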


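Northeast Securities QMT / miniQMT (NET Professional Edition): 0.854 bp, 5-yuan minimum waived

QMT • 李魔佛 posted an article • 0 comments • 795 views • 2025-10-16 23:05

Northeast Securities calls its QMT the "NET Professional Edition".

Its QMT supports two languages, Python and VBA.

After opening an account you can join the quant tech group.

Scan the QR code to get in touch about opening an account.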

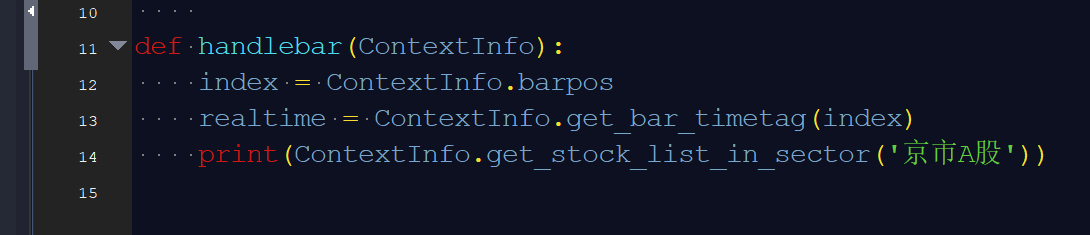

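Does Ptrade support quant trading of Beijing Stock Exchange stocks?

Ptrade • 李魔佛 posted an article • 0 comments • 714 views • 2025-10-09 11:36

Searching the documentation turns up nothing, and using a BSE code with either the .SS suffix or a .BJ suffix returns no data at all.

Ptrade API docs:

https://ptradeapi.com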


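Shanxi Securities Ptrade: open an account at 1 bp with the 5-yuan minimum waived

Ptrade • 李魔佛 posted an article • 0 comments • 862 views • 2025-09-26 01:44

Shanxi Securities' Ptrade has a low entry bar in the first place: deposit 50,000 yuan and you get Ptrade permanently, free of charge.

The default commission rate is fairly high, though, and the 5-yuan minimum is not waived.

But after depositing 50,000 yuan, the rate can be lowered to 1 bp with the minimum waived.

Because Gaodun Education's (高顿教育) quant course uses Shanxi Securities for its examples, it also has a sizable user base.

If you need it, scan the code and follow to open an account.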

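Ptrade ETF rotation: returns that crush 白云飞's

Ptrade • 李魔佛 posted an article • 0 comments • 956 views • 2025-09-11 20:07

Take away the "924" rally (the surge that began on 24 September 2024) and this year's ChiNext + STAR Market, CPO and chip run, and its return is negative. That is three long years of drawdown: unless it is spare money you will not need for a very long time, nobody can put up with a drawdown that long.

By contrast, ETF rotation driven by daily and weekly net share growth + momentum + a sector "thermometer" + scoring factors can achieve very high returns.

Backtested in Ptrade.

Run live in Ptrade.

TBC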

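Computing the EMA indicator in Ptrade, compared with Tonghuashun (同花顺)

Ptrade • 李魔佛 posted an article • 0 comments • 1050 views • 2025-09-01 23:04

Initial value:

Standard EMA: uses the SMA (simple moving average) of the first period as the initial value.

Tonghuashun EMA: uses the close of the first bar directly as the initial EMA value.

Recursion:

After the initial value, both use the same recursive formula (N is the period):

```plaintext
EMA(today) = (2/(N+1)) × Close(today) + (1 - 2/(N+1)) × EMA(yesterday)
```

Practical effect:

Because the initial values differ, the two methods give noticeably different results early on (while there are fewer bars than the period length). Since both then apply the same recursion, the gap shrinks by a factor of (1 - 2/(N+1)) with every new bar.

To use the function below, put your closing prices into a pandas Series and call it; it reproduces Tonghuashun's EMA values, which is handy for backtesting and for comparing indicators.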

```python
import pandas as pd


def tonghuashun_ema(close_series, period):
    """
    Compute the EMA the way Tonghuashun does.

    Parameters:
        close_series: pd.Series of closing prices, indexed by date
        period: int, EMA period (e.g. 5, 10, 50)

    Returns:
        pd.Series with the EMA values
    """
    if len(close_series) < 1:
        return pd.Series(dtype='float64')

    # Smoothing factor
    alpha = 2 / (period + 1)

    # Initialise the EMA series (all NaN)
    ema = pd.Series(index=close_series.index, dtype='float64')

    # Tonghuashun's convention: the first EMA value equals that day's close
    ema.iloc[0] = close_series.iloc[0]

    # From the second bar on, apply the recursion
    for i in range(1, len(close_series)):
        ema.iloc[i] = alpha * close_series.iloc[i] + (1 - alpha) * ema.iloc[i - 1]

    return ema


# Example: Tonghuashun-style EMA(12) and EMA(26), the usual MACD components
if __name__ == "__main__":
    import numpy as np

    # Simulated data: 50 daily closes (calendar dates, for illustration only)
    dates = pd.date_range(start='2023-01-01', periods=50)
    np.random.seed(42)  # fixed seed so the result is reproducible
    close_prices = np.cumsum(np.random.randn(50)) + 100  # simulated price path
    close_series = pd.Series(close_prices, index=dates, name='close')

    ema12 = tonghuashun_ema(close_series, 12)
    ema26 = tonghuashun_ema(close_series, 26)

    # Combine and display
    result = pd.DataFrame({
        'close': close_series,
        'EMA12 (THS)': ema12,
        'EMA26 (THS)': ema26,
    })
    print("Tonghuashun EMA results (first 10 rows):")
    print(result.head(10))
    print("\nTonghuashun EMA results (last 10 rows):")
    print(result.tail(10))
```

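Then paste the function above into Ptrade and pass in daily bar data to use it (the `__main__` demo block is only for running it locally).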
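A quick way to check the two seeding conventions side by side. pandas' `ewm(span=N, adjust=False)` applies exactly this recursion seeded with the first value, so it should match the loop version and can serve as a vectorized alternative. The `sma_seeded_ema` helper is a hypothetical illustration of the "standard" SMA-seeded variant described above, not code from the post.

```python
import numpy as np
import pandas as pd


def ths_ema(close, period):
    # Same recursion as tonghuashun_ema: seed with the first close
    alpha = 2 / (period + 1)
    out = [close.iloc[0]]
    for x in close.iloc[1:]:
        out.append(alpha * x + (1 - alpha) * out[-1])
    return pd.Series(out, index=close.index)


def sma_seeded_ema(close, period):
    # "Standard" variant: NaN until `period` bars exist, seeded with their SMA
    alpha = 2 / (period + 1)
    out = pd.Series(np.nan, index=close.index)
    if len(close) < period:
        return out
    out.iloc[period - 1] = close.iloc[:period].mean()
    for i in range(period, len(close)):
        out.iloc[i] = alpha * close.iloc[i] + (1 - alpha) * out.iloc[i - 1]
    return out


rng = np.random.default_rng(42)
close = pd.Series(100 + np.cumsum(rng.standard_normal(120)))

ths = ths_ema(close, 12)
vectorized = close.ewm(span=12, adjust=False).mean()
std = sma_seeded_ema(close, 12)

print("max |ths - ewm(adjust=False)|:", (ths - vectorized).abs().max())
gap = (ths - std).abs()
print("gap at bar 12:", gap.iloc[11], "gap at last bar:", gap.iloc[-1])
```

Note that pandas' default `adjust=True` uses normalized weights instead of this recursion, so `ewm(span=12).mean()` without `adjust=False` will not match Tonghuashun in the early bars.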


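Shenwan Hongyuan: Ptrade / QMT / miniQMT with the 5-yuan minimum waived

QMT • 李魔佛 posted an article • 0 comments • 1329 views • 2025-08-07 19:01

Supports QMT, miniQMT and Ptrade.

It has every quant feature you could want, and is one of the few brokers so far that offer miniQMT with the 5-yuan minimum waived.

Low fees: ETFs and convertible bonds are both 0.5 bp.

Accounts can be opened online; scan the code to get in touch if you need one.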

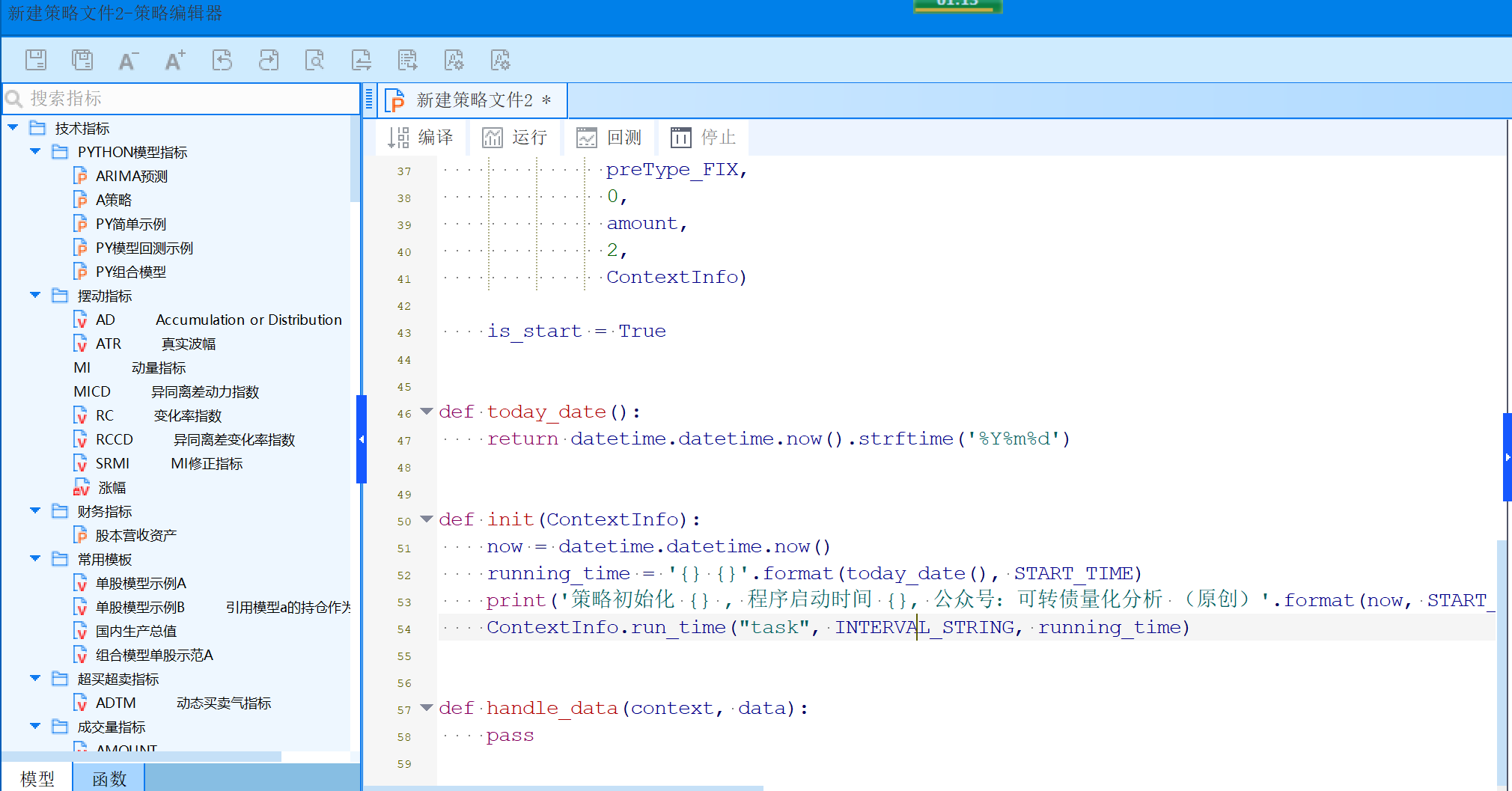

Getting call-auction data in Ptrade

Ptrade • 李魔佛 posted an article • 0 comments • 1206 views • 2025-08-07 18:48

```python
date = '20250806'
stocks = ['300333.SZ']
data = get_trend_data(date=date, stocks=stocks)
```

Returned data:

```python
{'300333.SZ': {'time_stamp': 202508060930,
               'hq_px': 14.1,
               'wavg_px': 14.1,
               'business_amount': 40300,
               'business_balance': 568230,
               'amount': 0}}
```

It works in live trading, in backtesting and in the research environment.
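A small sketch of unpacking that dict, using the exact sample above. Two assumptions, not confirmed by the post: `time_stamp` is a packed YYYYMMDDHHMM integer, and `business_amount` / `business_balance` are volume in shares and turnover in yuan. In the sample, turnover divided by volume reproduces `wavg_px`, which supports that reading.

```python
from datetime import datetime

# Sample copied from the get_trend_data output above
data = {'300333.SZ': {'time_stamp': 202508060930,
                      'hq_px': 14.1,
                      'wavg_px': 14.1,
                      'business_amount': 40300,
                      'business_balance': 568230,
                      'amount': 0}}

for code, snap in data.items():
    # Assumed layout: YYYYMMDDHHMM packed into an int
    ts = datetime.strptime(str(snap['time_stamp']), '%Y%m%d%H%M')
    # Cross-check: turnover / volume should give the average matched price
    implied_px = snap['business_balance'] / snap['business_amount']
    print(code, ts, round(implied_px, 2), snap['wavg_px'])
```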

WeChat official account: 可转债量化分析 (Convertible Bond Quant Analysis)

Knowledge Planet (知识星球) group

Ptrade data quality across brokers: ETF data quality varies widely

Ptrade • 李魔佛 posted an article • 0 comments • 1262 views • 2025-07-23 18:28

Run the following on different brokers' Ptrade:

```python
etf_list = get_etf_list()
```

Result on Guosheng Securities (国盛):

```text
2025-07-23 16:07:15 - INFO - 公众号:可转债量化分析 ---- start ----
2025-07-23 16:08:00 - INFO - start func
2025-07-23 16:08:00 - INFO - 公众号:可转债量化分析
2025-07-23 16:08:00 - INFO - 660
```

That is 660 ETFs.

The same code on Sinolink Securities' (国金) Ptrade gives:

```text
2025-07-23 15:45:10 - INFO - 当前服务器配置为:交易时间段服务器重启后,执行拉起本交易操作
2025-07-23 15:45:12 - INFO - 公众号:可转债量化分析 ---- start ----
2025-07-23 15:46:00 - INFO - start func
2025-07-23 15:46:00 - INFO - 公众号:可转债量化分析
2025-07-23 15:46:00 - INFO - 1043
```

That is over 1,000 ETFs (1,043).

Then I exported both logs to my machine and used Python to find the differences:

(py11) D:\github\stock_strategy\api_validation>python etf-compare.py

516330.SS

561320.SS

159265.SZ

560980.SS

159813.SZ

159859.SZ

561700.SS

511620.SS

159898.SZ

159969.SZ

512380.SS

159972.SZ

159857.SZ

516300.SS

588200.SS

510180.SS

517520.SS

515680.SS

159618.SZ

159827.SZ

561980.SS

511820.SS

515150.SS

159701.SZ

159743.SZ

159623.SZ

159991.SZ

510660.SS

159681.SZ

159845.SZ

515890.SS

515850.SS

515300.SS

159510.SZ

511920.SS

159805.SZ

159610.SZ

159929.SZ

517170.SS

530800.SS

510630.SS

159535.SZ

561790.SS

159842.SZ

510150.SS

560580.SS

517800.SS

515790.SS

159806.SZ

516530.SS

159873.SZ

159207.SZ

515320.SS

516750.SS

159635.SZ

515290.SS

515030.SS

561130.SS

588150.SS

560680.SS

560280.SS

561570.SS

515760.SS

515900.SS

511090.SS

159643.SZ

588040.SS

159968.SZ

512520.SS

159322.SZ

516520.SS

159841.SZ

159359.SZ

515160.SS

511260.SS

516710.SS

159698.SZ

588230.SS

159786.SZ

562900.SS

159309.SZ

159358.SZ

563800.SS

159677.SZ

159551.SZ

517850.SS

159899.SZ

159862.SZ

159961.SZ

159779.SZ

515580.SS

159836.SZ

510060.SS

515010.SS

159355.SZ

560700.SS

159885.SZ

517880.SS

159864.SZ

159640.SZ

510270.SS

560150.SS

515750.SS

159003.SZ

159748.SZ

515330.SS

159730.SZ

159994.SZ

516290.SS

516080.SS

560510.SS

159005.SZ

159306.SZ

159378.SZ

512960.SS

159680.SZ

159847.SZ

159310.SZ

159587.SZ

588400.SS

515770.SS

159393.SZ

159657.SZ

516220.SS

159575.SZ

560350.SS

159997.SZ

515110.SS

159713.SZ

159880.SZ

159576.SZ

159520.SZ

562360.SS

588930.SS

518660.SS

159669.SZ

159206.SZ

159621.SZ

159928.SZ

159731.SZ

516050.SS

562390.SS

159831.SZ

516510.SS

159913.SZ

159633.SZ

511190.SS

512670.SS

516780.SS

517110.SS

159820.SZ

159690.SZ

159881.SZ

561230.SS

510010.SS

159992.SZ

159666.SZ

159725.SZ

159870.SZ

511010.SS

515060.SS

560860.SS

515100.SS

159993.SZ

512820.SS

159825.SZ

511900.SS

515990.SS

159849.SZ

563330.SS

512240.SS

159974.SZ

159910.SZ

159638.SZ

512890.SS

159641.SZ

159875.SZ

159682.SZ

159796.SZ

159887.SZ

159620.SZ

510880.SS

159225.SZ

159720.SZ

510410.SS

159883.SZ

159931.SZ

159363.SZ

588100.SS

561200.SS

560550.SS

159791.SZ

159229.SZ

159982.SZ

159647.SZ

560610.SS

159386.SZ

159559.SZ

159630.SZ

159795.SZ

511200.SS

512510.SS

561260.SS

159589.SZ

562860.SS

560090.SS

159889.SZ

159707.SZ

588320.SS

159678.SZ

510680.SS

159863.SZ

515980.SS

159543.SZ

510100.SS

159679.SZ

512040.SS

530000.SS

511770.SS

159838.SZ

159553.SZ

159998.SZ

515080.SS

588110.SS

561990.SS

159729.SZ

515120.SS

159739.SZ

159856.SZ

159800.SZ

510020.SS

510650.SS

159909.SZ

517550.SS

515630.SS

159685.SZ

515960.SS

515380.SS

561910.SS

515860.SS

518680.SS

588250.SS

159603.SZ

159339.SZ

159778.SZ

159958.SZ

516120.SS

588090.SS

159781.SZ

562300.SS

159761.SZ

517080.SS

159617.SZ

516060.SS

588680.SS

511580.SS

159521.SZ

159770.SZ

159397.SZ

159888.SZ

159816.SZ

516880.SS

159716.SZ

516910.SS

159209.SZ

588880.SS

562800.SS

515650.SS

561590.SS

159715.SZ

159552.SZ

512280.SS

588830.SS

516790.SS

159656.SZ

588460.SS

561350.SS

511850.SS

516830.SS

515530.SS

517120.SS

159658.SZ

563360.SS

517010.SS

516630.SS

159608.SZ

159959.SZ

563300.SS

563350.SS

516650.SS

561920.SS

561310.SS

516460.SS

512680.SS

159782.SZ

515360.SS

159628.SZ

159629.SZ

560260.SS

512730.SS

588010.SS

159697.SZ

588760.SS

513890.SS

159977.SZ

516500.SS

561500.SS

512220.SS

510550.SS

159555.SZ

588300.SS

530300.SS

159649.SZ

159395.SZ

560560.SS

516950.SS

511810.SS

561330.SS

159732.SZ

511600.SS

562890.SS

516550.SS

159717.SZ

159797.SZ

159970.SZ

561900.SS

561560.SS

159890.SZ

159721.SZ

560190.SS

159366.SZ

515950.SS

511950.SS

159908.SZ

159861.SZ

159517.SZ

159975.SZ

159625.SZ

159830.SZ

510050.SS

159895.SZ

517900.SS

159652.SZ

510990.SS

159811.SZ

159852.SZ

517000.SS

159930.SZ

159840.SZ

515070.SS

511670.SS

159807.SZ

561550.SS

511980.SS

159675.SZ

159611.SZ

560880.SS

515660.SS

560530.SS

511830.SS

159766.SZ

159631.SZ

561190.SS

512150.SS

561950.SS

517360.SS

518880.SS

510850.SS

159768.SZ

159876.SZ

159398.SZ

159865.SZ

515600.SS

159360.SZ

159305.SZ

511970.SS

159671.SZ

588310.SS

516770.SS

159320.SZ

516670.SS

517100.SS

159572.SZ

159839.SZ

159627.SZ

159665.SZ

159790.SZ

563520.SS

511520.SS

511690.SS

561160.SS

159723.SZ

159216.SZ

159886.SZ

510190.SS

159798.SZ

562590.SS

159843.SZ

159867.SZ

159663.SZ

159619.SZ

516090.SS

515090.SS

560080.SS

159869.SZ

159872.SZ

159736.SZ

560900.SS

588950.SS

159855.SZ

516150.SS

516960.SS

159828.SZ

517050.SS

159851.SZ

159645.SZ

511930.SS

159935.SZ

159703.SZ

517200.SS

512020.SS

159787.SZ

588240.SS

159362.SZ

517990.SS

561960.SS

562880.SS

159971.SZ

159760.SZ

159871.SZ

513630.SS

561380.SS

Number of differing ETFs: 432

These 400-plus ETFs are missing on Guosheng.
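The author's `etf-compare.py` is not shown. A minimal sketch of the same comparison, assuming each exported log contains the ETF codes as text such as `516330.SS` or `159265.SZ`; the function names and the two tiny sample strings are hypothetical.

```python
import re

# Six digits, a dot, then the exchange suffix used by Ptrade (SS or SZ)
CODE_RE = re.compile(r"\b\d{6}\.(?:SS|SZ)\b")


def codes_in_log(text):
    """Return the set of security codes that appear anywhere in a log dump."""
    return set(CODE_RE.findall(text))


def compare(log_a, log_b):
    a, b = codes_in_log(log_a), codes_in_log(log_b)
    return {
        "only_in_a": sorted(a - b),
        "only_in_b": sorted(b - a),
        "common": len(a & b),
    }


# Hypothetical log excerpts
guojin_log = "INFO - ['516330.SS', '159265.SZ', '510300.SS']"
guosheng_log = "INFO - ['510300.SS']"

res = compare(guojin_log, guosheng_log)
print(len(res["only_in_a"]), res["only_in_a"])
```

Reporting both directions of the set difference matters here: one broker's list is not necessarily a strict subset of the other's.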

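That is a rather shocking gap...

If you want to use Guosheng, you can export the ETF list from Sinolink to a txt file, upload it to Guosheng, and read that list in your program.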
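A sketch of the export/import round trip, assuming one code per line in a UTF-8 text file. Where uploaded files live on the Guosheng side is broker-specific and not covered in the post, so the temporary path below is only a stand-in.

```python
import os
import re
import tempfile

VALID_CODE = re.compile(r"^\d{6}\.(?:SS|SZ)$")


def save_codes(path, codes):
    """Write one code per line, sorted, so the file diffs cleanly."""
    with open(path, "w", encoding="utf-8") as f:
        f.write("\n".join(sorted(codes)) + "\n")


def load_codes(path):
    """Read codes back, skipping blank or malformed lines."""
    with open(path, encoding="utf-8") as f:
        return [line.strip() for line in f if VALID_CODE.match(line.strip())]


# Stand-in location; on Ptrade use wherever your broker stores uploaded files
demo_path = os.path.join(tempfile.mkdtemp(), "etf_list.txt")
save_codes(demo_path, ["516330.SS", "159265.SZ"])
loaded = load_codes(demo_path)
print(loaded)
```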


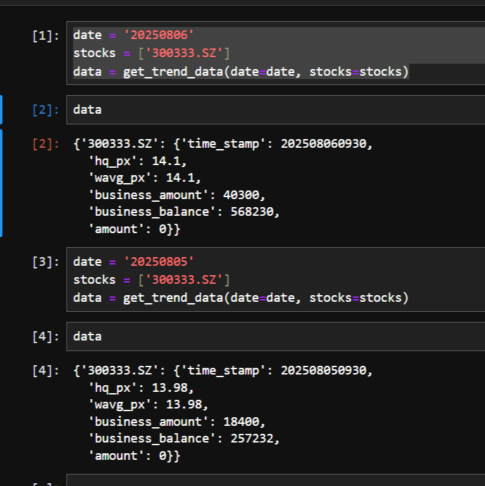

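Changjiang Securities Ptrade: 5-yuan minimum waived, account requirements

Ptrade • 李魔佛 posted an article • 0 comments • 1654 views • 2025-07-22 16:57

Software description: Changjiang Securities' PTrade trading terminal is developed by Hundsun Technologies (恒生电子) and built to serve investors' professional and personalised trading needs.

Features:

1. Easy operation: lightning orders, one-click cancel-and-replace, one-click liquidation, batch cancellation, full-keyboard trading, etc.

2. Rich features: strategy trading, turning-point trading, grid trading, order snatching, limit-up chasing, order-book sweeping, convertible-bond arbitrage, ETF arbitrage, basket trading and other tools

3. Quant trading: strategy orders plus a complete strategy platform for strategy research, writing, backtesting and live trading

4. Fast trading: through the 华锐 ATP low-latency counter and a fast trading channel

MD5: 16ab7feba617b254c1607320ce69a780

Version: PTrade1.0-Client-V202308-15-000

File size: 215.2 MB

File name: PTrade1.0-Client-V202308-15-000_CY.zip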
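Since the post publishes an MD5, here is a short sketch of checking the downloaded zip against it with Python's `hashlib`. The chunked read keeps the 200+ MB file out of memory; the demo at the bottom hashes a tiny temporary file instead of the real download.

```python
import hashlib
import os
import tempfile

EXPECTED_MD5 = "16ab7feba617b254c1607320ce69a780"  # value published in this post


def md5_of_file(path, chunk_size=1 << 20):
    """Hash a file in 1 MiB chunks so large installers never sit in memory."""
    h = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def verify(path, expected=EXPECTED_MD5):
    return md5_of_file(path) == expected.lower()


# Self-check on a tiny temporary file; point verify() at the real zip instead
demo_path = os.path.join(tempfile.mkdtemp(), "demo.bin")
with open(demo_path, "wb") as f:
    f.write(b"abc")
digest = md5_of_file(demo_path)
print(digest, verify(demo_path))
```

On Windows, `certutil -hashfile PTrade1.0-Client-V202308-15-000_CY.zip MD5` gives the same digest without Python.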

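There are two versions. One is the Changjiang Securities PTrade trading terminal (Express Edition, 极速版), on which you can write Python strategies;

the other is the PTrade trading terminal (Lite Edition, 简版), which only provides basic quant tools such as arbitrage, grid trading and intraday T+0 trading, and does not support Python programming. So make sure you do not open the wrong one.

Download link:

https://downcq.95579.com/jcj/zg/PTrade1.0-Client-V202308-15-000_CY.zip

Regularly updated site:

https://ptradeapi.com/

If you need an account, follow the WeChat official account to get in touch: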


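Converting a JoinQuant (聚宽) small-cap strategy into Ptrade live-trading code

Ptrade • 李魔佛 posted an article • 0 comments • 2202 views • 2025-07-05 22:35

Marking this here; I will share it once it is finished.

The JoinQuant strategy is not purely market-cap based: it also uses turnover and limit-up/limit-down conditions to decide whether to sell.

JoinQuant source (partial):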

```python
# Import libraries
from jqdata import *
from jqfactor import *
import numpy as np
import pandas as pd
from datetime import time, datetime, timedelta


# Initialisation
def initialize(context):
    # Strategy parameters
    g.signal = ''
    # Guard against look-ahead (future) data
    set_option('avoid_future_data', True)
    # Benchmark
    set_benchmark('000300.XSHG')
    # Trading settings
    set_option('use_real_price', True)
    set_slippage(FixedSlippage(3/10000))
    set_order_cost(OrderCost(open_tax=0, close_tax=0.001,
                             open_commission=2.5/10000, close_commission=2.5/10000,
                             close_today_commission=0, min_commission=5), type='stock')
    # Logging
    log.set_level('order', 'error')
    log.set_level('system', 'error')
    log.set_level('strategy', 'debug')
    # Strategy control flags
    g.no_trading_today_signal = False  # whether today is a no-trade day
    g.pass_april = True                # stay in cash during April
    g.run_stoploss = True              # enable stop-loss
    g.HV_control = False               # enable high-volume control
    g.HV_duration = 120                # look-back period for the high-volume check
    g.HV_ratio = 0.9                   # high-volume threshold ratio
    g.no_trading_hold_signal = False   # whether to hold stocks during no-trade periods
    # Position bookkeeping
    g.hold_list = []           # all stocks currently held
    g.yesterday_HL_list = []   # held stocks that hit limit-up yesterday
    g.target_list = []         # target buy list
    g.not_buy_again = []       # stocks not to be bought again
    # Trading parameters
    g.stock_num = 3            # number of holdings
    g.up_price = 100           # maximum buy price
    g.reason_to_sell = ''      # reason for selling
    g.stoploss_strategy = 3    # stop-loss: 1 = per-stock line, 2 = market trend, 3 = both
    g.stoploss_limit = 0.91    # per-stock stop-loss line
    g.stoploss_market = 0.95   # market-trend stop-loss parameter
    g.no_trading_buy = ['600036.XSHG', '518880.XSHG', '600900.XSHG']  # held in no-trade months
    # Schedule
    run_daily(prepare_stock_list, '9:05')
    run_weekly(weekly_adjustment, 2, '10:30')
    run_daily(sell_stocks, time='10:00')      # stop-loss
    run_daily(trade_afternoon, time='14:25')  # check whether held limit-up stocks should be sold
    run_daily(trade_afternoon, time='14:55')  # same check, near the close
    run_daily(close_account, '14:50')
```

Converted Ptrade live-trading source (partial):

```python
def before_trading_start(context, data):
    log.info('================= pre-market start: {} '.format(str(context.blotter.current_dt)))
    g.__portfolio = PositionManager()  # PositionManager is not shown in this excerpt
    g.order_set = set()
    # g.__lock = threading.Lock()


def remove_st_stock(all_stock_list):
    # Despite the name, this returns the ST stocks; the caller removes them
    st_dict = get_stock_status(all_stock_list, query_type='ST', query_date=None)
    st_list = []
    for k, v in st_dict.items():
        if v:
            st_list.append(k)
    return st_list


def remove_halt_stock(all_stock_list):
    # Likewise returns the suspended (halted) stocks
    halt_dict = get_stock_status(all_stock_list, query_type='HALT', query_date=None)
    halt_list = []
    for k, v in halt_dict.items():
        if v:
            halt_list.append(k)
    return halt_list


def all_codes_in_market():
    # IGNORE_MARKET and MARKET_DICT are configuration constants not shown in this excerpt
    all_stock_set = set(get_Ashares(date=None))
    for ignore_code in IGNORE_MARKET:
        market = MARKET_DICT.get(ignore_code)
        if market == '科创板':  # STAR Market: codes start with 68
            all_stock_set = all_stock_set - set(filter(lambda x: x.startswith('68'), all_stock_set))
        if market == '创业板':  # ChiNext: codes start with 3
            all_stock_set = all_stock_set - set(filter(lambda x: x.startswith('3'), all_stock_set))
    return all_stock_set
```
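The two `remove_*` helpers above return the flagged codes rather than the filtered list, so the subtraction has to happen in the caller. A sketch of doing it in one place: the status dicts are passed in with the same code-to-flag shape that `get_stock_status` returns in the code above, so the logic can be run and tested outside Ptrade. The function name and the sample codes are hypothetical.

```python
def tradable_universe(all_stocks, st_status, halt_status, excluded_prefixes=("68",)):
    """Return codes that are not ST, not halted and not on an excluded board.

    st_status / halt_status: dicts of code -> truthy flag, shaped like the
    get_stock_status() results used above.
    excluded_prefixes: code prefixes to drop, e.g. "68" for the STAR Market.
    """
    flagged = {c for c, v in st_status.items() if v}
    flagged |= {c for c, v in halt_status.items() if v}
    return sorted(
        c for c in all_stocks
        if c not in flagged and not c.startswith(tuple(excluded_prefixes))
    )


# Hypothetical inputs
stocks = ["600000.SS", "000001.SZ", "688001.SS", "300750.SZ"]
st = {"600000.SS": False, "000001.SZ": True}
halt = {"300750.SZ": True}
print(tradable_universe(stocks, st, halt))  # ['600000.SS']
```

Inside Ptrade, `st` and `halt` would come from the `get_stock_status(..., query_type='ST')` and `query_type='HALT'` calls already shown above.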

To be continued.


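Guosheng Ptrade: get_Ashares returns an empty result

Ptrade • 李魔佛 posted an article • 0 comments • 1162 views • 2025-07-04 12:22

In live trading, run the following code:

```python
# author: WeChat official account 可转债量化分析
import datetime


def initialize(context):
    # Initialise the strategy
    pass


def before_trading_start(context, data):
    log.info('盘前函数运行')  # "pre-market function running"
    previous_tradeing_func(context)  # run the pre-market routine


def previous_tradeing_func(context):
    all_stock_set = get_Ashares(date=None)
    print(all_stock_set)


def handle_data(context, data):
    pass
```

Output:

```text
2025-07-04 12:18:10 - INFO - 盘前函数运行
2025-07-04 12:18:10 - INFO - []
```

The result is an empty list...

And this is about as basic as data gets.

I have reported it for years, and it is still the same...

Better to open accounts at several brokers and compare; each has its own strengths.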


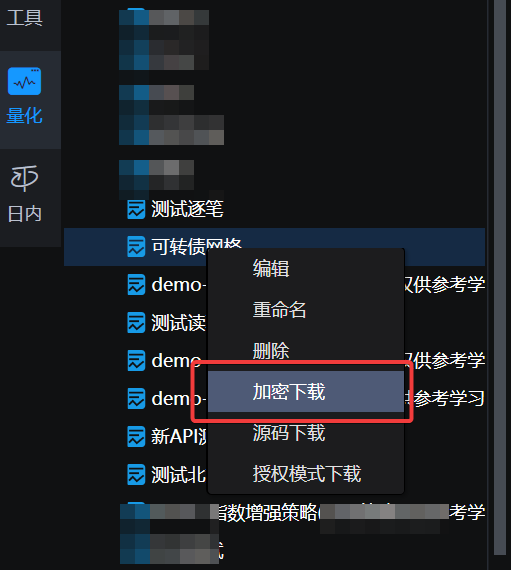

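Who says Ptrade code is insecure and easily leaked?

Ptrade • 李魔佛 posted an article • 0 comments • 1540 views • 2025-07-03 11:52

In fact, Ptrade can now encrypt strategies.

You can download your strategy in encrypted form, delete the original strategy, and then upload the encrypted one.

Once it is uploaded, you can no longer see the code; you can only start or delete the strategy.

Even print output no longer appears in the log;

only buy and sell information is shown.

This greatly improves strategy security.

Readers who need Ptrade can follow the WeChat official account to get in touch:

Low commission, low entry requirements, technical support provided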

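Ptrade log viewer error: The remote server returned an error: (502) Bad Gateway

Ptrade • 李魔佛 posted an article • 0 comments • 1146 views • 2025-06-30 18:59

The error already occurs when this API is accessed.

A 502 is a server-side error and has nothing to do with your local machine.

Sometimes the logs load normally, but most of the time querying them throws the 502 above.

Frustrating. Couldn't they just buy a few more servers...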

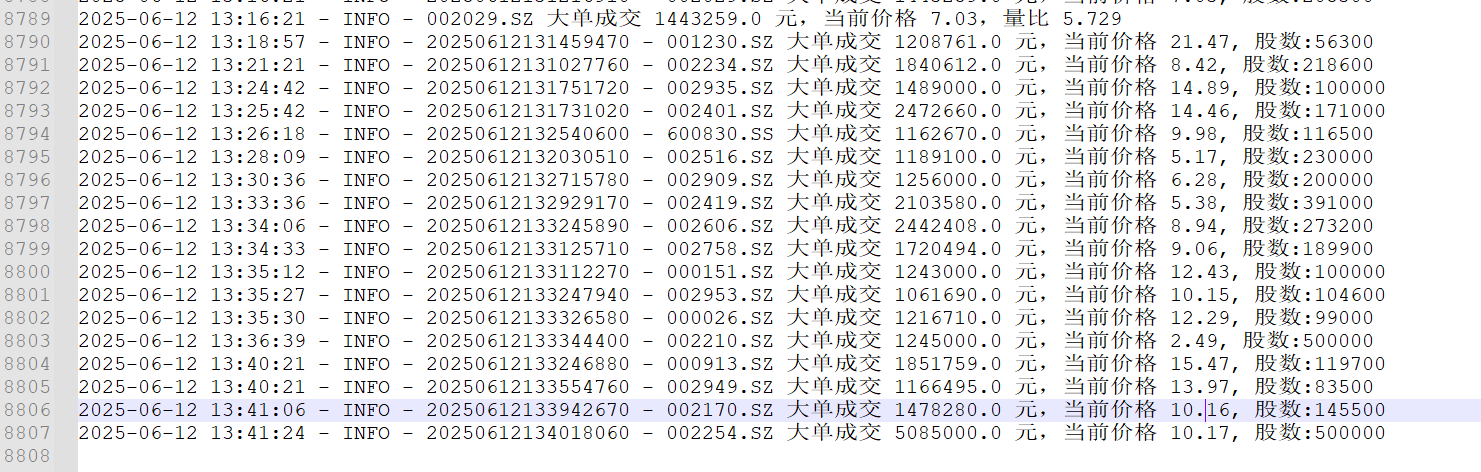

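Why Ptrade's Level-2 tick-by-tick data does not match Tonghuashun's

Ptrade • 李魔佛 posted an article • 0 comments • 1374 views • 2025-06-12 16:40

Later, a member of our group found the cause.

Guotai Haitong supports Ptrade: 1 bp, 5-yuan minimum waived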

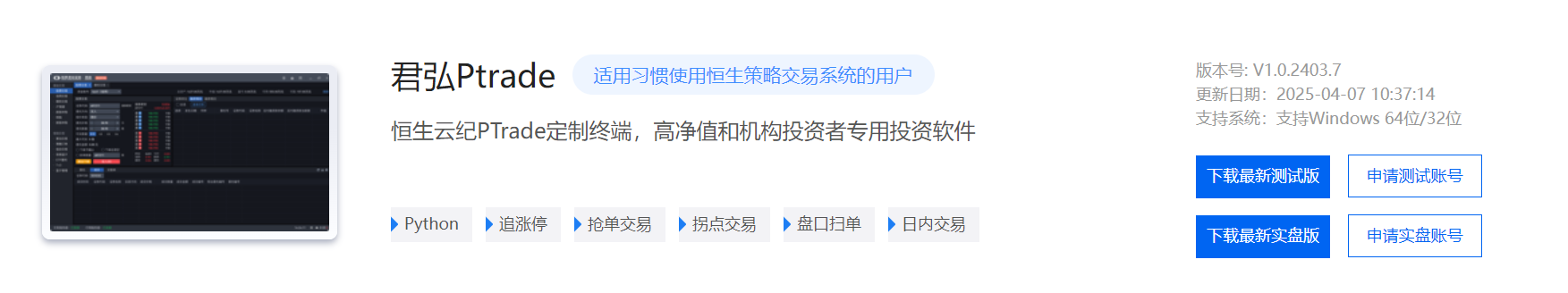

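Ptrade • 李魔佛 posted an article • 0 comments • 1627 views • 2025-06-06 13:33

Supports Ptrade and QMT, but not miniQMT.

Commission can go down to 0.854 bp with the 5-yuan minimum waived, minimum 0.01 yuan per trade.

Live-trading version download link:

https://dl2.app.gtja.com/dzswem/softwareVersion/202504/07/PTrade1.0-Client-V202403-07-005(PTrade).zip

For account opening or a commission adjustment, follow the WeChat official account to get in touch: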


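Getting Level-2 tick-by-tick data in Ptrade, free of charge, provided by the broker

Ptrade • 李魔佛 posted an article • 0 comments • 2478 views • 2025-05-24 00:22

I also discovered that Level-2 tick-by-tick data, intraday tick-by-tick order queues and other high-frequency, millisecond-level data can be used for free.

Ptrade API docs updated: https://ptradeapi.com

Python 3.5 was released in 2015 and Python 3.11 in 2022.

Performance is much better, which matters especially for backtesting and high-frequency strategies.

If your strategy is simple or trades on daily bars, the impact is negligible.

The upgrade is not optional for individual users, though: once your broker upgrades Ptrade, you can only use the upgraded API formats, and old code has to be modified to fit.

Performance comparison (Python 3.5 vs 3.11), generated by Doubao

Below is a performance comparison of some operations in Python 3.5 and 3.11 (for reference only):

The corresponding third-party libraries have been upgraded as well.

The interface change with the biggest impact is in pandas: the pandas version used with Python 3.11 no longer includes the Panel type (Panel was removed from pandas itself).

pandas' Panel type was used to store three-dimensional data.

After the upgrade, the old multi-dimensional quote format is gone. Personally, though, I think things are now more unified and simpler.

Previously, depending on the arguments, you sometimes got a DataFrame and sometimes a Panel, which was rather messy.

Now a DataFrame is always returned (or a dict, if you ask for one).

Affected APIs include get_history, get_price, get_individual_transaction, get_fundamentals and others.

For example, daily historical bars fetched with get_history: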

```python
count = 10
code_list = ['000695.SZ', '001367.SZ']
df = get_history(count, frequency='1d', field=['open', 'close'], security_list=code_list,
                 fq=None, include=False, fill='nan', is_dict=False)
print(type(df))
print(df)
```

The returned DataFrame looks like this:

```text
<class 'pandas.core.frame.DataFrame'>
                 code   open  close
2025-05-15  000695.SZ    NaN    NaN
2025-05-16  000695.SZ    NaN    NaN
2025-05-19  000695.SZ  10.34  10.34
2025-05-20  000695.SZ  11.37  11.37
2025-05-21  000695.SZ  12.51  12.51
2025-05-22  000695.SZ  13.76  13.76
2025-05-09  001367.SZ  29.89  29.58
2025-05-12  001367.SZ  29.70  29.60
2025-05-13  001367.SZ  30.11  29.96
2025-05-14  001367.SZ  29.96  29.83
2025-05-15  001367.SZ  29.80  30.18
2025-05-16  001367.SZ  30.13  30.39
2025-05-19  001367.SZ  30.46  30.01
2025-05-20  001367.SZ  20.25  20.08
2025-05-21  001367.SZ  20.05  20.70
2025-05-22  001367.SZ  20.98  22.77
```

To extract one security, say 001367.SZ, just use `df[df['code']=='001367.SZ']`:

```text
                 code   open  close
2025-05-09  001367.SZ  29.89  29.58
2025-05-12  001367.SZ  29.70  29.60
2025-05-13  001367.SZ  30.11  29.96
2025-05-14  001367.SZ  29.96  29.83
2025-05-15  001367.SZ  29.80  30.18
2025-05-16  001367.SZ  30.13  30.39
2025-05-19  001367.SZ  30.46  30.01
2025-05-20  001367.SZ  20.25  20.08
2025-05-21  001367.SZ  20.05  20.70
2025-05-22  001367.SZ  20.98  22.77
```

Then pull the close or open column out of the DataFrame:

```python
# Close
df[df['code'] == '001367.SZ']['close']

# Open
df[df['code'] == '001367.SZ']['open']
```
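If you want one column per security (for cross-sectional ranking, say), pivoting the long DataFrame is one option. The rows below are a subset copied from the sample output above; note that the two codes do not share the same dates there, and pivoting aligns them on date and fills the gaps with NaN.

```python
import pandas as pd

# Subset of the get_history sample above (long format, 'code' column)
df = pd.DataFrame(
    {"code": ["001367.SZ", "000695.SZ", "001367.SZ"],
     "close": [30.39, 10.34, 30.01]},
    index=pd.to_datetime(["2025-05-16", "2025-05-19", "2025-05-19"]),
)

# Long -> wide: one row per date, one column per code
wide = (df.rename_axis("date")
          .reset_index()
          .pivot(index="date", columns="code", values="close"))
print(wide)
```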

Then use the closing prices to compute MACD:

```python
# Compute the MACD value
def MACD(series):
    ema_short = series.ewm(span=12).mean()
    ema_long = series.ewm(span=26).mean()
    # Fast EMA minus slow EMA, i.e. the DIF line
    macd_series = ema_short - ema_long
    return macd_series.iloc[-1]  # latest value


close_price_series = df[df['code'] == '001367.SZ']['close']
macd_val = MACD(close_price_series)
```
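The `MACD()` above returns only the latest DIF, and it uses pandas' default `adjust=True`, which weights early bars differently from the first-close-seeded recursion that Tonghuashun-style EMAs use. A sketch of the full indicator with `adjust=False` follows. The bar = 2 × (DIF − DEA) scaling is the convention common in Chinese charting software and is an assumption here; drop the factor 2 if you want the raw histogram.

```python
import numpy as np
import pandas as pd


def macd(close, fast=12, slow=26, signal=9):
    """DIF, DEA and bar, with every EMA seeded from its first value (adjust=False)."""
    dif = (close.ewm(span=fast, adjust=False).mean()
           - close.ewm(span=slow, adjust=False).mean())
    dea = dif.ewm(span=signal, adjust=False).mean()
    bar = 2 * (dif - dea)  # assumed charting convention
    return pd.DataFrame({"dif": dif, "dea": dea, "bar": bar})


rng = np.random.default_rng(0)
close = pd.Series(20 + np.cumsum(rng.normal(0, 0.3, 60)))
res = macd(close)
print(res.tail(3))

# For comparison: the post's approach (default adjust=True), last DIF only
dif_default = close.ewm(span=12).mean() - close.ewm(span=26).mean()
print("last DIF, adjust=True vs adjust=False:", dif_default.iloc[-1], res["dif"].iloc[-1])
```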

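get_price, get_individual_transaction and get_fundamentals work the same way.

get_history also gains an is_dict parameter. It defaults to False, which returns a DataFrame; set it to True to get a dict instead.

It returns an ordered dict (OrderedDict) like this (some type information omitted):

```text
{'001367.SZ': array([(20250509, 29.89, 29.58), (20250512, 29.7 , 29.6 ),
                     (20250513, 30.11, 29.96), (20250514, 29.96, 29.83),
                     (20250515, 29.8 , 30.18), (20250516, 30.13, 30.39),
                     (20250519, 30.46, 30.01), (20250520, 20.25, 20.08),
                     (20250521, 20.05, 20.7 ), (20250522, 20.98, 22.77)]
}
```

In this array form, though, extracting data feels less convenient and intuitive than with a DataFrame, since values are pulled out by index.

However, the official site says returning a dict is much faster than returning a DataFrame or Panel, so if speed matters, it recommends reading data in this format with is_dict=True.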
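If the arrays in that dict are NumPy structured arrays, as the `array([(…), …])` output suggests, you can index columns by field name instead of by position. The field names below are assumptions (the post omits the dtype), so check `arr.dtype.names` on real data first; the values are copied from the sample above.

```python
from collections import OrderedDict

import numpy as np

# Assumed field names; inspect arr.dtype.names on a real is_dict=True result
dtype = [("datetime", "i8"), ("open", "f8"), ("close", "f8")]
data = OrderedDict()
data["001367.SZ"] = np.array(
    [(20250520, 20.25, 20.08), (20250521, 20.05, 20.70), (20250522, 20.98, 22.77)],
    dtype=dtype,
)

arr = data["001367.SZ"]
closes = arr["close"]   # whole column by field name
last = arr[-1]          # one record; fields are readable by name too
print(arr.dtype.names, closes, last["close"])
```

If you later need a DataFrame after all, `pd.DataFrame(arr)` converts a structured array directly, with one column per field.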

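Getting L2 tick-by-tick data

L2 tick-by-tick order (entrust) function:

```python
get_individual_entrust(stocks=None, data_count=50, start_pos=0, search_direction=1, is_dict=False)
```

Parameters:

data_count: number of records, default 50, maximum 200 (int);

start_pos: starting position, default 0 (int);

Although each call returns at most 200 records, you can page through all of them by moving the start_pos cursor.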
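A sketch of that paging loop. How Ptrade interprets `start_pos` together with `search_direction` is not spelled out in the post, so treating `start_pos` as a plain record offset and stopping on a short page are assumptions to verify against the docs. The fetcher is passed in as a function so the loop runs offline against fake data.

```python
def fetch_all(fetch_page, page_size=200):
    """Collect all records by advancing start_pos one page at a time.

    fetch_page(start_pos, data_count) must return a list of records.
    Assumes start_pos is a record offset and a short page means no more data.
    """
    records, start = [], 0
    while True:
        page = fetch_page(start, page_size)
        records.extend(page)
        if len(page) < page_size:  # short or empty page: nothing left
            return records
        start += len(page)


# Offline stand-in with 450 fake records
FAKE_ROWS = list(range(450))


def fake_fetch(start_pos, data_count):
    return FAKE_ROWS[start_pos:start_pos + data_count]


rows = fetch_all(fake_fetch)
print(len(rows))  # 450
```

In Ptrade the fetcher could be a wrapper along the lines of `lambda s, n: get_individual_entrust(['001367.SZ'], data_count=n, start_pos=s).to_dict('records')`; this is hypothetical and untested against Ptrade.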

Example:

Get the latest tick-by-tick orders for 001367.SZ while it was sealed at limit-up.

```python
code_list = ['001367.SZ']
df = get_individual_entrust(code_list)
print(df[['business_time', 'hq_px', 'business_amount', 'order_no']])
```

The output is below. There are too many columns, so for readability only 4 are shown:

timestamp, order price, order quantity, order number

Since the market had already closed, this returns the 50 records going backwards from 14:59:57.020.

```text
business_time      hq_px  business_amount  order_no
20250523145702150  24.60              100  32493957
20250523145714840  24.95              100  32505581
20250523145718430  25.05             1000  32509041
20250523145719840  25.05             1000  32510350
20250523145721750  25.05              400  32512000
20250523145727610  25.05              200  32517007
20250523145730270  25.05              400  32519085
20250523145730330  25.04             1600  32519133
20250523145732330  25.05             2900  32520779
20250523145740780  25.05              500  32527204
20250523145743550  25.05              400  32529290
20250523145743810  25.05              200  32529474
20250523145749460  25.05             4300  32533295
20250523145749910  25.05             1000  32533630
20250523145749960  25.05              300  32533666
# ... rows omitted ...
20250523145926790  25.05              100  32589496
20250523145927480  25.05             1200  32589844
20250523145936090  25.05              200  32594134
20250523145946600  25.05              300  32601758
20250523145946800  25.05            49100  32601865
20250523145950800  25.05            36800  32607381
20250523145953780  25.05            55200  32611265
20250523145954240  25.05              500  32611713
20250523145956830  25.05            55300  32614479
20250523145957020  25.04             8500  32614683
```

As you can see, some single seconds contain several orders, each with its own quantity and order number.
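The 17-digit `business_time` packs the date and a millisecond time together. Judging from the sample, the layout is YYYYMMDDHHMMSSmmm; that is an inference, not a documented format. Below is a small standard-library sketch, with hypothetical helper names, that turns it into a `datetime` and counts orders per second. With a DataFrame you would apply it to `df['business_time']`.

```python
from collections import Counter
from datetime import datetime

def parse_business_time(ts):
    """Convert a 17-digit YYYYMMDDHHMMSSmmm timestamp into a datetime."""
    # %f takes 1-6 digits and pads on the right, so '020' becomes 20000 microseconds (20 ms)
    return datetime.strptime(str(ts), "%Y%m%d%H%M%S%f")

def orders_per_second(business_times):
    """Count orders per whole second (the key keeps YYYYMMDDHHMMSS)."""
    return Counter(int(ts) // 1000 for ts in business_times)

# Five timestamps copied from the entrust output above
sample = [20250523145743550, 20250523145743810, 20250523145749460,
          20250523145749910, 20250523145749960]
print(parse_business_time(20250523145957020))   # 2025-05-23 14:59:57.020000
print(orders_per_second(sample).most_common(1))  # [(20250523145749, 3)]
```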

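Tick-by-tick trades

The L2 tick-by-tick trade function is used the same way but returns different fields. Each trade carries a direction, so a program can work out money inflow or outflow.

get_individual_transaction(stocks=None, data_count=50, start_pos=0, search_direction=1, is_dict=False)

For the same stock, the data looks like this: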

```
business_time      hq_px  business_amount  business_direction
20250523145648440  0.00   2500             1
20250523145648970  0.00   3200             1
20250523145649300  0.00   100              1
20250523145649370  0.00   900              1
20250523145649880  0.00   1800             1
20250523145650150  0.00   800              1
20250523145650360  0.00   200              1
20250523145650400  0.00   500              1
20250523145650420  0.00   100              1
20250523145652050  0.00   800              1
# ... rows omitted ...
20250523145659220  0.00   24100            1
20250523145659550  0.00   700              1
20250523145659600  0.00   400              1
20250523150000000  25.05  100              0
20250523150000000  25.05  100              0
20250523150000000  25.05  1600             0
20250523150000000  25.05  800              0
20250523150000000  25.05  1730             0
20250523150000000  25.05  670              0
20250523150000000  25.05  2430             0
20250523150000000  25.05  1200             0
20250523150000000  25.05  9270             0
20250523150000000  25.05  148              0
20250523150000000  25.05  100              0
20250523150000000  25.05  200              0
20250523150000000  25.05  1000             0
20250523150000000  25.05  2000             0
```

business_direction is the trade direction: 0 means sell and 1 means buy. This tells you whether the money in each trade flowed out or in.
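Here is a minimal money-flow tally using the mapping stated above (1 = buy/inflow, 0 = sell/outflow). `money_flow` is a hypothetical helper.

One caveat: the rows priced 0.00 between 14:56:48 and 14:56:59 have no counterpart in the `get_tick_direction` output shown in the next section. They are therefore probably not real fills; on SZSE, cancellations are published in the tick-by-tick trade stream with a zero price. This sketch skips zero-price rows by default, so treat that as an assumption.

```python
def money_flow(trades, skip_zero_price=True):
    """Tally traded value by direction and return (inflow, outflow, net).

    trades: iterable of (hq_px, business_amount, business_direction).
    Assumed mapping (from the text): 1 = buy -> inflow, 0 = sell -> outflow.
    Zero-price rows are skipped by default (assumed not to be real fills).
    """
    inflow = outflow = 0.0
    for price, amount, direction in trades:
        if skip_zero_price and price == 0:
            continue
        value = price * amount
        if direction == 1:
            inflow += value
        elif direction == 0:
            outflow += value
    return inflow, outflow, inflow - outflow

# A few rows copied from the output above
sample = [(0.00, 2500, 1), (0.00, 3200, 1), (25.05, 100, 0), (25.05, 1600, 0)]
print(money_flow(sample))
```

On a DataFrame, something like `money_flow(df[['hq_px', 'business_amount', 'business_direction']].itertuples(index=False))` would feed it row tuples.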

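Getting time-and-sales data

get_tick_direction(symbols=None, query_date=0, start_pos=0, search_direction=1, data_count=50, is_dict=False)

For a given moment, today or in the past, this time-and-sales function returns the total volume traded at that timestamp and how many individual trades made it up.

Take the limit-up stock 001367.SZ again: at the very last moment, 15:00, 21,348 shares changed hands in 14 trades.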

```
time_stamp         hq_px  business_amount  business_count
20250523143721000  25.05  100              1
20250523143724000  25.05  200              1
20250523143745000  25.05  444              1
20250523143809000  25.05  10000            2
20250523144024000  25.05  100              1
20250523144048000  25.05  1500             1
20250523144106000  25.05  300              1
20250523144112000  25.05  11692            2
20250523144124000  25.05  100              1
20250523144142000  25.05  100              1
20250523144157000  25.05  100              1
20250523144200000  25.05  2360             1
# ... rows omitted ...
20250523145415000  25.05  1381             1
20250523145421000  25.05  480              1
20250523145439000  25.05  100              1
20250523145451000  25.05  1753             1
20250523145521000  25.05  5384             3
20250523145539000  25.05  3600             2
20250523145557000  25.05  1000             1
20250523145609000  25.05  400              2
20250523145615000  25.05  100              1
20250523150000000  25.05  21348            14
```
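The last row can be cross-checked against the tick-by-tick trades shown earlier. The fourteen 15:00:00.000 trades there add up to 21,348 shares, which matches `business_amount=21348, business_count=14`.

Below is a sketch of that aggregation with a hypothetical helper, `aggregate_by_second`. It assumes `get_tick_direction` buckets by whole seconds, since all its sample timestamps end in 000. Rows priced 0.00 in the trade output, which do not appear here, should probably be filtered out before aggregating.

```python
from collections import OrderedDict

def aggregate_by_second(trades, bucket_ms=1000):
    """Rebuild {bucket_timestamp: (total_amount, trade_count)} from tick-by-tick trades.

    trades: iterable of (business_time, business_amount), business_time as YYYYMMDDHHMMSSmmm.
    bucket_ms=1000 assumes get_tick_direction groups by whole seconds.
    """
    buckets = OrderedDict()
    for ts, amount in trades:
        key = int(ts) // bucket_ms * bucket_ms  # e.g. ...145957020 -> ...145957000
        total, count = buckets.get(key, (0, 0))
        buckets[key] = (total + amount, count + 1)
    return buckets

# The fourteen 15:00:00.000 trades from the tick-by-tick trade output
closing = [100, 100, 1600, 800, 1730, 670, 2430, 1200, 9270, 148, 100, 200, 1000, 2000]
result = aggregate_by_second((20250523150000000, a) for a in closing)
print(result[20250523150000000])  # (21348, 14)
```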

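get_gear_price: getting the current order queue

This function is meant to return the prices and order volumes for bid/ask levels 1 to 10. It turns out it can also return the individual order queue at level 1.

Suppose a stock is at limit-up and you place an 8,888-share buy order to queue at the limit price. This function then returns the queue at bid level 1 (the first 50 orders). In the data below, your 8,888 shares are 9th in the queue, and you can see the size of each order ahead of and behind yours.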

```
{'bid_grp': {1: [13.68, 15225900, 4905, {1: 33200, 2: 104800, 3: 1000, 4: 100, 5: 51800, 6: 6300, 7: 777800, 8: 184000, 9: 8888, 10: 100,
                                         11: 600, 12: 141800, 13: 100, 14: 141800, 15: 2700, 16: 900, 17: 58600, 18: 2900, 19: 800, 20: 133800,
                                         21: 2800, 22: 900, 23: 2900, 24: 800, 25: 400, 26: 400, 27: 48200, 28: 51800, 29: 99400, 30: 26600,
                                         31: 81800, 32: 493000, 33: 427300, 34: 27700, 35: 2800, 36: 460500, 37: 170200, 38: 166500, 39: 24100, 40: 800,
                                         41: 29300, 42: 500, 43: 500, 44: 500, 45: 2800, 46: 1300, 47: 61700, 48: 27000, 49: 500, 50: 78900}],
             2: [13.67, 30200, 32],
             3: [13.66, 11100, 16],
             4: [13.65, 134400, 11],
             5: [13.64, 8500, 7],
             6: [13.63, 1000, 3],
             7: [13.62, 200, 2],
             8: [13.6, 7300, 17],
             9: [13.59, 600, 2],
             10: [13.58, 10700, 7]},
 # At limit-up the ask side is empty: any sell order is filled at once, so there is no ask queue
 'offer_grp': {1: [0.0, 0, 0, {}],
               2: [0.0, 0, 0],
               3: [0.0, 0, 0],
               4: [0.0, 0, 0],
               5: [0.0, 0, 0],
               6: [0.0, 0, 0],
               7: [0.0, 0, 0],
               8: [0.0, 0, 0],
               9: [0.0, 0, 0],
               10: [0.0, 0, 0]}
}
```
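Given that structure, finding your own order in the level-1 queue takes a short loop. This is a sketch with a hypothetical helper, `queue_position`, and it rests on several assumptions:

- Each level is `[price, total_volume, order_count, {rank: quantity}]`. This is inferred from the printout, not documented here.
- Your order is identified by its size. That is only a heuristic: if several queued orders share the same size, the first one is returned.
- Only the first 50 orders are visible, so an order further back will not be found.

```python
def queue_position(level, my_qty):
    """Return (rank, shares_ahead) of the first queued order whose size equals my_qty.

    level: assumed [price, total_volume, order_count, {rank: qty}]; levels 2-10 have no queue.
    If no match is found, rank is None and shares_ahead is the total of the visible queue.
    """
    queue = level[3] if len(level) > 3 else {}
    shares_ahead = 0
    for rank in sorted(queue):
        qty = queue[rank]
        if qty == my_qty:
            return rank, shares_ahead
        shares_ahead += qty
    return None, shares_ahead

# The first ten queue entries of bid level 1, copied from the printout above
level1 = [13.68, 15225900, 4905, {1: 33200, 2: 104800, 3: 1000, 4: 100, 5: 51800,
                                  6: 6300, 7: 777800, 8: 184000, 9: 8888, 10: 100}]
print(queue_position(level1, 8888))  # (9, 1159000)
```

On the full dict, the level-1 entry would be `data['bid_grp'][1]`.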

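So besides the Python upgrade, this Ptrade release also brings finer-grained data, which helps with developing high-frequency strategies.

There are more new functions, which I'll cover in the next update. Remember to like, follow and bookmark!

To be continued...

Follow the WeChat official account: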

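What's more, I found that L2 tick-by-tick data, the intraday order queue and other high-frequency, millisecond-level data are available for free.

The Ptrade API documentation has been updated: https://ptradeapi.com

Python 3.5 was released in 2015 and Python 3.11 in 2022.

Performance has improved considerably, especially for backtesting and high-frequency strategies.

If your strategy is simple or trades on daily bars, the impact is negligible.

The upgrade isn't optional, though. Once your broker upgrades Ptrade, you can only use the upgraded interface format, and old code has to be modified to fit.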

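Performance comparison (Python 3.5 vs 3.11), by Doubao (豆包)

Below is a comparison of some operations in Python 3.5 and 3.11 (for reference only):

The bundled third-party libraries have been upgraded accordingly.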

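The interface change with the biggest impact comes from pandas: the pandas version used with Python 3.11 no longer has the Panel type (Panel was removed in pandas 1.0).

A pandas Panel held three-dimensional data.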

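So after the upgrade, the old multi-dimensional market-data format is gone. Personally, I find the new behavior more uniform and simpler.

Previously, depending on the arguments, you got a DataFrame in some cases and a Panel in others, which was confusing.

Now a DataFrame is always returned (or a dict, if you ask for one).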
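Code written for the old Panel return value can sometimes be bridged instead of rewritten everywhere. This is a hypothetical compatibility shim, `panel_like`. It assumes the old code indexed the Panel by field first, e.g. `panel['close']` giving dates as rows and codes as columns, so check how your own code used it. The sample rows are copied from the `get_history` output in this article.

```python
import pandas as pd

def panel_like(df, fields=None):
    """Rebuild {field: DataFrame(dates x codes)} from the long-format frame.

    Old code written as panel['close'][code] could read shim['close'][code] instead.
    """
    if fields is None:
        fields = [c for c in df.columns if c != 'code']
    # Move 'code' into the index next to the date, then pivot it into columns
    stacked = df.set_index('code', append=True)
    return {field: stacked[field].unstack('code') for field in fields}

sample = pd.DataFrame(
    {'code': ['000695.SZ', '001367.SZ', '001367.SZ'],
     'open': [10.34, 29.70, 30.46],
     'close': [10.34, 29.60, 30.01]},
    index=pd.to_datetime(['2025-05-19', '2025-05-12', '2025-05-19']),
)
shim = panel_like(sample)
print(shim['close'])  # NaN where a code has no bar on that date
```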

Affected APIs include get_history, get_price, get_individual_transaction, get_fundamentals and others.

For example, daily bars fetched with get_history:

```python
count = 10
code_list = ['000695.SZ','001367.SZ']
df = get_history(count, frequency='1d', field=['open','close'], security_list=code_list, fq=None, include=False, fill='nan', is_dict=False)
print(type(df))
print(df)
```

The returned DataFrame looks like this:

```
<class 'pandas.core.frame.DataFrame'>
           code       open   close
2025-05-15 000695.SZ    NaN    NaN
2025-05-16 000695.SZ    NaN    NaN
2025-05-19 000695.SZ  10.34  10.34
2025-05-20 000695.SZ  11.37  11.37
2025-05-21 000695.SZ  12.51  12.51
2025-05-22 000695.SZ  13.76  13.76
2025-05-09 001367.SZ  29.89  29.58
2025-05-12 001367.SZ  29.70  29.60
2025-05-13 001367.SZ  30.11  29.96
2025-05-14 001367.SZ  29.96  29.83
2025-05-15 001367.SZ  29.80  30.18
2025-05-16 001367.SZ  30.13  30.39
2025-05-19 001367.SZ  30.46  30.01
2025-05-20 001367.SZ  20.25  20.08
2025-05-21 001367.SZ  20.05  20.70
2025-05-22 001367.SZ  20.98  22.77
```

To extract the data for 000695.SZ, all you need is `df[df['code']=='000695.SZ']`.


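Ptrade's Python version has been upgraded to 3.11, and some return formats changed too. Speechless.

Ptrade • 李魔佛 published an article • 0 comments • 1597 views • 2025-05-07 14:22

Returned data can now be either a DataFrame or a dict.

Under Python 3.5, depending on the arguments, you got either a DataFrame or a Panel.

The new functions only return DataFrames, which is a good thing; old code just has to be changed to stay compatible.

And this is not the only place that needs changes.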


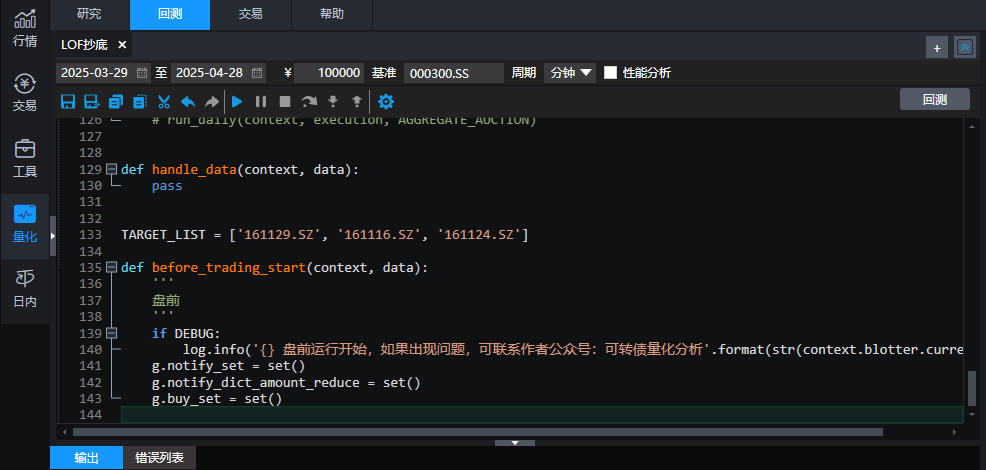

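Ptrade LOF limit-down bottom-fishing T+0 program

Ptrade • 李魔佛 published an article • 0 comments • 1576 views • 2025-04-29 09:02

$Gold Theme LOF(SZ161116)$

$HK Small-Cap LOF(SZ161124)$

An idea came to me over breakfast, so I quickly wrote a Ptrade auto-trading program. It watches for limit-down, buys once the sell orders sealing the limit-down price drop below X, then sells for a profit on the rebound, T+0. That way I don't have to watch the screen to buy the dip.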
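The original program isn't shown, so what follows is only a hypothetical reconstruction of the decision rule in that sentence, kept free of Ptrade calls. It leaves open where the limit-down price and the sealing sell volume come from (for example a snapshot or the order book). `seal_threshold` stands for "X", and it and `take_profit_pct` are placeholders, not values from the original.

```python
def lof_signal(position, cost, last_px, limit_down_px, limit_down_sell_qty,
               seal_threshold, take_profit_pct=0.02):
    """Return 'buy', 'sell' or None for a limit-down bottom-fishing T+0 loop.

    position / cost: current holding (shares) and average cost.
    limit_down_sell_qty: size of the sell orders sealing the limit-down price.
    seal_threshold ("X" in the text) and take_profit_pct are hypothetical parameters.
    """
    at_limit_down = abs(last_px - limit_down_px) < 1e-6
    if position == 0:
        # Buy while the price is pinned at limit-down but the sealing sell queue has thinned
        if at_limit_down and limit_down_sell_qty < seal_threshold:
            return 'buy'
    elif last_px >= cost * (1 + take_profit_pct):
        # Holding: take profit once the rebound clears the target
        return 'sell'
    return None

print(lof_signal(0, 0.0, 1.000, 1.000, 5000, 10000))    # buy
print(lof_signal(1000, 1.000, 1.030, 1.000, 0, 10000))  # sell
```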


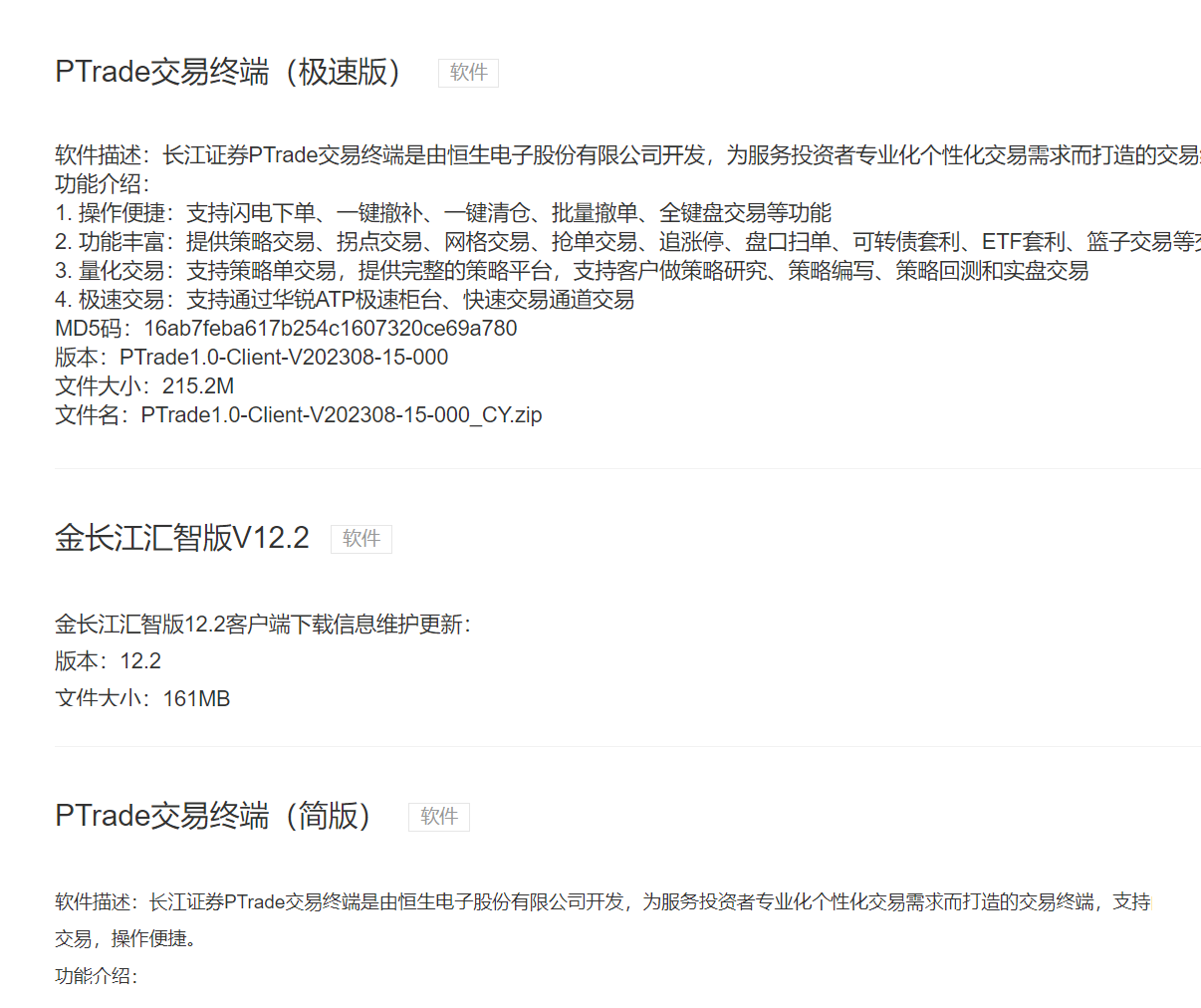

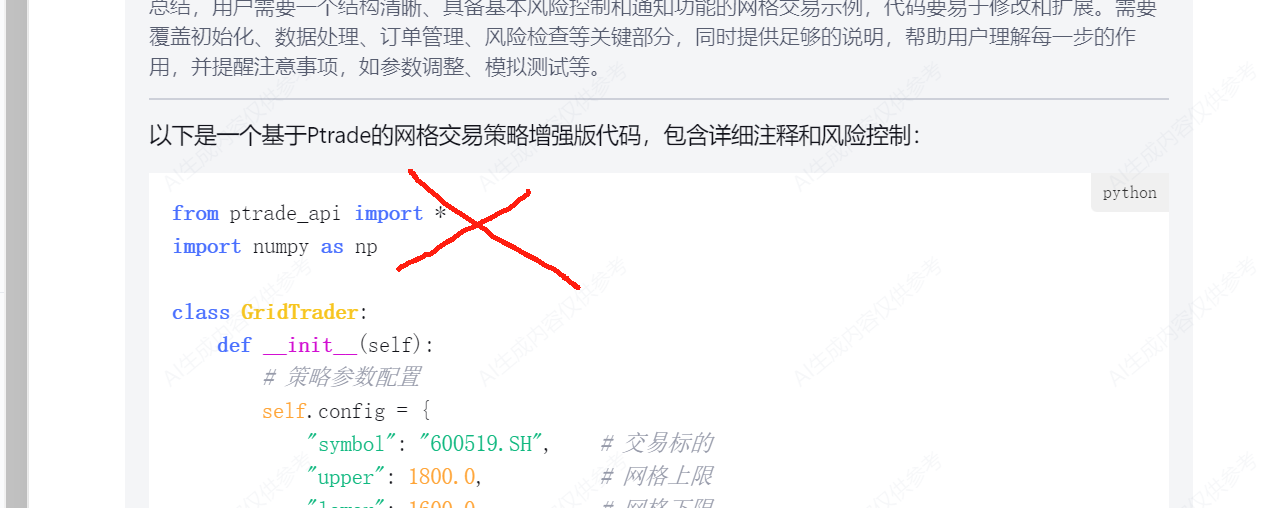

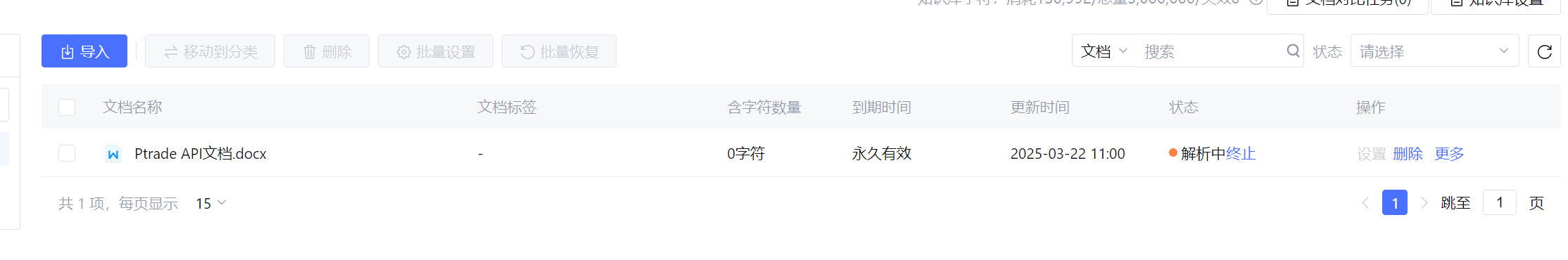

Deploying a Ptrade Deepseek AI coding assistant

Ptrade • 李魔佛 published an article • 0 comments • 2956 views • 2025-03-22 19:19

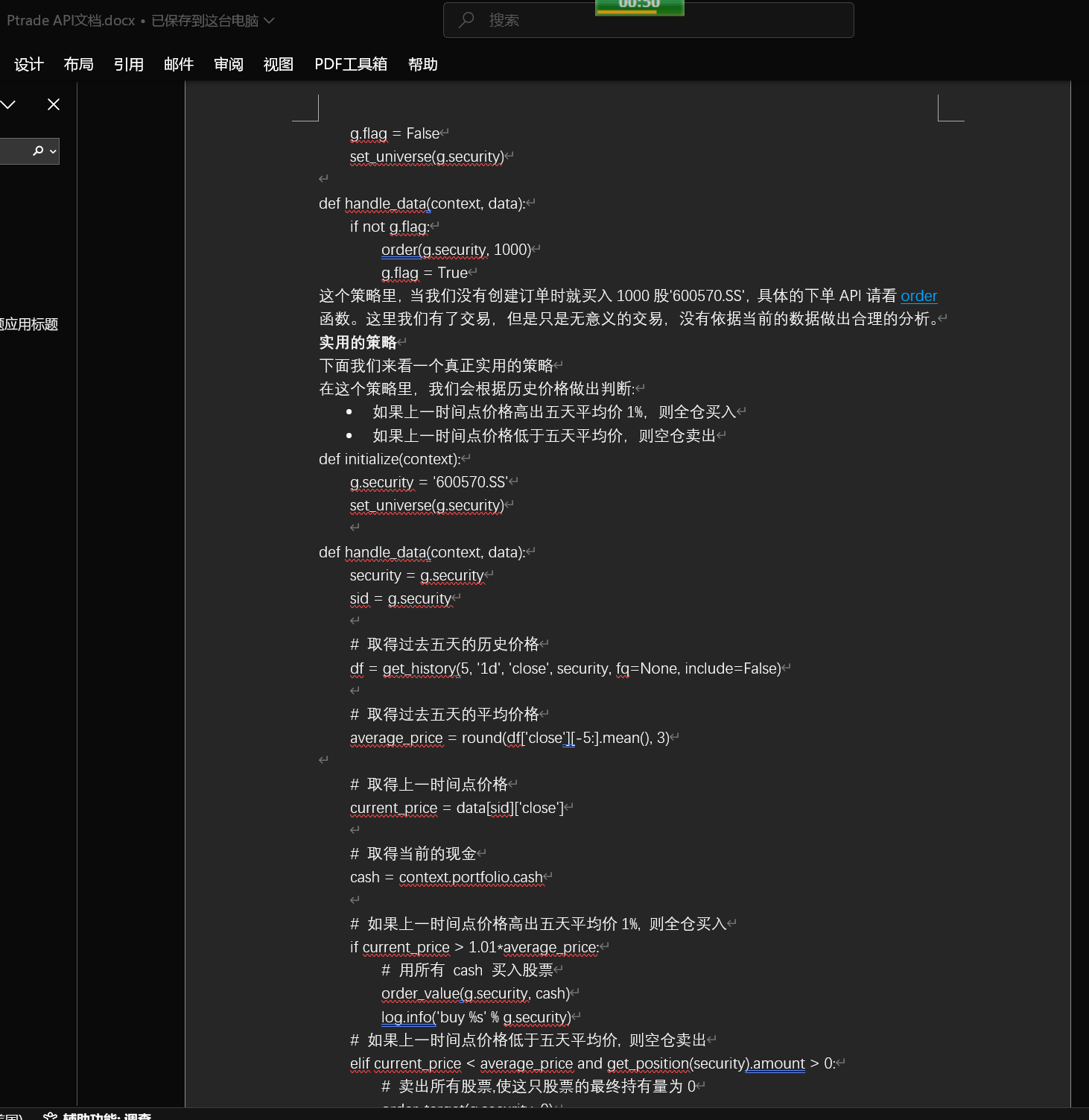

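(First line: there is no such library as ptrade_api.)

A big reason is that low-quality content on the internet pollutes Deepseek's results: lots of irrelevant ads and junk end up among the material Deepseek draws on. As the saying goes, when three people claim there's a tiger, people believe it. If the three websites Deepseek consults all say the cat in a picture is a tiger, it will really believe it is a tiger.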

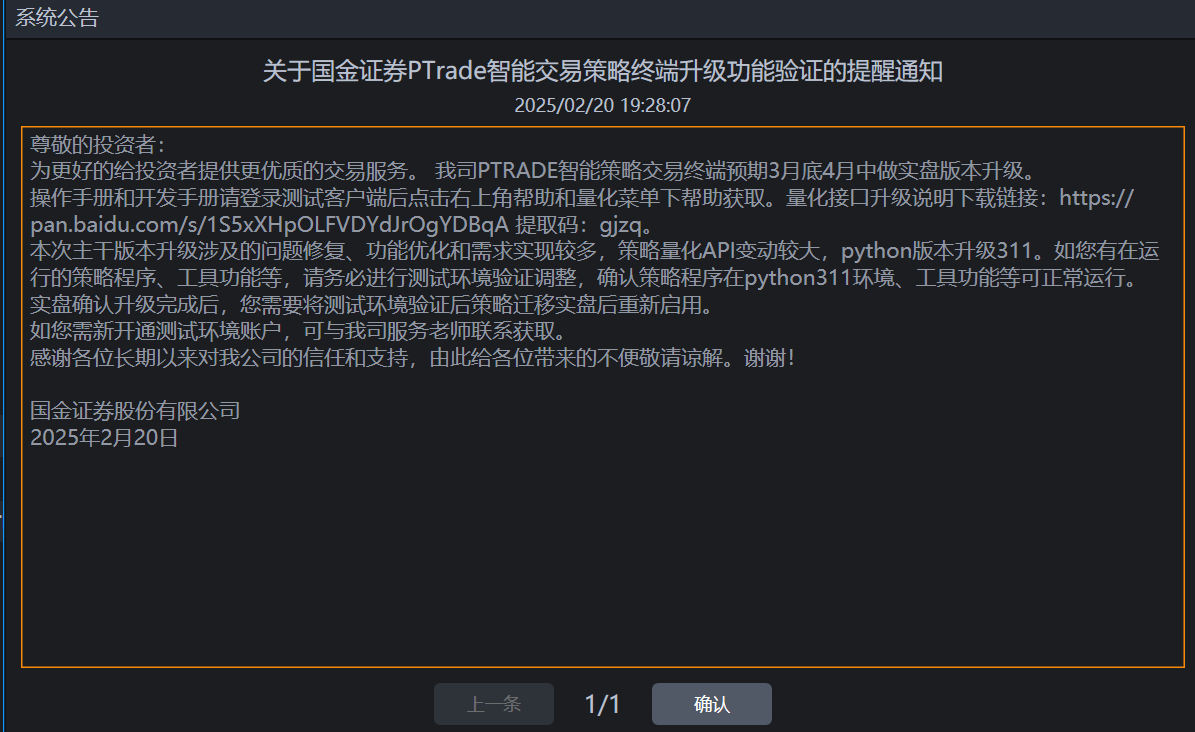

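Instead, we can hand the API documentation to Deepseek as a knowledge base and let it learn from it before it writes Ptrade quant programs for us. The generated code is far more complete, and sometimes runs with hardly any changes.

This article walks you step by step through deploying your own AI coding assistant for quant trading.

No high-end hardware is needed, and it costs nothing.

Screenshot of the result:

Once again we'll use the Deepseek model hosted on Tencent Cloud.

Open: https://lke.cloud.tencent.com/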

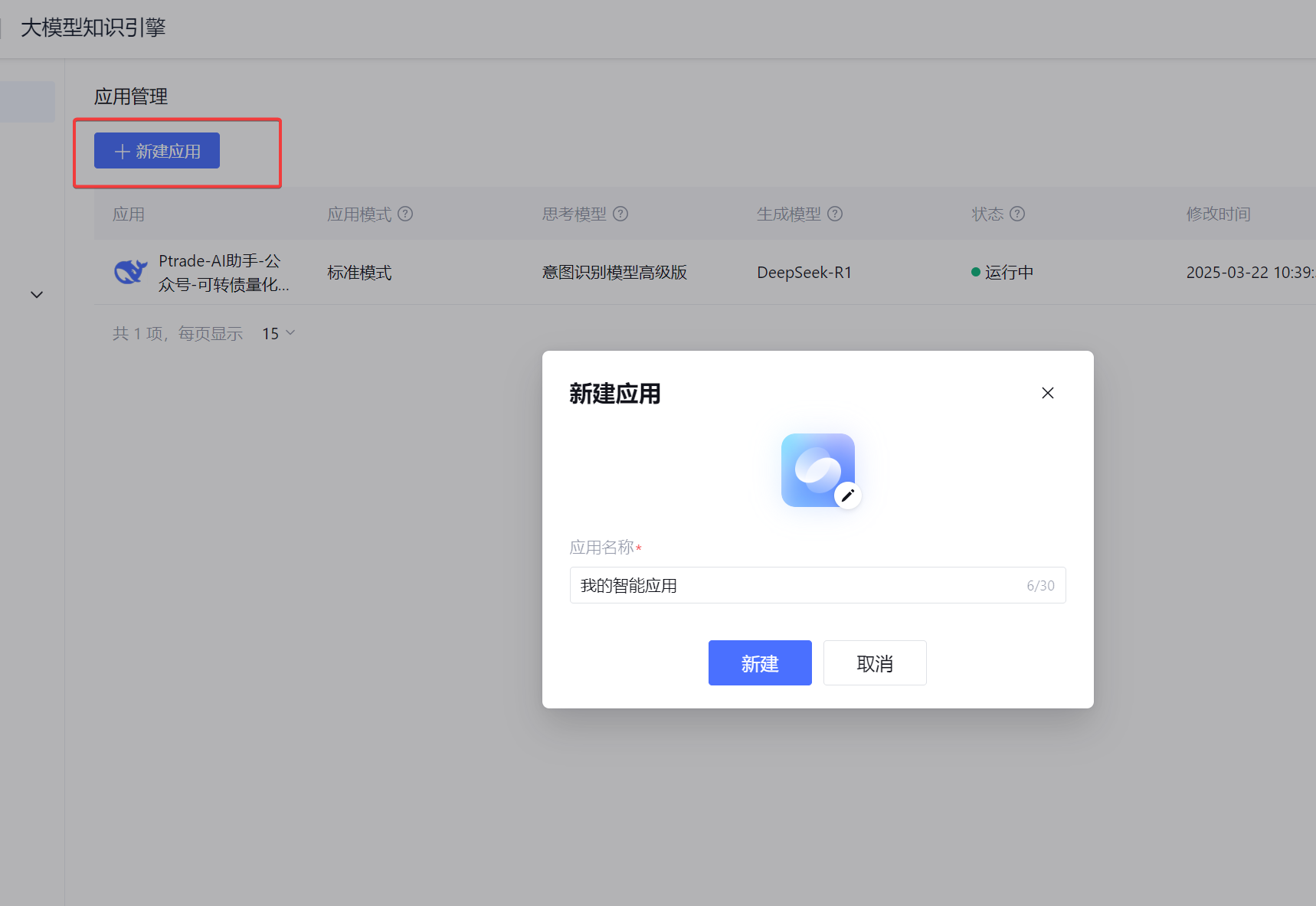

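Click the LLM Knowledge Engine (大模型知识引擎) and activate it if you haven't already. Individuals get a free token quota, so there's no charge. Once inside, click "New Application"; the name can be anything.

For the model, choose Deepseek-R1 or Deepseek-V3.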

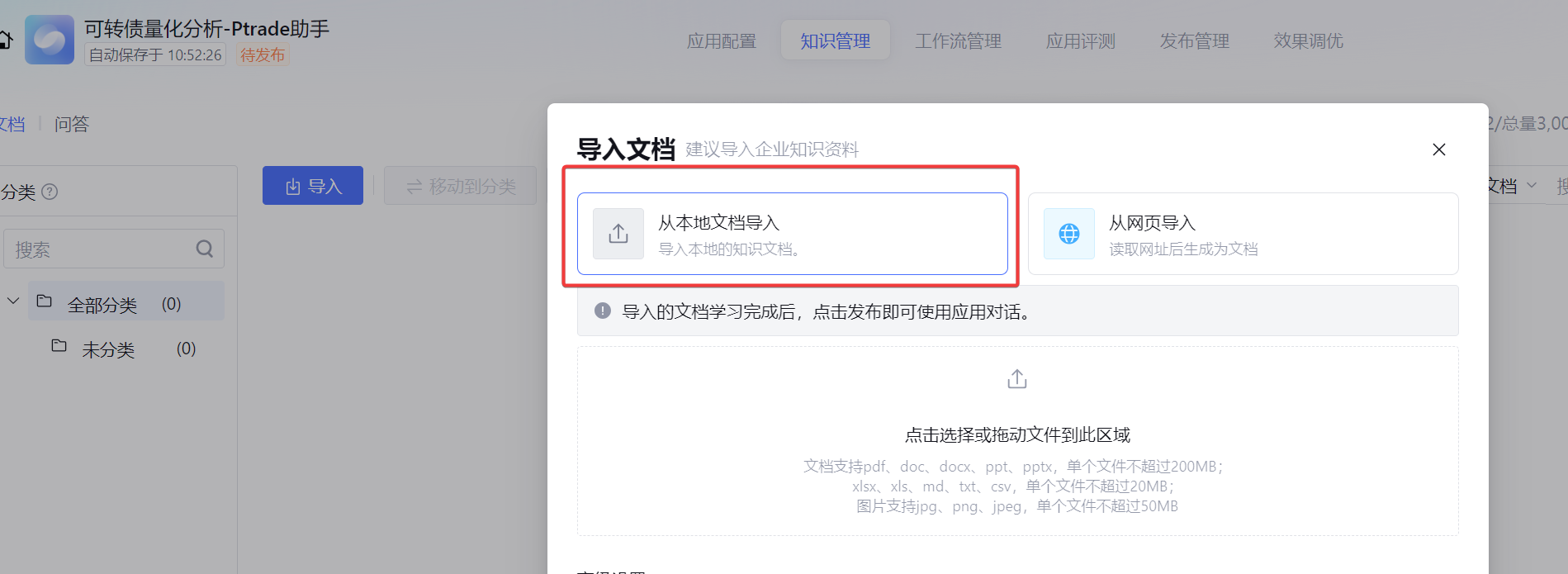

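It supports importing from local documents and from web URLs.

The API documentation URL we use is: https://ptradeapi.com/

However, I found its URL import too weak: it only picked up the page titles, not the content.

So I had to select all the content on the site by hand, copy it and save it into a local Word document.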

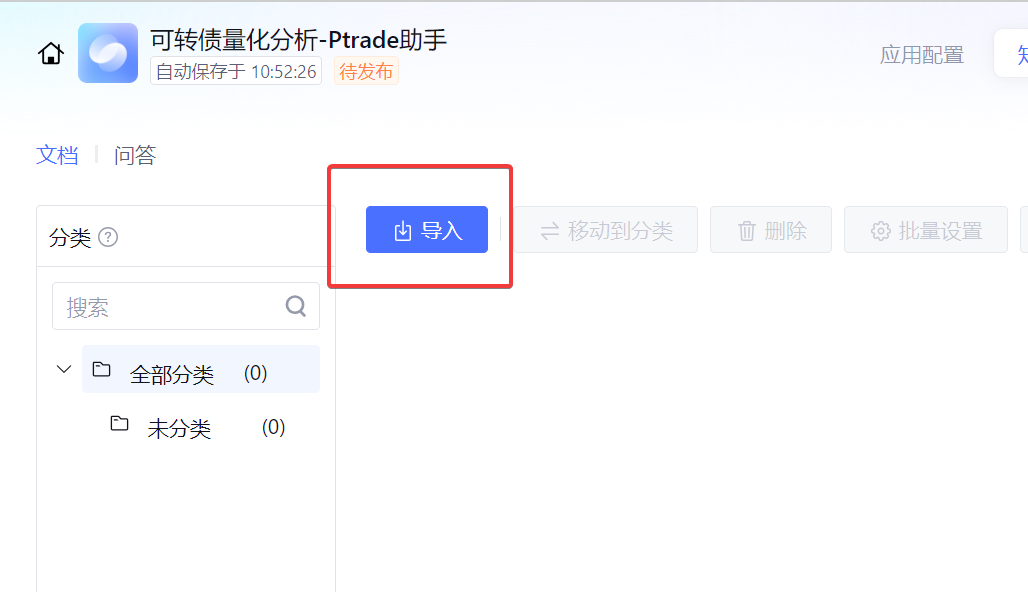

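Then click Import Document.

Parsing and content review take a few minutes. Because a personal knowledge base like this can be published for public use, its Q&A content is reviewed.

The knowledge base has a character limit: the free tier allows about 3 million characters. The Ptrade API documentation used here takes about 260,000, so there's room to upload more of your own code.

Once the knowledge base status changes to "Pending release", click the "Publish" button.

Then go back to the application configuration, where you can add some constraints to the prompt.

For example, at the time of writing Ptrade runs Python 3.5. Without a constraint, the generated code may use newer syntax such as f-strings, which Ptrade will reject.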

```python
f'{name}'          # wrong: f-strings need Python 3.6+
'{}'.format(name)  # right: also works on Python 3.5
```

Without this constraint, the AI-generated code will very likely fail in Ptrade.
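Even with the prompt constraint, it is worth screening generated code before pasting it into a Python 3.5 Ptrade. This is a minimal sketch using the standard `ast` module, meant to run on your own machine with Python 3.6 or newer (parsing f-strings needs 3.6+). Every f-string parses to an `ast.JoinedStr` node, so reporting those nodes' line numbers flags them. It only catches f-strings; other post-3.5 syntax, such as variable annotations or underscores in numeric literals, would need extra checks.

```python
import ast

def find_fstrings(source):
    """Return the sorted line numbers of f-strings (ast.JoinedStr nodes) in source."""
    tree = ast.parse(source)
    return sorted({node.lineno for node in ast.walk(tree) if isinstance(node, ast.JoinedStr)})

generated = "name = 'grid'\nmsg1 = f'{name}'\nmsg2 = '{}'.format(name)\n"
print(find_fstrings(generated))  # [2]
```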

With that set up, your own personal Ptrade coding assistant is ready.

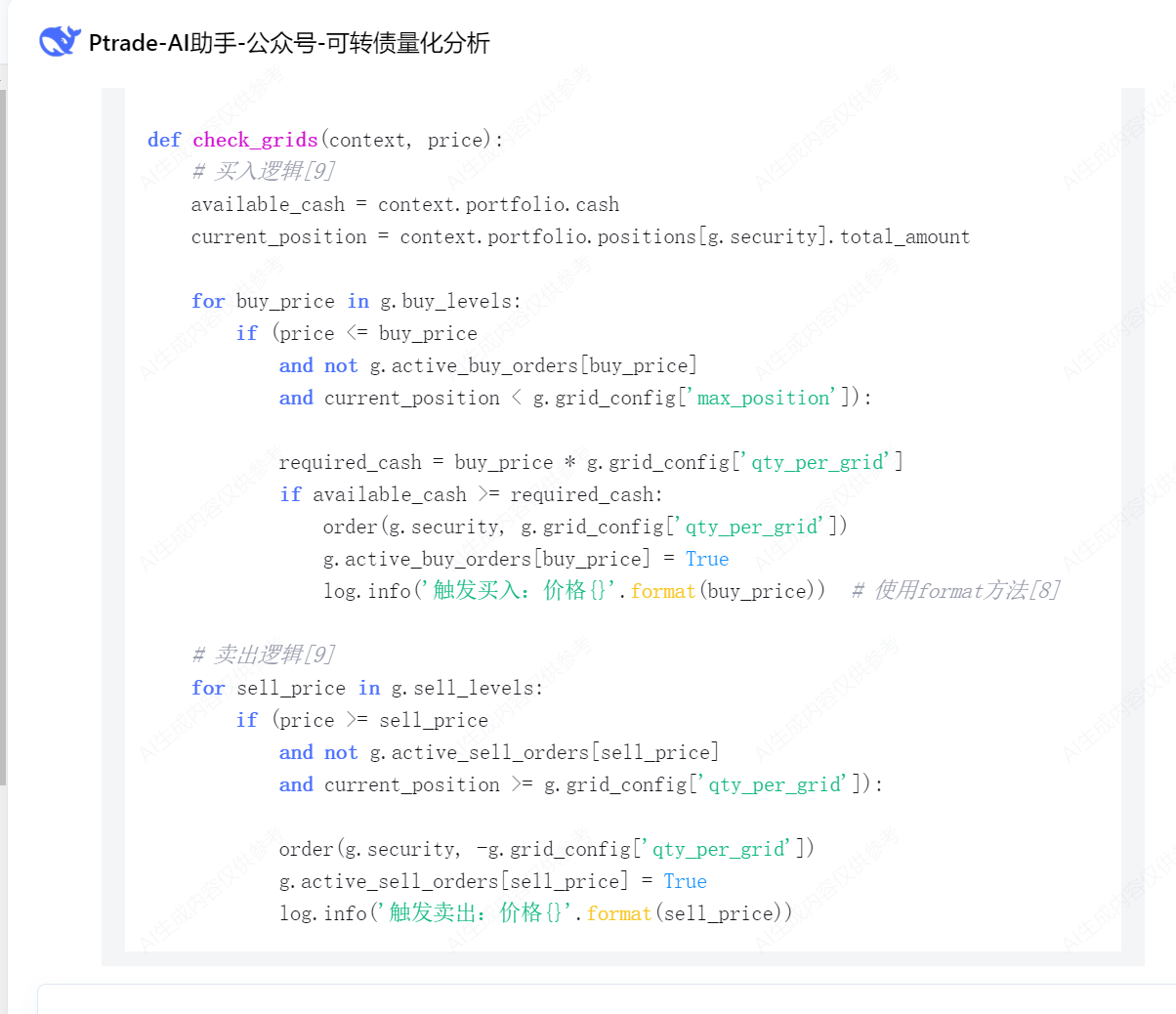

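Now let's ask it to write a Ptrade grid-trading program.

The overall strategy logic and code skeleton are at least mostly sound. It no longer imports nonexistent packages, as in from ptrade_api import *.

There are still bugs in the details, though. For example, when selling it should check enable_amount rather than amount, because shares bought today can't necessarily be sold the same day.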
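The fix boils down to sizing a sell order from the sellable quantity rather than the total position. Below is a plain-Python sketch with a hypothetical helper, `sellable_quantity`. The 100-share lot rounding is a simplifying assumption for main-board A-shares: it sells whole lots, unless everything sellable is being sold. Other boards and the exact odd-lot rules may differ.

```python
def sellable_quantity(wanted, enable_amount, lot_size=100):
    """Size a sell order from the sellable quantity, not the total position.

    enable_amount: shares that can be sold right now (today's buys are excluded),
    as opposed to amount, the total position.
    lot_size: assumed 100-share lot; an odd remainder is kept only when selling everything sellable.
    """
    qty = min(wanted, enable_amount)
    if qty == enable_amount:
        return qty  # selling everything sellable, odd lot included
    return qty // lot_size * lot_size  # otherwise round down to whole lots

print(sellable_quantity(1000, 300))  # 300: only 300 shares are sellable today
print(sellable_quantity(550, 1050))  # 500: rounded down to whole lots
```

In Ptrade this might be fed with `get_position(g.security).enable_amount` and followed by `order(g.security, -qty)`. Check the exact position fields in the API docs before relying on that.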

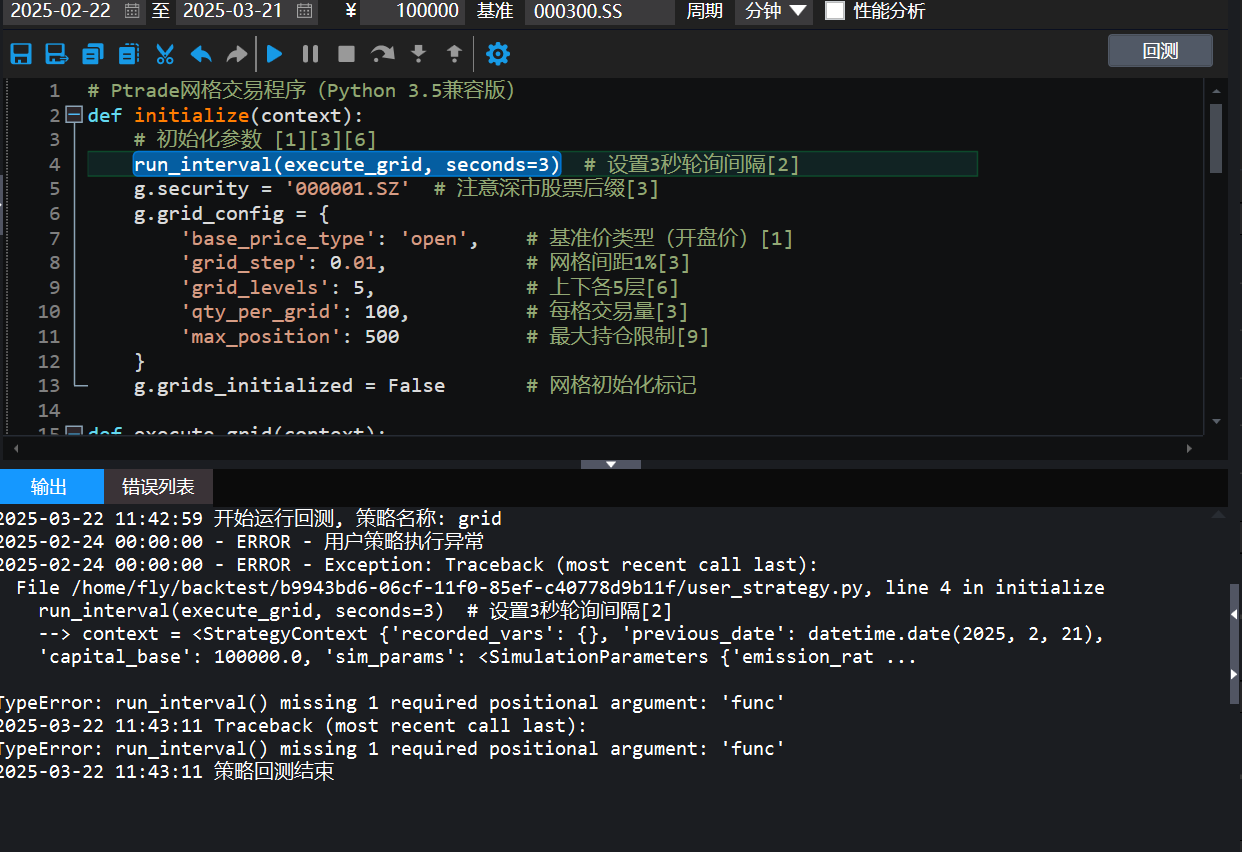

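The call run_interval(execute_grid, seconds=3) also passes the wrong arguments.

And get_price isn't the right call for the current price here; use get_snapshot instead.

Copy the code into Ptrade and run a backtest to check the syntax.

It reports an error, so a small fix is still needed: run_interval(context, execute_grid, seconds=3) does the job.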

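Of course, this code is still some way from live trading and still depends on the asker's skill, but as a coding aid it boosts productivity a lot.

Some self-media writers who have never written complex code keep hyping that AI completely replaces programmers. In reality, it mostly handles basic, demo-level generic tasks. Highly customized business logic still depends on the breadth and depth of the asker's technical knowledge and their grip on details.

Give Deepseek to a homemaker and to Zhang Xiaolong (张小龙). Zhang Xiaolong might well build a WeChat with Deepseek without writing any code himself, while the homemaker could only get Deepseek to draw a WeChat-like UI that doesn't actually run.

Still, as a productivity tool, AI undoubtedly cuts out a lot of repetitive groundwork and raises efficiency, so the strong get stronger.

Readers who want to open Ptrade, QMT, Juejin (掘金), Matic, BigQuant or other quant tools can find contact details in the official account's menu. BigQuant, for example, already has AI-written live trading and backtesting built in. Star the official account to get my latest articles first.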


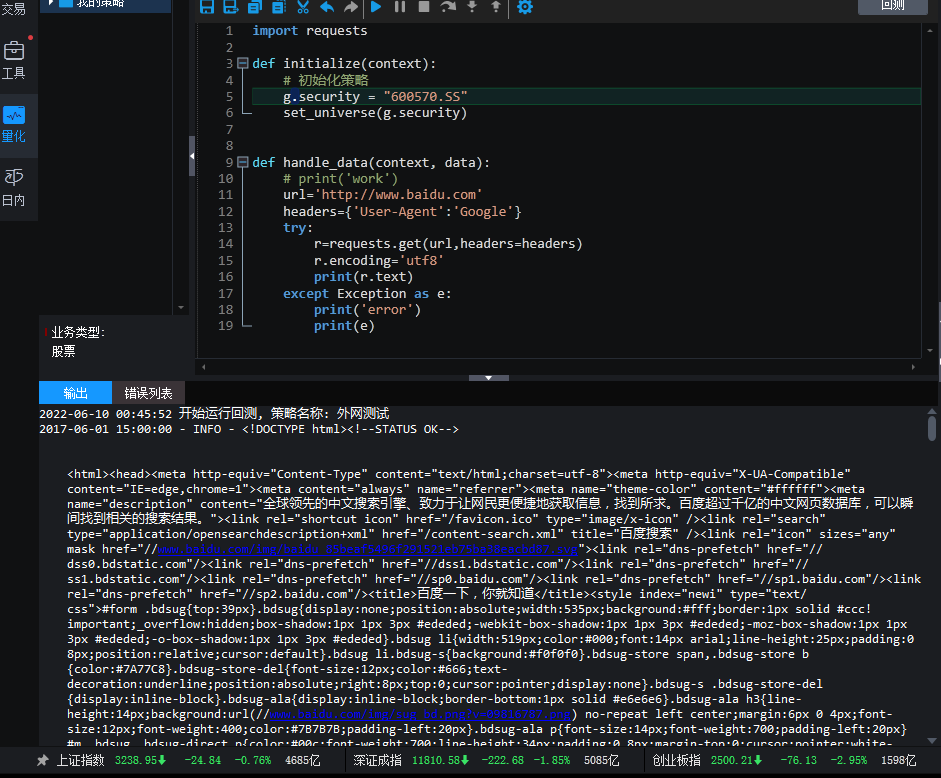

Does Ptrade support trading Beijing Stock Exchange stocks?

Ptrade • 李魔佛 published an article • 0 comments • 1995 views • 2025-03-12 20:25

You can run the code below:

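```python
def initialize(context):
    # Initialize the strategy
    g.security = "600570.SS"
    set_universe(g.security)
    # Run func once a day at 20:13
    run_daily(context, func, '20:13')

def handle_data(context, data):
    print('WeChat official account: 可转债量化分析')

def func(context):
    # Fetch and print the list of A-share codes
    info = get_Ashares(date=None)
    print(info)
```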

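It prints the codes of the Shanghai and Shenzhen A-shares:

Beijing Stock Exchange codes include ones starting with 83. Alternatively, take a known BSE stock code and search for it in the output: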